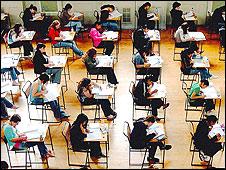

Are exams really getting easier?


Are exams getting easier... or pupils getting better?

It is a key election question: "Are school standards going up or down?"

But, surrounded by conflicting political claims, is it possible to say whether school standards are higher now than 10, 20 or 50 years ago?

The simple answer, and it is not one you often hear from the politicians, is that it is not possible to compare standards, definitively, over long periods of time.

Indeed, as an important seminar on exam standards heard this week, it may even be the wrong question to ask if we really want to know how well our schools are doing.

The seminar, hosted by the examinations group Cambridge Assessment, started with the question: "School exams: have standards really fallen?"

Professor Roger Murphy from Nottingham University told the seminar that every summer, exam results are greeted by claims that standards have fallen.

The evidence of rising pass-rates is, he says, undermined by media-based allegations that exams have become easier.

One solution to this would be to keep the exam papers the same every year.

But exam papers have to change for the simple reason that the curriculum changes all the time.

This is less true in countries like the USA, where exams are less closely tied to the school curriculum.

'Not an exact science'

But in the UK the tradition is for public exams (A-levels, Highers, national curriculum tests and so on) to assess the syllabus that is taught in schools.

And unless we want to insist that what pupils learn should remain unchanged for 10, 20 or 50 years, exams must change too.

Would anyone seriously suggest that students today should still be sitting A-level papers from the 1950s, just to make it possible to assess how standards have changed over time?

There is another reason why it is impossible to say whether exam standards have changed over time: exam grades are not very accurate.

That was the rather surprising verdict of assessment experts at the seminar. They argued that assessment "is not an exact science".

So, for example, the same pupil sitting the same A-level at different times could get different grades on each occasion.

This could be down to variations in grading and marking or the selection of questions set. After all, exam papers do not cover the whole syllabus and students gamble on which areas to revise most thoroughly.

'Mount Everest'

There is also the plain fact that students perform differently on different days.

So, as Professor Murphy concluded, "examination grades are only - and can only ever be - an approximate indication of student achievement".

He also challenged the assumption that an A grade in maths is the same as an A grade in French. As he put it: "It cannot be the same, they are as different as chalk and cheese."

Another speaker, Professor Gordon Stobart, from the Institute of Education, agreed that the standards-over-time debate "goes nowhere" and should be abandoned.

He compared exam standards with climbing Mount Everest.

In 1953 two people got to the top of Everest, an extraordinary achievement at the time.

Yet on a single day in 1996, 39 people stood on the summit.

That might suggest that Everest had become 20 times easier to climb. Yet the mountain remains the same height.

Of course, today people have better equipment, better training, better nutrition and so on. In that sense, it is less surprising that more people can climb Everest.

But while that may make the achievement less exceptional, it does not lower the "standard" of the climb itself.

'Be prepared'

So a growing number of pupils passing exams does not necessarily mean those exams are easier. It may mean that students are better prepared for the exams.

If that means exams are no longer doing the job of picking out the best pupils, we could return to a system where only a set percentage of pupils passed each year.

That was how things worked when A-levels were created: their purpose was to pick out the small percentage of pupils deemed suitable for university.

Roughly the same proportion of candidates passed each year. Even if an exceptional cohort of pupils presented themselves, the pass-rate would remain about the same.

Some people regard those days as a golden age of rigorous exams.

But there are problems. For a start, the exam results would then tell us nothing about how much the nation's pupils knew.

It would also be impossible to judge what was happening to standards over time, as the pass-rate would remain static.

As the seminar grappled with these issues, speakers argued that the problem could be avoided if tests and exams were used for one purpose alone, namely to identify what pupils have learned, rather than being used as an accountability measure for individual schools and the education system as a whole.

Or, as Anastasia de Waal, from the Civitas think-tank, put it: "We need to sever national accountability from the assessment of individual performance."

'Teaching to the test'

This argument goes to the heart of the current row over the national curriculum tests, or SATs, for 11-year-olds.

It is precisely because they are not only an assessment of individuals, but also high-stakes tests used to rank schools, that we now have drilling, or "teaching to the test", which shapes the nature of learning.

So, to avoid this interference with teaching and learning, do we have to sacrifice all attempts to measure national standards?

That would be wrong, as governments and voters need ways of measuring how well school policies are working.

Fortunately, there is another way. A small sample of students, from a representative sample of schools, could be given the same reading or maths test each year.

This would show how standards are changing over time without either constricting curriculum development or creating the high-stakes environment that changes teaching behaviour.

This sort of testing used to happen in England, but it was abandoned decades ago.

There is another, perhaps even more useful way of gauging the health of the school system. That is to compare our standards with other countries.

A couple of big organisations already produce such comparative surveys by setting tests for a representative sample of pupils in each country: the OECD runs the Pisa study, and the IEA runs TIMSS and PIRLS.

Of course, no single international study gives a definitive answer, as the studies measure different things. Some assess factual knowledge, while others focus on applied learning.

But they do provide benchmarks for measuring individual countries' performances.

So, instead of the big furore each year over A-levels, Highers, and GCSEs we should perhaps give more attention to the big international studies.

That would leave school exams and tests to focus on assessing what pupils know rather than being used, inappropriately, as a political football.