False claims of 'deepfake' President Biden go viral

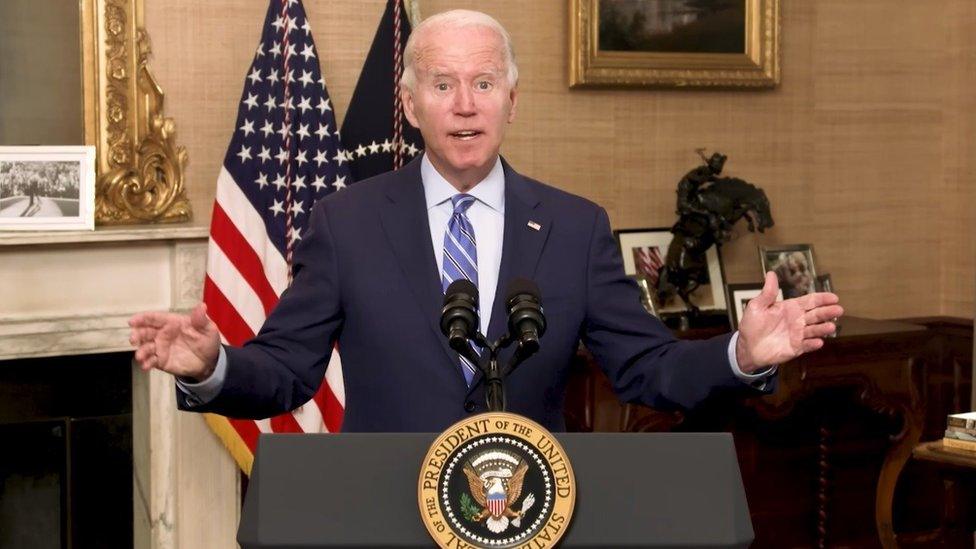

People are falsely claiming a video of US President Joe Biden posted by the Democratic Party is a deepfake.

A deepfake is a video created using artificial intelligence to show someone saying or doing something they didn't do.

We've looked into the video.

What are people claiming?

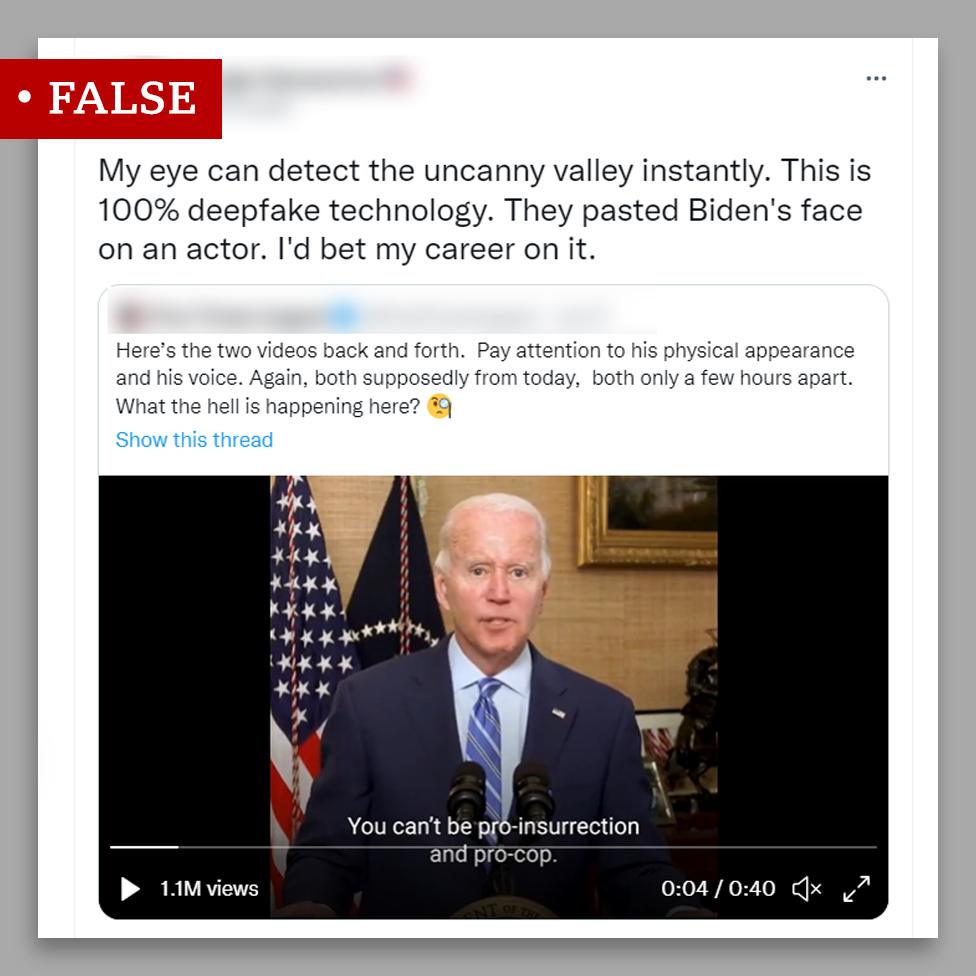

Social media users have claimed the video has been altered to superimpose the president's face on an actor's body using artificial intelligence.

In the 17-second clip, President Biden speaks about the 6 January riot, which saw protesters storm the US Capitol building. The riot is currently being investigated by a congressional panel.

The video was published on the Democrats' official Twitter account on Wednesday, and quickly became part of a wider conspiracy theory.

Posts doubting the legitimacy of the video have been shared thousands of times, including by prominent pundits from the right-wing US television channels Newsmax and One America News.

How do we know it's not fake?

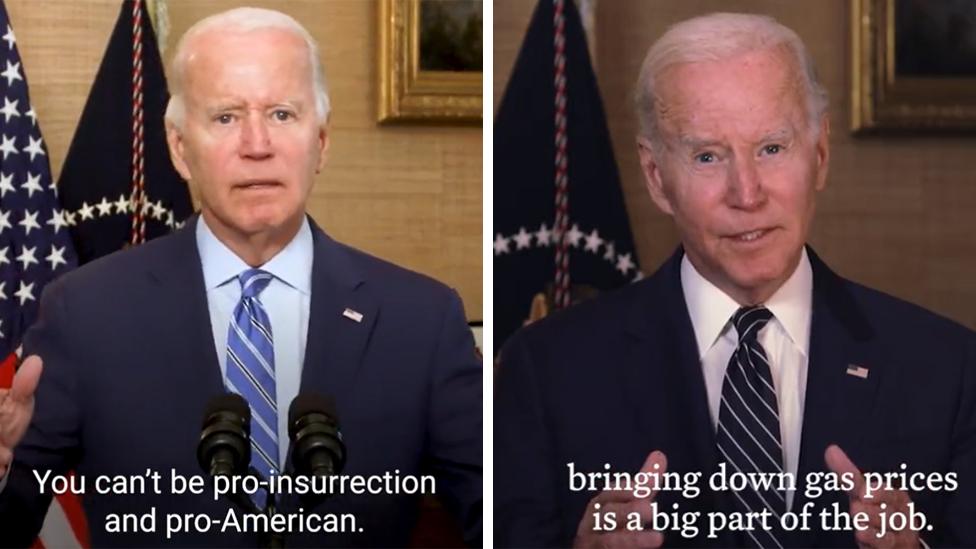

One reason people have cast doubt on the video is that they say President Biden looks different in another video published on the same day, which some people have also claimed is fake.

But the differences in his appearance are clearly due to the lighting used when filming.

You can see the different lighting used in the two videos.

People have also claimed the video is suspicious because he doesn't blink - but that wouldn't prove the video was a deepfake.

One post that has been retweeted almost 40,000 times says "a normal person blinks their eye 15 to 20 times a minute".

People blink approximately 12 times a minute, but it's perfectly possible not to blink for an extended period, far longer than the 17 seconds featured in this video.

There is also a cut halfway through the clip of President Biden tweeted by the Democrats, so he wasn't holding continuous eye contact for the whole time.

A longer version of the video, published by the White House, shows the president blinking several times.

In addition, deepfakes can blink.

"A lot of people think you can spot a deepfake because it doesn't blink, which is actually not correct. A badly made deepfake might not blink, but that is not a categorical sign that it's a deepfake," says Sam Gregory, an expert on deepfakes.

"There was research done three or four years ago which found that deepfakes didn't blink, but technology has since improved to enable deepfakes to blink."

Have we seen similar claims before?

Baseless claims about President Biden being a hologram, CGI, deepfake, masked, cloned, played by a body double or recorded in front of a green screen are not new. They have been spreading on the fringes of the internet for a long time, and have sometimes gone viral.

Similar false claims have also been made about other world leaders, including former President Donald Trump.

Back in March 2021, a video of President Biden answering questions from reporters in front of the White House went viral after false claims were made that he was filmed in front of a green screen.

Most of the people making these claims believe such tricks are being pulled by the White House to shield the president from the public because of his age, ill health, or public gaffes.

Some on the more extreme conspiratorial fringe believe President Biden has been arrested or executed, or that the president is part of a nefarious plot and a puppet for a shadowy evil elite who run the world.

These claims are entirely false. But there is an appetite for such theories in the online world, as this latest viral claim proves.

Although the latest videos are not deepfakes, the technology can be used to artificially manipulate the words and actions of public figures.

Earlier this year a deepfake video circulated of Ukrainian President Volodymyr Zelensky talking of surrendering to Russia.

"In the majority of claims we see globally they are not deepfakes, rather just people wanting to believe something and people not understanding what deepfakes are," says expert Sam Gregory.