AI exam submissions can go undetected - study

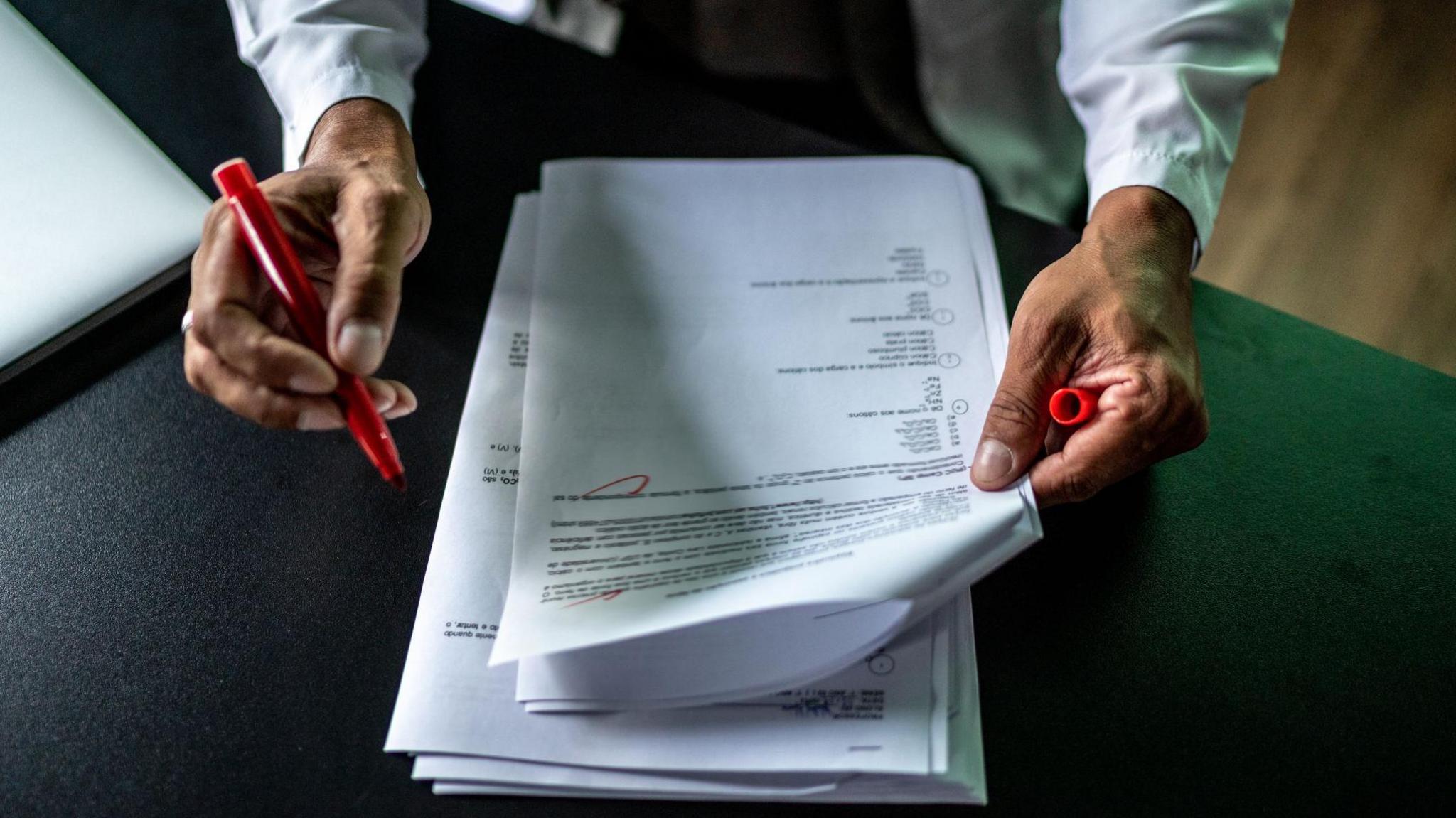

There are worries that students could use AI to write their answers for them


University exam answers generated by artificial intelligence (AI) could be difficult for even experienced markers to spot, a study has found.

The University of Reading research saw AI-generated answers submitted to examiners on behalf of 33 fake students.

Results showed the AI-generated answers were "very difficult" to detect.

It follows concerns that technological advances in AI could lead to a rise in students cheating.

What is AI?

AI is technology that enables a computer to think or act in a more human way.

It does this by taking in information from its surroundings, and deciding its response based on what it learns.

The exams were marked by teachers from the University of Reading

The exams were sent off to be marked by staff from Reading's School of Psychology and Clinical Language Sciences, who were unaware of the study.

About 94% of the AI-generated answers submitted for undergraduate psychology modules went undetected, the research showed.

Prof Peter Scarfe, who worked on the project, said AI did particularly well in the first and second years of study but struggled more in the final-year module.

He said: "The data in our study shows it is very difficult to detect AI-generated answers.

"There has been quite a lot of talk about the use of so-called AI detectors, which are also another form of AI but [the scope here] is limited."

The study also found that, on average, AI achieved higher grades than humans.

In most cases, the AI-generated answers went undetected by examiners

Prof Scarfe said the findings should serve as a "wake-up call" for schools, colleges and universities, as AI tools evolve.

In 2023, Russell Group universities, including Oxford and Cambridge, pledged to allow ethical use of AI in teaching and assessments.

But Prof Scarfe said the education sector would need to constantly adapt and update guidance as AI becomes more sophisticated.

He said universities should focus on working out how to embrace the "new normal" of AI in order to enhance education.

