Video app TikTok fails to remove online predators

Video-sharing app TikTok is failing to suspend the accounts of people sending sexual messages to teenagers and children, a BBC investigation has found.

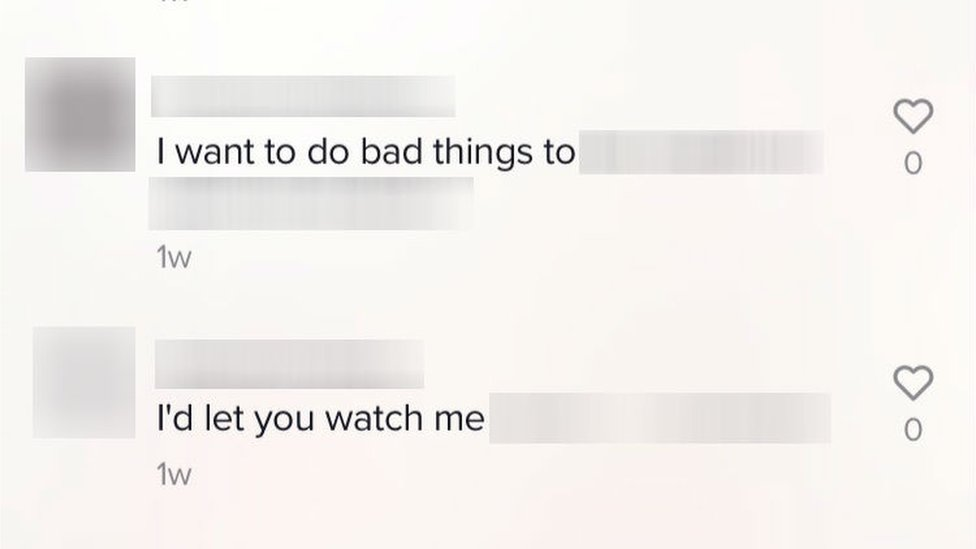

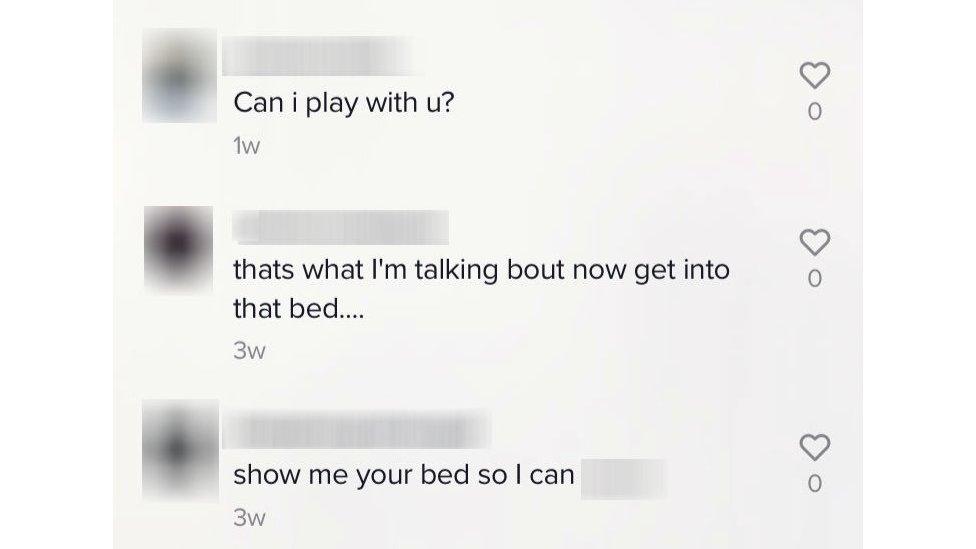

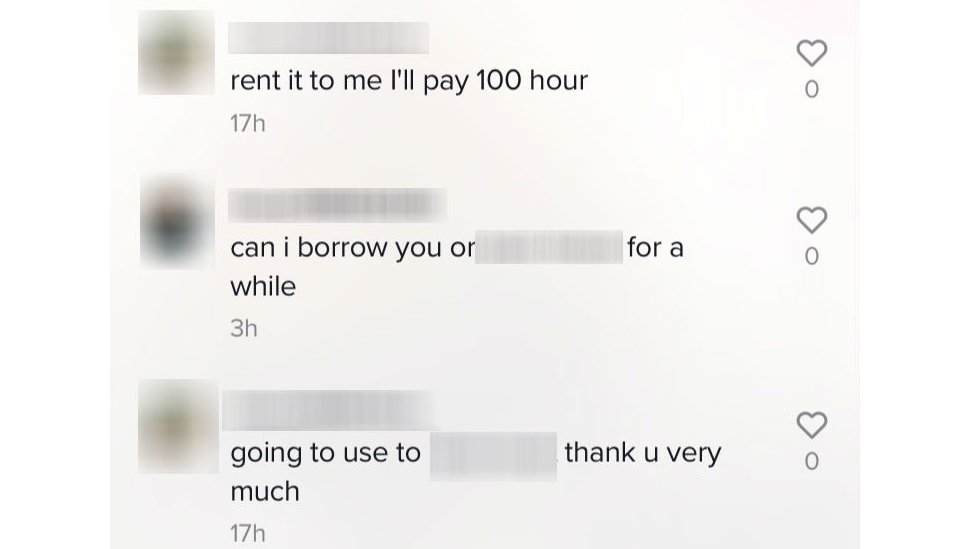

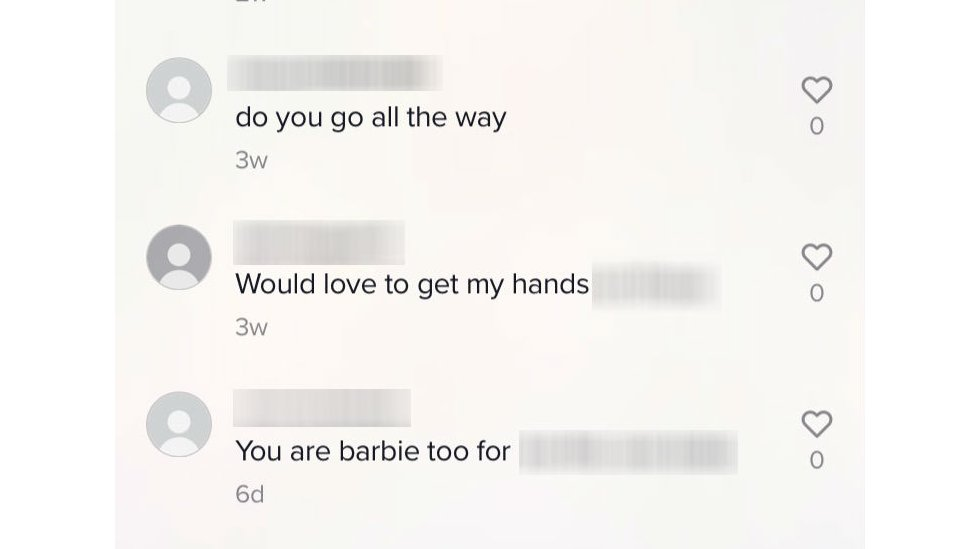

Hundreds of sexually explicit comments have been found on videos posted by children as young as nine.

While the company deleted the majority of these comments when they were reported, most users who posted them were able to remain on the platform, despite TikTok's rules against sexual messages directed at children.

The BBC collected and reported hundreds of sexually explicit comments

TikTok says that child protection is an "industry-wide challenge" and that promoting a "safe and positive app environment" remains the company's top priority.

England's Children's Commissioner Anne Longfield says she will request a meeting with TikTok to discuss the findings of the BBC's investigation.

"I want children to be able to enjoy everything that the app can offer, but we need to make sure that those responsibilities are taken seriously," she says.

How bad is the problem?

TikTok has become hugely popular with teenagers. It allows people to post short videos of themselves lip-syncing and dancing to their favourite songs, performing short comedy skits or completing challenges.

The company says it has more than 500 million monthly active users around the world, but TikTok refused to tell the BBC how many of those are in the UK.

TikTok ran a huge advertising campaign in the UK earlier this year

Over three months, BBC Trending collected hundreds of sexual comments posted on videos uploaded by teenagers and children.

We reported the comments to TikTok using the same tools available to any user of the app.

The language used ranged from sexually suggestive to extremely explicit

TikTok's community guidelines forbid users from using "public posts or private messages to harass underage users" and say that if the company becomes "aware of content that sexually exploits, targets, or endangers children" it may "alert law enforcement or report cases".

While the majority of sexual comments were removed within 24 hours of being reported, TikTok still failed to remove a number of messages that were clearly inappropriate for children.

And even though many of the comments themselves were taken down, the vast majority of accounts that had sent sexual messages were still active on the app.

Staying safe online

The NSPCC has a series of guidelines about keeping children safe online.

They promote the acronym TEAM: Talk about staying safe online; Explore the online world together; Agree rules about what's OK and what's not; and Manage your family's settings and controls.

There are even more resources on the BBC Stay Safe site.

Are predators being allowed to remain online?

The BBC was also able to identify a number of users who repeatedly approached teenage girls online, posting sexually explicit messages on their videos.

Some users repeated their sexual comments across a number of TikTok videos

"These are individuals who are using these platforms to try to get access in some way to young children," says Ms Longfield, the children's commissioner.

While many users hide behind anonymous profiles to send disturbing messages, others (often adult men) use what appear to be their real names and photos and upload their own videos on the app.

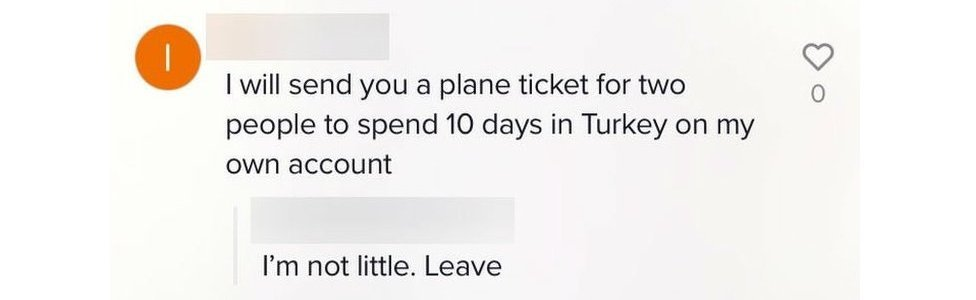

Some users tried to lure children with offers of holidays

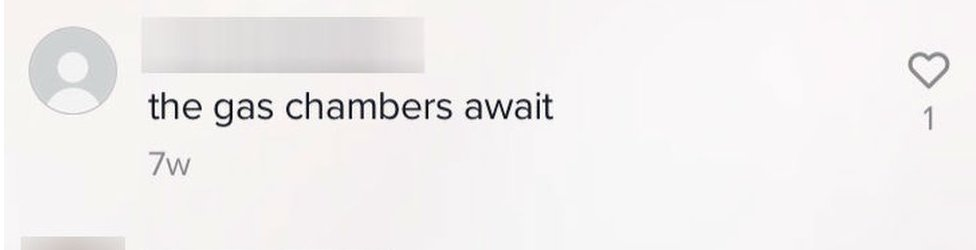

And while our investigation focused on sexually explicit comments, we also found instances where children were sent threatening or violent messages.

The BBC has also come across misogynist, racist, homophobic, and anti-Semitic messages on TikTok

Chris has a 10-year-old son who went on TikTok in January, without his parents' knowledge.

One day, TikTok messages began popping up on his son's phone. "They were like 'do not ignore me', swearing... 'I know who you are and I'll come and get you'," Chris recalls.

The sender was an adult male. While he had not included any sexual content in his messages, Chris wonders: "If [my son] had started engaging in conversation, what could have been next?"

Chris has since deleted the app from his son's phone and informed his school of the incident.

"It's disgusting," he says. "TikTok's got a responsibility now and if people are getting on there and seeing messages like this should be contacting the police at the very least."

How safe do users feel?

Emily Steers and Lauren Kearns, from Northamptonshire, are both 15 years old. Together, they run a TikTok channel with more than a million followers.

Emily and Lauren dancing as they film themselves on TikTok

"People come up to us and ask for pictures... It's really weird," Lauren says.

They say most of the messages they get from their fans online are harmless, but among the hundreds of comments posted on their videos, we also found sexual messages.

As part of our investigation, we reported one of them to TikTok, but after 24 hours the company had failed to take down the comment or remove the user who posted it.

"It is a bit worrying," says Emily's father, Mark. "When they do catch people saying bad things or sexual things, they should have the power to block them or actually take them away straightaway."

Emily's dad, Mark, says he won't let his daughter go on TikTok without supervision until she's older

Are some kids on TikTok too young?

Earlier this year, TikTok was hit with a $5.7m (£4.3m) fine in the US after it was found to have illegally collected personal information of children under 13 who had been using the app.

The case highlighted how easy it had become for young children to join TikTok, despite the company's terms of use, which say that 13 is the minimum age to join the platform.

As a result of the fine, TikTok has been asking some users in the US to verify their age by sending the company identification.

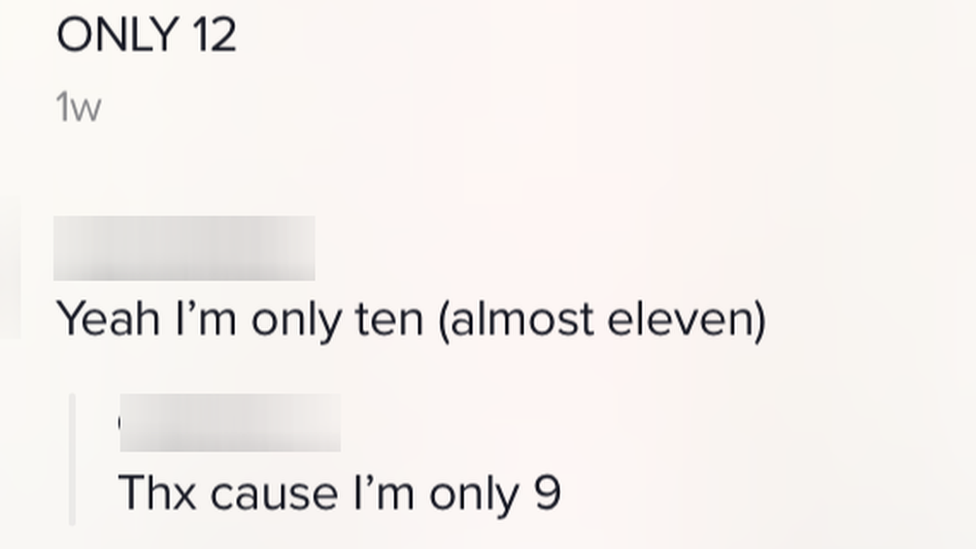

In the course of our investigation, the BBC came across several accounts run by children under 13 - some as young as nine. TikTok has no plans to start verifying the age of users in the UK.

In the UK, the Information Commissioner's Office told the BBC it is investigating TikTok, but did not provide further details about the focus of its investigation.

And in India, a court ruling on Wednesday asked the government to ban TikTok because of concerns about "pornography" and child protection.

Children under age 13 use TikTok despite the company's rules

"We need to have robust age verification tools in place," says Damian Collins MP, chairman of the Commons Digital, Culture, Media and Sport Committee. "The age policies are meaningless if they don't have the ability to really check whether people are the age or not.

"We've been discussing content regulation with a number of different social media companies and will certainly be taking a good look at what's been happening at TikTok," he says.

Damian Collins: "This is really serious abuse and it needs to be stopped."

What is TikTok's response?

TikTok declined the BBC's request for an interview.

In a statement, the company said: "We are committed to continuously enhancing our existing measures and introducing additional technical and moderation processes in our ongoing commitment to our users."

"We take escalating actions ranging from restricting certain features up to banning account access, based on the frequency and severity of the reported content. In addition, we have multiple proactive approaches that look for potentially problematic behaviour and take action including terminating accounts that violate our Terms of Service."

TikTok says it uses a combination of technology and human moderation to remove content. The company refused to say how many moderators it employs.

The Children's Commissioner for England told the BBC that she will seek a meeting with TikTok to discuss the findings of our investigation. And she also says parents have a key role to play in keeping their children safe online.

Children's Commissioner for England, Anne Longfield, says she will write to the company

"So much is often hid in the comments for many of these things and that's actually where the danger can lie," Anne Longfield says. "Be very proactive, complain about it to the company and demand action."

If you would like advice on how to stay safe online, you can find it on BBC Own It (for children) and on the NSPCC website (for parents).

If you have been affected by any of the issues raised in this story, you can look for help and information on the BBC's Action Line.

Do you have a story? Send us an email.

You can follow BBC Trending on Twitter @BBCtrending, and find us on Facebook. All our stories are at bbc.com/trending.