The iPhone at 10: How the smartphone became so smart


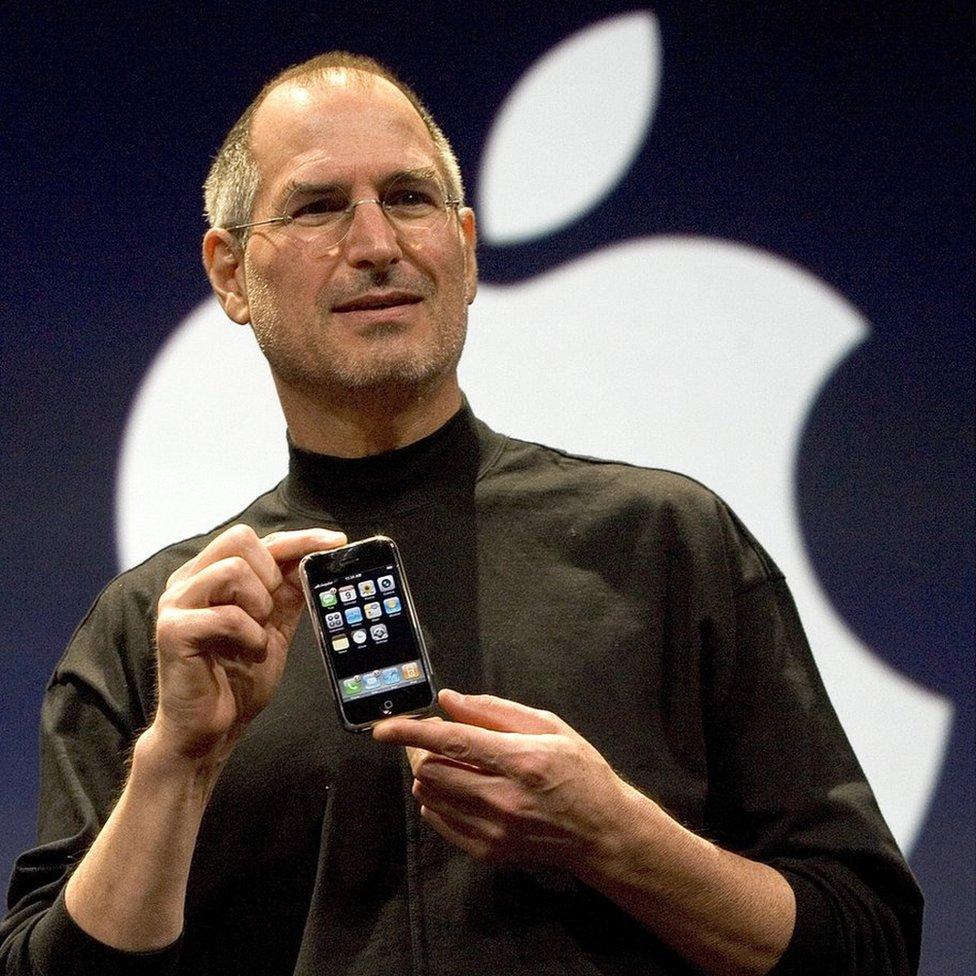

On 9 January 2007, one of the most influential entrepreneurs on the planet announced something new - a product that was to become the most profitable in history.

It was, of course, the iPhone. There are many ways in which the iPhone has defined the modern economy.

There is the sheer profitability of the thing, of course: there are only two or three companies in the world that make as much money as Apple does from the iPhone alone.

Apple may not have sold the first smartphone, but the iPhone represented a quantum leap compared with earlier models, and its version became an object of desire for most of humanity.

There's the way the iPhone transformed other markets - software, music, and advertising.

But those are just the obvious facts about the iPhone. And when you delve more deeply, the tale is a surprising one. We give credit to Steve Jobs and other leading figures in Apple - his early partner Steve Wozniak, his successor Tim Cook, his visionary designer Sir Jony Ive - but some of the most important actors in this story have been forgotten.

Find out more

50 Things That Made the Modern Economy highlights the inventions, ideas and innovations which have helped create the economic world we live in.

It is broadcast on the BBC World Service. You can find more information about the programme's sources and listen online or subscribe to the programme podcast.

Ask yourself: what actually makes an iPhone an iPhone? It's partly the cool design, the user interface, the attention to detail in the way the software works and the hardware feels. But underneath the charming surface of the iPhone are some critical elements that made it, and all the other smartphones, possible.

The economist Mariana Mazzucato has made a list of 12 key technologies that make smartphones work: 1) tiny microprocessors, 2) memory chips, 3) solid state hard drives, 4) liquid crystal displays and 5) lithium-based batteries. That's the hardware.

Then there are the networks and the software. So 6) Fast-Fourier-Transform algorithms - clever bits of maths that make it possible to swiftly turn analogue signals such as sound, visible light and radio waves into digital signals that a computer can handle.

At 7) - and you might have heard of this one - the internet. A smartphone isn't a smartphone without the internet.

At 8) HTTP and HTML, the languages and protocols that turned the hard-to-use internet into the easy-to-access World Wide Web. 9) Cellular networks. Otherwise your smartphone not only isn't smart, it's not even a phone. 10) Global Positioning Systems or GPS. 11) The touchscreen. 12) Siri, the voice-activated artificial intelligence agent.

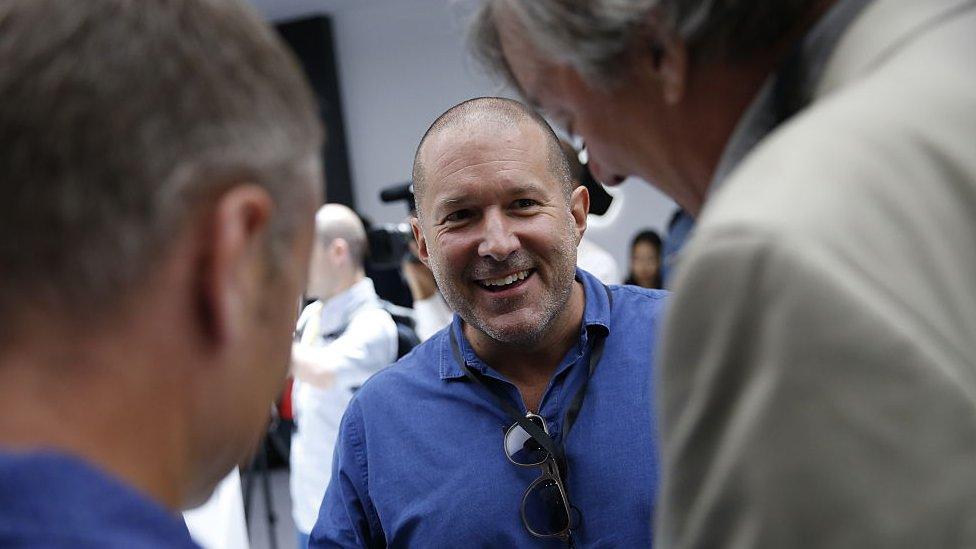

Apple's designer Sir Jony Ive has been widely lauded for his contribution to the iPhone's success

All of these technologies are important components of what makes an iPhone, or any smartphone, actually work. Some of them are not just important, but indispensable. But when Mariana Mazzucato assembled this list of technologies, and reviewed their history, she found something striking.

The foundational figure in the development of the iPhone wasn't Steve Jobs. It was Uncle Sam. Every single one of these 12 key technologies was supported in significant ways by governments - often the American government.

A few of these cases are famous. Many people know, for example, that the World Wide Web owes its existence to the work of Sir Tim Berners-Lee. He was a software engineer employed at Cern, the particle physics research centre in Geneva that is funded by governments across Europe.

And the internet itself started as Arpanet - an unprecedented network of computers funded by the US Department of Defense in the late 1960s. GPS, of course, was a pure military technology, developed during the Cold War and opened up to civilian use only in the 1980s.

Other examples are less famous, though scarcely less important.

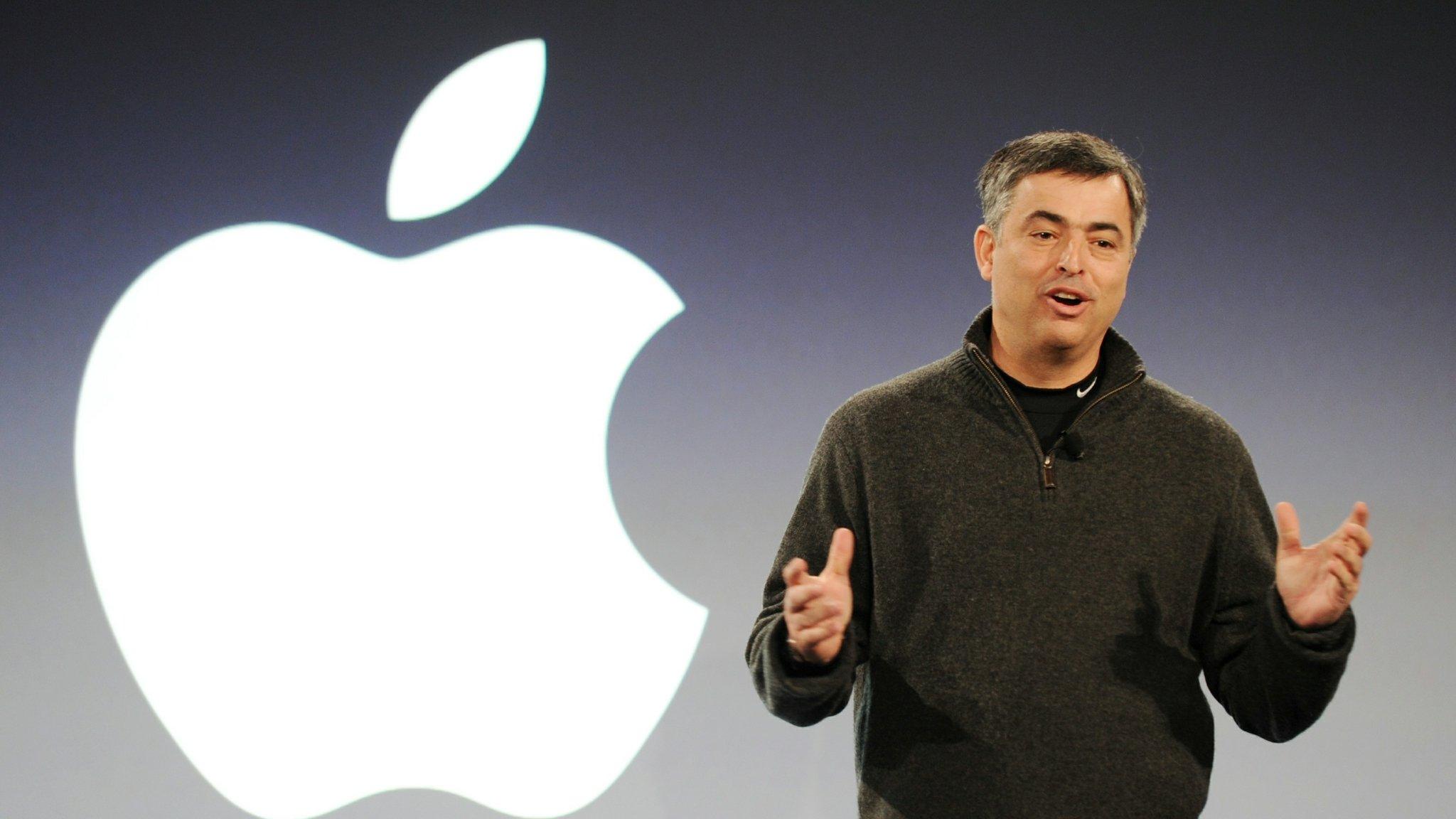

Smartphones have all benefited from government investment in technology

The Fast-Fourier-Transform is a family of algorithms that have made it possible to move from a world where the telephone, the television and the gramophone worked on analogue signals, to a world where everything is digitised and can therefore be dealt with by computers such as the iPhone.

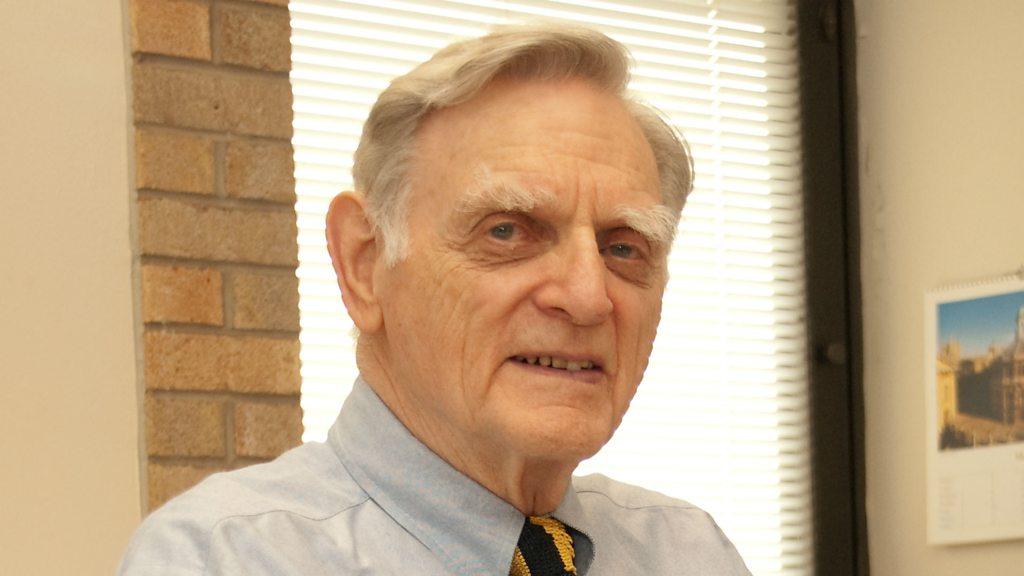

The most common such algorithm grew out of a flash of insight by the great American mathematician John Tukey. What was Tukey working on at the time? You've guessed it: a military application.

Specifically, he was on President Kennedy's Science Advisory Committee in 1963, trying to figure out how to detect when the Soviet Union was testing nuclear weapons.

Smartphones wouldn't be smartphones without their touchscreens - but the inventor of the touchscreen was an engineer named EA Johnson, whose initial research was carried out while he was employed by the Royal Signals and Radar Establishment, a stuffily-named agency of the British government.

The work was further developed at Cern - those guys again. Eventually multi-touch technology was commercialised by researchers at the University of Delaware in the United States - Wayne Westerman and John Elias, who sold their company to Apple itself.

Touchscreen technology has gone on to drive the development of tablet computers

Yet even at that late stage in the game, governments played their part: Wayne Westerman's research fellowship was funded by the US National Science Foundation and the CIA.

Then there's the girl with the silicon voice, Siri.

Back in the year 2000, seven years before the first iPhone, the US Defence Advanced Research Projects Agency, Darpa, commissioned the Stanford Research Institute to develop a kind of proto-Siri, a virtual office assistant that might help military personnel to do their jobs.

Twenty universities were brought into the project, furiously working on all the different technologies necessary to make a voice-activated virtual assistant a reality.

Seven years later, the research was commercialised as a start-up, Siri Incorporated - and it was only in 2010 that Apple stepped in to acquire the results for an undisclosed sum.

Increasingly sophisticated lithium-ion batteries have been essential for smartphone growth

As for hard drives, lithium-ion batteries, liquid crystal displays and semiconductors themselves - there are similar stories to be told.

In each case, there was scientific brilliance and plenty of private sector entrepreneurship. But there were also wads of cash thrown at the problem by government agencies - usually US government agencies, and for that matter, usually some arm of the US military.

Silicon Valley itself owes a great debt to Fairchild Semiconductor - the company that developed the first commercially practical integrated circuits. And Fairchild Semiconductor, in its early days, depended on military procurement.

Of course, the US military didn't make the iPhone. Cern did not create Facebook or Google. These technologies, which so many people rely on today, were honed and commercialised by the private sector. But it was government funding and government risk-taking that made all these things possible.

That's a thought to hold on to as we ponder the technological challenges ahead in fields such as energy and biotechnology.

Steve Jobs was a genius, there's no denying that. One of his remarkable side projects was the animation studio Pixar - which changed the world of film when it released the digitally animated film, Toy Story.

Even without the touchscreen and the internet and the Fast-Fourier-Transform, Steve Jobs might well have created something wonderful.

But it would not have been a world-shaking technology like the iPhone. More likely it would, like Woody and Buzz, have been an utterly charming toy.

Tim Harford is the FT's Undercover Economist. 50 Things That Made the Modern Economy was broadcast on the BBC World Service.

Correction: An earlier version of this story suggested the iPhone was the first smartphone, but other smartphones had predated its launch in 2007.
