Coronavirus: Facebook accused of forcing staff back to offices

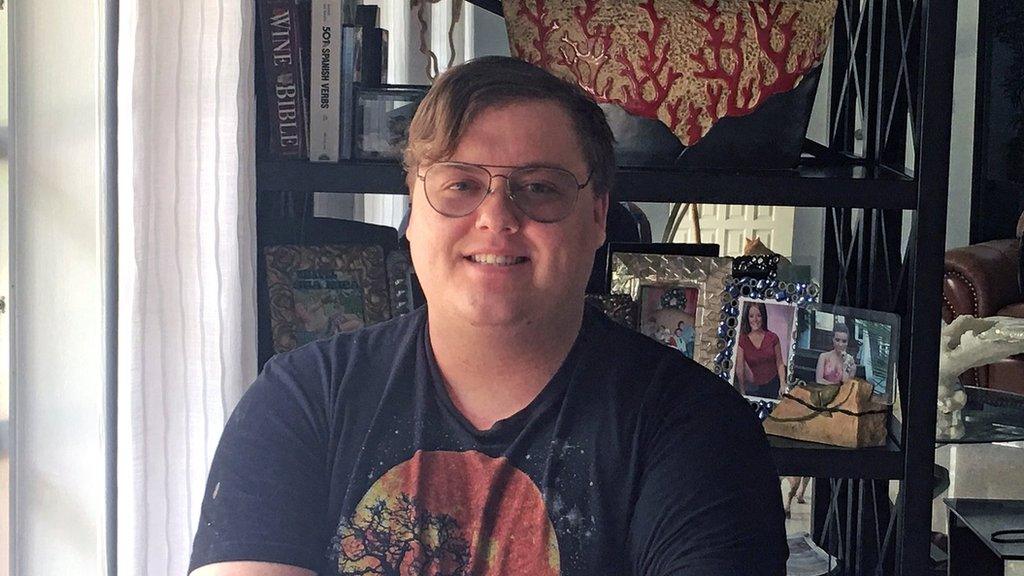

Content moderators say they have Facebook's "most brutal job"

More than 200 Facebook workers from around the world have accused the firm of forcing its content moderators back to the office despite the risks of contracting coronavirus.

The claims came in an open letter that said the firm was "needlessly risking" lives to maintain profits.

They called on Facebook to make changes to allow more remote work and offer other benefits, such as hazard pay.

Facebook said "a majority" of content reviewers are working from home.

"While we believe in having an open internal dialogue, these discussions need to be honest," a spokesperson for the company said.

"The majority of these 15,000 global content reviewers have been working from home and will continue to do so for the duration of the pandemic."

In August, Facebook said staff could work from home until the summer of 2021.

But the social media giant relies on thousands of contractors, who officially work for other companies such as Accenture and CPL, to spot materials on the site that violate its policies, such as spam, child abuse and disinformation.

The letter comes a day after Mr Zuckerberg was grilled by Washington lawmakers over Facebook's handling of problematic posts.

In the open letter, the workers said the call to return to the office had come after Facebook's efforts to rely more on artificial intelligence to spot problematic posts had come up short.

"After months of allowing content moderators to work from home, faced with intense pressure to keep Facebook free of hate and disinformation, you have forced us back to the office," they said.

"Facebook needs us. It is time that you acknowledged this and valued our work. To sacrifice our health and safety for profit is immoral."

This letter gives a fascinating behind-the-scenes glimpse into what is happening at Facebook - and all is not well.

Mark Zuckerberg's dream is that AI moderation will one day solve some of the platform's problems.

The idea is that machine learning and sophisticated software will automatically pick up and block things like hate speech or child abuse.

Facebook claims that nearly 95% of offending posts are picked up before they are flagged.

Yet it's still easy to find grim stuff on Facebook.

On Monday I published a piece showing the kinds of racist and misogynistic content aimed at Kamala Harris on the platform.

Facebook removed some of the content. However, even though I flagged it to the company, some of it is still there, a week after I reported it.

What this letter suggests is that AI is simply not working as Facebook execs would hope.

Of course, these are voices of moderators - Facebook will have a different take.

You could also argue that human moderators may have a vested interest in saying AI doesn't work.

But clearly, as the spotlight is well and truly on Facebook, there are internal problems that have now spilled out into the open.

Facebook said the reviewers have access to health care and that it had "exceeded health guidance on keeping facilities safe for any in-office work".

But the workers said only those with a doctor's note are currently excused from working in an office and called on Facebook to offer hazard pay and make its contractors full-time staff.

"Before the pandemic, content moderation was easily Facebook's most brutal job. We waded through violence and child abuse for hours on end. Moderators working on child abuse content had targets increased during the pandemic, with no additional support," they said.

"Now, on top of work that is psychologically toxic, holding onto the job means walking into a hot zone."

The letter is addressed to Facebook boss Mark Zuckerberg and chief operating officer Sheryl Sandberg, as well as the chiefs of Accenture and CPL. It was organised by UK law firm Foxglove, which works on tech policy issues. More than 170 of the signatories were anonymous.

Facebook is not the only company to face staff worries about in-person work amid the pandemic.

Amazon has also come under fire for conditions in its warehouses, while outbreaks at firms from manufacturers to finance companies have stirred fears.

The letter comes just a day after Washington lawmakers grilled Mr Zuckerberg on the firm's content review policies.
