Election results: How did pollsters get it so wrong?


I monitored 91 GB-wide voting intention polls during the 2015 election campaign and found nothing in them to prepare me for the final outcome.

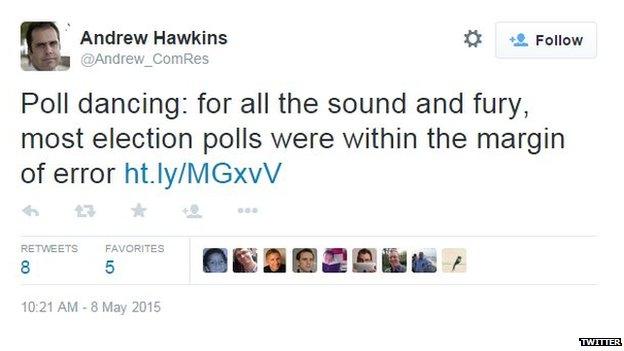

Most of them were so close to one another that the differences between them fell within the margin of error.
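As a rough guide to what "margin of error" means here, the textbook formula below gives the 95% margin for a single party's share. It assumes a simple random sample of 1,000 respondents, a sample size chosen purely for illustration. Real polls use quotas, online panels and weighting, so their true margins differ from this idealised figure.

$$
\text{MoE} = 1.96\sqrt{\frac{p(1-p)}{n}}, \qquad p = 0.5,\; n = 1000 \;\Rightarrow\; \text{MoE} = 1.96\sqrt{\frac{0.25}{1000}} \approx 0.031
$$

That is roughly plus or minus 3 percentage points on each party's share. The margin on the gap between two parties is wider still.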

At one stage there seemed to be a difference between methodologies, with telephone polls suggesting stronger Conservative leads than those conducted online.

However, in the final polls before election day these differences seemed to disappear, as the BBC's poll tracker demonstrates.

A systematic overstatement of Labour share

The good news for the pollsters was that their estimates of the Lib Dem share were pretty close, as were those for UKIP, the Greens and the catch-all category of Others.

The bad news for the polling industry was that the crucial Conservative and Labour shares were the ones where the polls went most adrift.

Even allowing for margins of error, there did appear to be a systematic overstatement of the Labour share and an equally systematic understatement of the Conservative one.

In the four elections from 1992 to 2005, the final polls overstated Labour; they did not do so in the fifth, in 2010, so 2015 looks like a reversion to type.

Looking at the final polls of the nine companies that published them, I have added together each poll's errors on the Conservative and Labour shares, measured against the actual outcome for both parties. These combined differences range from 6% to 9%.

Eight of them overstated Labour's share and all nine understated the Conservative share.
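The comparison above can be sketched in a few lines of code. This is a minimal illustration, assuming that "combined difference" means the sum of the absolute Conservative and Labour errors for each poll. The pollster labels and all of the figures are hypothetical placeholders, not the actual 2015 final polls or results.

```python
from dataclasses import dataclass


@dataclass
class FinalPoll:
    pollster: str  # hypothetical label, not a real company
    con: float     # Conservative share in the poll, %
    lab: float     # Labour share in the poll, %


def combined_error(poll: FinalPoll, actual_con: float, actual_lab: float) -> float:
    """Sum of the absolute Conservative and Labour errors, in percentage points."""
    return abs(poll.con - actual_con) + abs(poll.lab - actual_lab)


def summarise(polls, actual_con, actual_lab):
    """Return (smallest combined error, largest combined error,
    number of polls overstating Labour, number understating the Conservatives)."""
    errors = [combined_error(p, actual_con, actual_lab) for p in polls]
    over_lab = sum(p.lab > actual_lab for p in polls)
    under_con = sum(p.con < actual_con for p in polls)
    return min(errors), max(errors), over_lab, under_con


# Hypothetical figures for illustration only
ACTUAL_CON, ACTUAL_LAB = 38.0, 31.0
polls = [
    FinalPoll("Pollster A", 34.0, 34.0),
    FinalPoll("Pollster B", 33.0, 33.0),
    FinalPoll("Pollster C", 35.0, 30.0),
]

if __name__ == "__main__":
    print(summarise(polls, ACTUAL_CON, ACTUAL_LAB))  # (4.0, 7.0, 2, 3)
```

Counting the direction of each error separately from its size is what separates a systematic bias (every poll missing the same way) from random noise that happens to be large.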

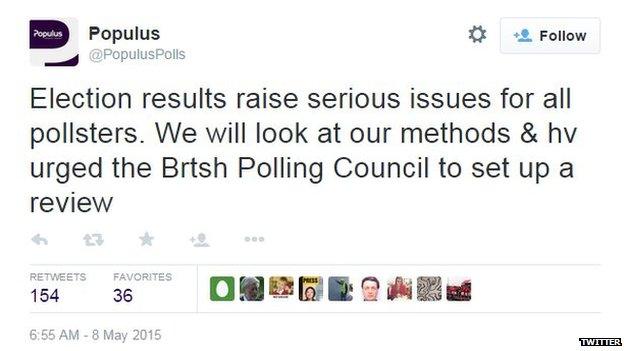

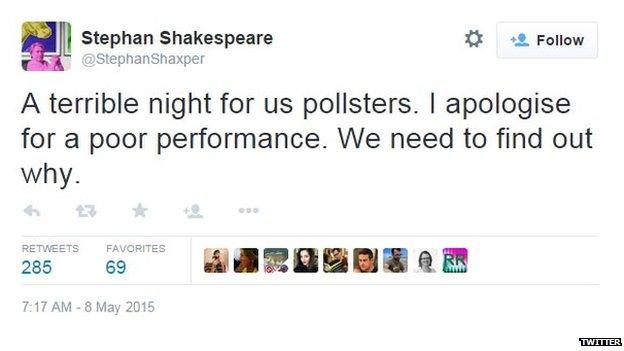

Pollsters react to the results

How on Earth did this happen?

If there was any late shift to the Conservatives, the polls did not pick it up. If anything, earlier small signs of a Conservative increase seemed to stall in the final week.

Was it shy Conservatives? But why should Conservative voters be alone in being reluctant to declare themselves?

Where was the Labour zeitgeist in this campaign that would have made its voters more eager to proclaim their allegiance?

Why would Lib Dem voters be less reluctant to proclaim their party allegiance than Conservatives?

And given the assault on UKIP from every quarter, why would their voters not be the most shy of all?

'Polling firms must avoid knee-jerk denial'

It is for the pollsters to decide whether to review their performance, but I trust they will avoid the knee-jerk reaction that followed the 1992 polls debacle.

At that time some tried to deny there was really a problem at all: it was all down to external forces (for example, late swing) and no fault of the polling companies. That position quickly proved unsustainable.

Despite some pollsters being in denial, others realised there was a problem and set about finding out how to fix it.

The industry carried out a comprehensive investigation of the methodologies it had used in 1992, and many of the ingredients of current political polling were introduced as a result of that serious study.

This general election performance follows that of the polls in the 2014 Scottish referendum, where the choice was simply binary and yet all the polls were out by between 4% and 6%.

Something is wrong. A lot of us would like to know what it is.
