How accurate is the Pisa test?

The Pisa league table, which ranks test results of students from 65 countries, is taken very seriously by policymakers and the media, who celebrate a good performance and bemoan a poor one. But how accurate is it?

Every three years, results are published from tests in maths, reading, science and problem-solving taken by about half a million 15-year-old schoolchildren from around the world.

The OECD, which runs Pisa, the Programme for International Student Assessment, publishes a report analysing the performance of the different countries.

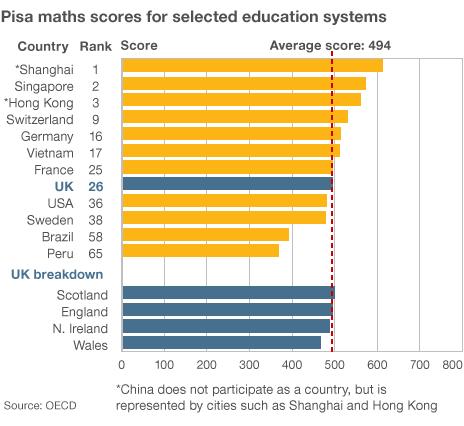

This year the five top performers are all in Asia: Shanghai is at the top of the class (China is not assessed on a country-wide basis), followed by Singapore, Hong Kong, Taiwan and South Korea.

Other consistently strong performers are Finland and Switzerland.

Countries like the US and the UK are middling - yet again. And Peru languishes at the bottom of the league, with the likes of Indonesia, Brazil and Tunisia.

Tunisia, by the way, is the only African country that takes part.

But how does the test work?

About 4,000 children in each of the 65 countries are subjected to the test, which lasts for two hours.

But only a small number of pupils in each school answer the same set of questions.

The reason for this is that Pisa wants to measure a comprehensive set of skills and abilities, so it draws up more questions than a single child could answer (about four-and-a-half hours' worth) and distributes them between different exam papers.

Pisa then uses a statistical model, called the Rasch model, to estimate each student's latent ability. It also uses each student's answers to extrapolate how that student would have fared on all the other questions, had he or she been given them. These imputed estimates are known as "plausible values".
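To make the idea concrete: under the Rasch model, the chance that a student of ability θ answers a question of difficulty b correctly is 1 / (1 + exp(-(θ - b))). The Python sketch below is a simplified illustration, not Pisa's actual procedure, which is more elaborate. It estimates one student's ability by maximum likelihood from only the questions on that student's paper, treating the difficulties as fixed, known constants, and then predicts how the student would fare on the questions he or she never saw. The helper names `rasch_prob` and `estimate_ability`, and all the numbers, are hypothetical.

```python
import math


def rasch_prob(theta, b):
    """Rasch model: probability that a student of ability theta
    answers a question of difficulty b correctly."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def estimate_ability(responses, difficulties, iterations=50):
    """Maximum-likelihood ability estimate by Newton-Raphson.

    responses: 1 (correct), 0 (wrong) or None (question not on this
    student's paper). The difficulties are treated as fixed, known
    constants, the assumption Spiegelhalter questions.
    """
    answered = [(x, b) for x, b in zip(responses, difficulties) if x is not None]
    score = sum(x for x, _ in answered)
    if score == 0 or score == len(answered):
        raise ValueError("no finite estimate for all-wrong or all-right answers")
    theta = 0.0
    for _ in range(iterations):
        probs = [rasch_prob(theta, b) for _, b in answered]
        gradient = score - sum(probs)                  # first derivative of log-likelihood
        information = sum(p * (1 - p) for p in probs)  # minus the second derivative
        theta += gradient / information
    return theta


# Hypothetical difficulties for six questions; this student's paper
# contained only four of them (None = not given).
difficulties = [-1.0, -0.5, 0.0, 0.5, 1.0, 1.5]
responses = [1, None, 1, 0, None, 0]

theta = estimate_ability(responses, difficulties)
# Predicted chance of success on the two questions the student never saw.
unseen = [rasch_prob(theta, b) for x, b in zip(responses, difficulties) if x is None]
```

Any error in the assumed difficulties flows straight into the ability estimate and the predictions for unseen questions, and nothing in the sketch accounts for it.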

David Spiegelhalter, professor of the public understanding of risk at Cambridge University, says this practice raises its own questions.

"They are predicted conditional on knowing the difficulties of the questions - as if these are fixed constants," he says.

But he thinks there is actually "considerable uncertainty" about this.

Furthermore, a question that is easy for children brought up in one culture may not be as easy for those brought up in another, Spiegelhalter says. "Assuming the difficulty is the same for all students around the whole world" is a mistake, he argues.
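A toy calculation shows why a shared difficulty matters. In the hypothetical sketch below, students in two countries have identical true ability, but one question is harder in country B, perhaps because of translation. Scoring both countries with a single set of difficulties, as the Rasch model assumes, makes country B look weaker than it is. To keep it simple, the sketch feeds in each country's expected score rather than simulated answers; the function names and all numbers are invented for illustration.

```python
import math


def rasch_prob(theta, b):
    """Rasch probability of a correct answer."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def ability_from_score(score, difficulties, lo=-10.0, hi=10.0, tol=1e-10):
    """Find theta whose expected score equals `score` (bisection).

    With known difficulties this is the Rasch maximum-likelihood
    estimate; the expected score always rises with theta.
    """
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if sum(rasch_prob(mid, b) for b in difficulties) < score:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


# Hypothetical difficulties the model assumes hold in every country.
assumed = [-1.0, 0.0, 0.5, 1.0]
# Hypothetically, question 3 is really harder in country B
# (say, because of how it was translated).
true_in_b = [-1.0, 0.0, 1.5, 1.0]

true_theta = 0.3  # students in A and B have identical true ability
expected_a = sum(rasch_prob(true_theta, b) for b in assumed)
expected_b = sum(rasch_prob(true_theta, b) for b in true_in_b)

# Both countries are scored with the shared, assumed difficulties.
estimate_a = ability_from_score(expected_a, assumed)  # recovers about 0.3
estimate_b = ability_from_score(expected_b, assumed)  # pulled downward
```

A single question behaving differently is enough to shift country B's estimate, even though nothing about its students has changed.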

So when you see the league table of countries, the first thing to understand is that each country has been ranked according to an estimate of national performance.

Spiegelhalter is not the only academic to find fault with the Pisa tests.

"I don't think it's reliable at all," says Svend Kreiner, a statistician from the University of Copenhagen in Denmark.

He has been looking at the reading test, in particular, and he too casts doubt on the idea that a question in Danish for a Danish child is always as hard as a question in Chinese for a Chinese child. Language differences and cultural differences, he says, can both influence the difficulty level.

"I'm not actually able to find two questions in Pisa's test that function in exactly the same way in different countries," Kreiner says.

When Svend Kreiner first made these criticisms in 2011, the OECD's deputy director for education, Andreas Schleicher, defended the Pisa tests to the BBC.

He argued that although it is possible to find a task on which Denmark does significantly better than England, and another on which it does worse, the tests were still a valid way to compare performance.

"The model is always an approximation of reality," he said.

"The question is does the model fit the reality such that there is no distortion of the results."

He does say that people shouldn't pay too much attention to the precise country rankings, because of the margin of error in the calculations. He would not attach too much importance, he says, to whether a country was ranked 27th or 28th.

But for David Spiegelhalter this does not go far enough.

"Pisa does present the uncertainty in the scores and ranks - for example the UK rank in the 65 countries is said to be between 23 and 31," he writes in a recent blog post.

"But I believe that the imputation of plausible values, based on an over-simplistic model and assuming the 'difficulties' are fixed, known quantities, will underestimate, to an unknown extent, the appropriate uncertainty in the scores and rankings."

It's unwise for countries to base education policy on their Pisa results, he says, as Germany, Norway and Denmark did after doing badly in 2001. In his view, doing so underestimates the "random error associated with the Pisa rankings".
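How much random error does a rank carry? A rough simulation gives a feel for it. The sketch below is not the OECD's method: it assumes each country's score is normally distributed around a hypothetical mean with a hypothetical standard error, re-ranks the countries thousands of times, and reads off an approximate 95% range for one country's rank. The function `rank_interval` and its `inflate` parameter are illustrative assumptions; `inflate` scales up every standard error to mimic uncertainty a model might leave out, which is Spiegelhalter's concern about the published ranges.

```python
import random


def rank_interval(scores, ses, target, n_sims=2000, inflate=1.0, seed=1):
    """Approximate 95% interval for one country's rank (1 = best).

    scores, ses: hypothetical mean score and standard error per country.
    inflate: multiplies every standard error to mimic extra uncertainty.
    """
    rng = random.Random(seed)
    ranks = []
    for _ in range(n_sims):
        # Draw a plausible score for every country, then re-rank.
        draws = {c: rng.gauss(scores[c], inflate * ses[c]) for c in scores}
        ordered = sorted(draws, key=draws.get, reverse=True)
        ranks.append(ordered.index(target) + 1)
    ranks.sort()
    # Approximate 2.5th and 97.5th percentiles of the simulated ranks.
    return ranks[int(0.025 * n_sims)], ranks[int(0.975 * n_sims) - 1]


# Thirty hypothetical countries, 2 points apart, each with standard error 2.5.
scores = {f"Country {i + 1}": 500 - 2 * i for i in range(30)}
ses = {c: 2.5 for c in scores}

base = rank_interval(scores, ses, "Country 16")
wider = rank_interval(scores, ses, "Country 16", inflate=2.0)
```

With closely bunched made-up scores like these, a country's rank already spans several places, and doubling the standard errors widens the range further, which is why understated uncertainty matters for a league table.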

And when changes in education policy are followed by a higher Pisa ranking, he cautions against inferring a causal connection.

"While, with hindsight, any pundit can construct a reason why a football team lost a match, it's not so easy to say what will make them win the next one," he writes in the blog post.

Another fundamental question is how to weigh educational attainment against well-being.

South Korea might have come near the top of the educational rankings, but it comes bottom in the rankings of happiness at school, Spiegelhalter notes - and Finland is only just above Korea.

By contrast, he points out that the UK fares "rather well" on these three statements:

- "I am happy at school"
- "I am satisfied with my school"
- "I enjoy getting good grades"

Spiegelhalter does think there is merit to examining children around the world.

Broad lessons can be learned, he says; it's just that at present too much attention is often paid to the precise numbers.

Additional reporting by Charlotte McDonald and Hannah Barnes
