'Eyes of a machine': How to classify Planet Earth

Senegal: A land cover map classifies the different types of surface across the planet

We are a force of Nature. Humans have reshaped the surface of the Earth at their whim.

Changes that used to occur naturally over hundreds, even thousands of years, can now turn over in a matter of weeks.

One of the ways we've tried to keep track of this relentless recasting of our planet is through the land cover map.

Researchers will take aerial photos or satellite images and categorise the scene below. Where are the grasslands and forests; the roads and buildings; what is water and what is snow or ice?

Such maps tell us where the resources are and help us to manage them. They aid urban planning, assess crop yields, analyse flood risks, and track impacts on biodiversity - the list is endless.

The challenge is corralling the flood of new data that threatens to make any land cover map out of date the moment it's produced.

It's why researchers are increasingly reaching for artificial intelligence (AI) tools.

Esri has made the 2020 Sentinel-2 map open to all. Anyone can play

Take, for example, this week's Living Atlas release from US company Esri, the leading producer of geographic information system (GIS) software.

Esri has produced a global land cover map for 2020 made from the pictures acquired by the European Union's Sentinel-2 satellite constellation.

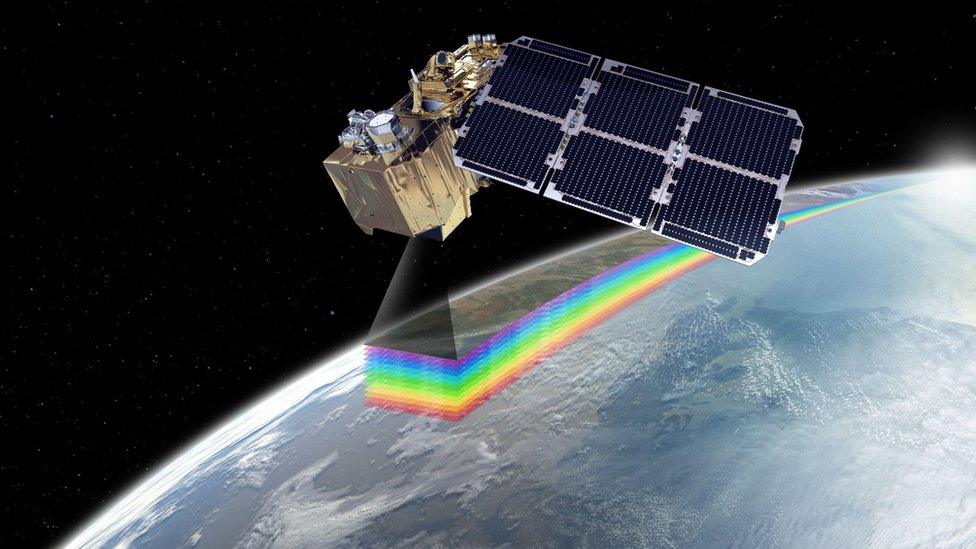

This is a pair of spacecraft in orbit that continuously photograph Earth's surface at a resolution of 10m (the size of each pixel in an image). Terabytes of data are coming down every day.

An army of researchers would struggle to fully characterise the contents of all those pixels, but a machine can do it - and fast.

"In a typical workflow, a 2020 land cover map would probably not come out until middle or late this year, because it takes that much processing time, it takes that much checking and validation work to go on," explained Sean Breyer, who manages Esri's Living Atlas of the World programme.

"But we've come up with a process - with our partners Impact Observatory - that uses an AI approach. The entire runtime to compute the entire planet's land cover was under a week. That brings in a whole new dimension to land cover mapping that says we could potentially do land cover mapping on a weekly basis or even a daily basis for targeted areas," he told BBC News.

Artwork: There are two Sentinel-2 satellites in orbit managed by the European Space Agency

Tech firm Impact Observatory developed its AI land classification model using a training dataset of five billion human-labelled image pixels. This model was then given the Sentinel-2 2020 scene collection to classify, processing over 400,000 Earth observations to produce the final map.
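
The train-then-classify idea can be shown with a toy sketch. This is not Impact Observatory's model, which the article does not describe in detail. It swaps in a much simpler technique, a nearest-centroid classifier, and every pixel value and class name below is invented for illustration. Each hypothetical pixel carries four reflectance values (red, green, blue, near-infrared) and a human-supplied label.

```python
from collections import defaultdict
from math import dist

# Hypothetical human-labelled pixels: (red, green, blue, near-infrared)
# reflectance on a 0-1 scale. All values are made up for this sketch.
LABELLED_PIXELS = [
    ((0.05, 0.08, 0.12, 0.02), "water"),
    ((0.04, 0.07, 0.10, 0.03), "water"),
    ((0.06, 0.12, 0.05, 0.50), "trees"),
    ((0.05, 0.10, 0.04, 0.45), "trees"),
    ((0.30, 0.28, 0.26, 0.32), "built area"),
    ((0.33, 0.30, 0.29, 0.35), "built area"),
    ((0.85, 0.88, 0.90, 0.80), "snow/ice"),
    ((0.90, 0.92, 0.93, 0.85), "snow/ice"),
]


def train(labelled):
    """Average the band values of each class to get one centroid per class."""
    grouped = defaultdict(list)
    for bands, label in labelled:
        grouped[label].append(bands)
    return {
        label: tuple(sum(values) / len(values) for values in zip(*pixels))
        for label, pixels in grouped.items()
    }


def classify(bands, centroids):
    """Return the class whose centroid is closest to the pixel's band values."""
    return min(centroids, key=lambda label: dist(bands, centroids[label]))


centroids = train(LABELLED_PIXELS)

# Two unseen pixels from "another location".
print(classify((0.88, 0.90, 0.91, 0.82), centroids))  # snow/ice
print(classify((0.05, 0.11, 0.05, 0.48), centroids))  # trees
```

A sketch like this judges each pixel in isolation from its band values alone. A production model would also need far more labelled examples and some way of taking neighbouring pixels into account.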

"So we had experts labelling these images, and then much like the way a child learns, we're feeding information to the model," said Dr Caitlin Kontgis, the head of science and machine learning at Impact Observatory.

"With more iterations and more information, the model learns these patterns. So if it sees ice in one location, it can then find ice in another location.

"The data-set we used to train the model is incredibly novel and allows us to look not only at spectral features - like the colours in a satellite image - but also at the spatial context. And in doing so, we were able to train this model up and then run it over the globe to get this highest resolution map available in less than a week."

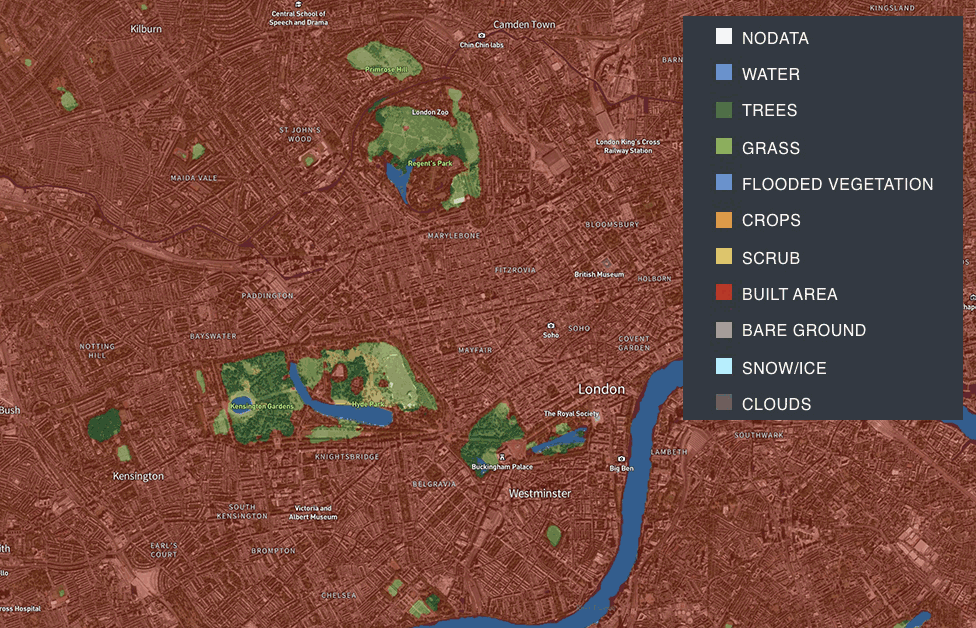

Central London's famous parks: Green islands surrounded by concrete and steel

The Living Atlas Sentinel-2 2020 Land Cover Map, produced with the assistance of Microsoft, is open source. Anyone can play with it. Take a look at your home area and see how well you think the model did in gauging the different surface types.

"If you look at some of the 30m-resolution products out there, they miss lots of the small town areas - the little shires, the collections of four or five buildings as part of a farm unit. But at 10m resolution, I think we're picking most of those up," said Sean Breyer.

There is a rapidly increasing population of satellites above our heads. Not only are they getting a sharper view of things (sub-1m resolution is the new battleground), but the type of information they return is becoming richer. More wavelengths, more colours; and the same scenes are being observed several times a day, looking for changes.
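
What those resolution figures mean in practice comes down to simple arithmetic. The sketch below compares 30m, 10m and 1m pixels (1m standing in for "sub-1m"). The 40m by 30m farmyard is a hypothetical example, and the count assumes its edges line up with the pixel grid, which real scenes rarely do.

```python
from math import ceil


def pixel_area_m2(resolution_m):
    """Ground area covered by one square pixel, in square metres."""
    return resolution_m ** 2


def pixels_per_km2(resolution_m):
    """How many pixels are needed to tile one square kilometre."""
    return 1_000_000 / pixel_area_m2(resolution_m)


def pixels_to_cover(width_m, height_m, resolution_m):
    """Pixels touched by a rectangle aligned to the pixel grid (an assumption)."""
    return ceil(width_m / resolution_m) * ceil(height_m / resolution_m)


for res in (30, 10, 1):
    print(
        f"{res:>2} m pixels: {pixel_area_m2(res):>4} m2 each, "
        f"{pixels_per_km2(res):>11,.0f} per km2, "
        f"{pixels_to_cover(40, 30, res):>5} across a 40 m x 30 m farmyard"
    )
```

At 30m the hypothetical farmyard falls across just two pixels, each mostly filled with whatever surrounds it. At 10m it spans 12, which gives a classifier far more to work with. The price is data volume: a square kilometre takes nine times as many 10m pixels as 30m ones.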

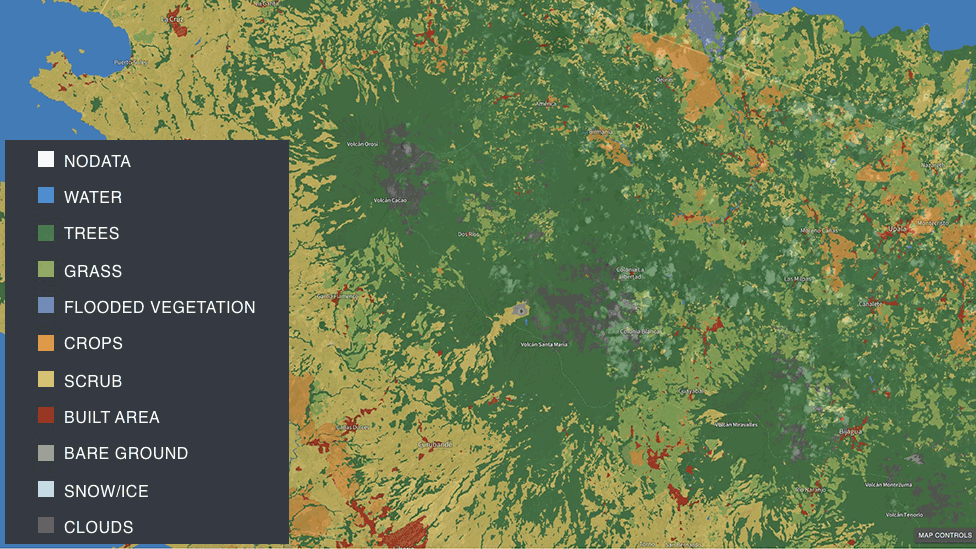

Whether it's tracking deforestation or sensing gas plumes from volcanoes - this knowledge bounty just gets bigger and bigger. But it means nothing unless we can fully use the data. Machine learning solutions are the only way we'll get near to staying on top of it all.

Costa Rica: Even with a year of data some Sentinel scenes are frustrated by cloud