'My search for the boy in a child abuse video'


One day, just after I had dropped my son off at school, I was sent a horrific video on WhatsApp. It made me question how images and videos of child sex abuse come to be made, and how they can be openly circulated on social media. And I wanted one answer above all - what happened to the boy in the video?

It may sound strange, but the woman who sent me the video was a fellow mum at the school gates. A group of us had set up a WhatsApp group to discuss term dates, uniforms, illnesses.

Then one morning, out of the blue, one of these mums sent a video to the group, with two crying-face emojis underneath it.

It was just a black box, no thumbnail, and we all pressed play without thinking. Maybe it would be a meme or a news story. Maybe one of the "stranger danger" videos some of the mums had started to share.

The video starts with a shot of a man and a baby, about 18 months old, sitting on a sofa. The baby smiles at the man.

I can't describe the rest.

If I tell you what I saw in the 10 seconds it took to grasp what was happening, and press stop, you'll have the image in your head too. And you don't want it. It's a video of child sex abuse. It's nine minutes long.

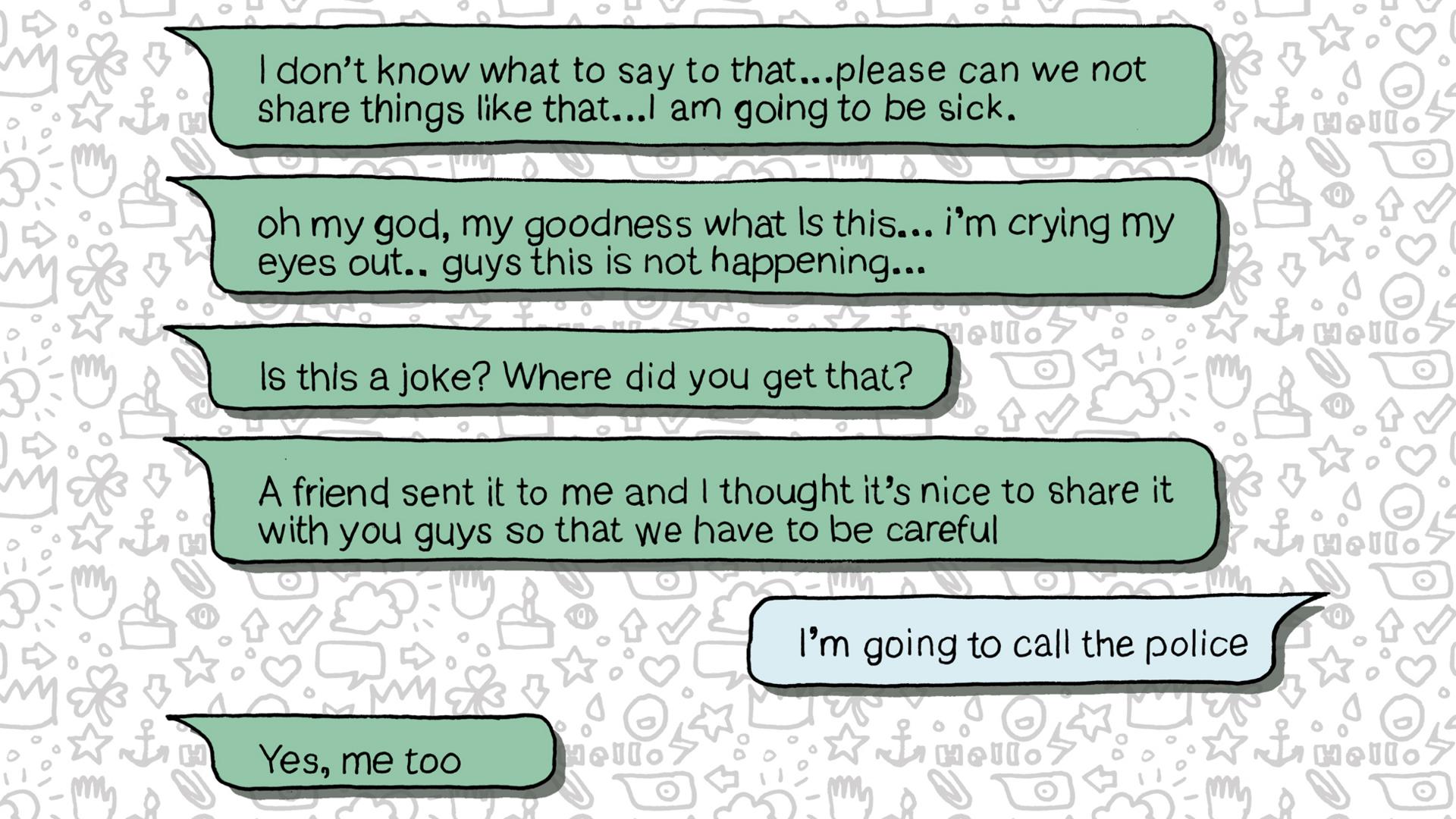

I screamed, and threw my phone across the room. It was pinging with messages from distraught members of the group.

I took my phone to the police station. I told them what had happened. I told them I believed the woman had sent it to us as a warning, and that I hoped they would investigate where the video came from. Was it new, or one they'd already come across? Was this little boy still in danger? Could this evidence help save him, or catch the abuser?

The police had my phone for two weeks. I found out the next day that they had arrested the woman who sent it and visited other members of the group. And then I didn't hear anything else about it.

But one question stayed with me. What happened to the boy in the video? And so, a few months later, once I could read my own kids a bedtime story without thinking of him and life had got back to normal, I began to look for answers.

I started by trying to speak to the police officer investigating the video on my phone. But every time I called Wembley CID to speak to him, he'd just gone out.

He didn't want anything to do with me.

I checked with Alan Collins, a lawyer who specialises in child sex abuse, to see if any of the things I might normally do to track down people would work. Could I, for example, send former police officers a copy of the video to see if they recognised it?

"You could be looking at a prison sentence of 10 years," he told me. The same goes for taking a still and sending that. Just possessing an image like this on my phone could land me in jail.

So I called a friend of a friend who used to work for the police. He told me Wembley CID would have sent my phone off to one of the digital forensics labs spread across the city. The labs list all the illegal content, and when it's child sex abuse they grade it: Category A for the most serious, Cat B, Cat C. This WhatsApp video was Cat A.

Next, the file goes to victim identification and my case was passed to the Metropolitan Police's Online Child Sexual Exploitation and Abuse Command. An officer there, Det Sgt Lindsay Dick, agreed to talk to me, but he didn't want to say much about the techniques used in case it helped offenders work out how to evade capture.

An estimated 100,000 men in the UK regularly view images of child sex abuse

Police arrest between 400 and 450 people a month, men mostly, for viewing or downloading these files

Every month, WhatsApp takes down 250,000 accounts suspected of spreading child sex abuse material, identified from the names of groups and profile pictures

Report child sexual abuse safely and anonymously to the Internet Watch Foundation

He did tell me about one case, where an officer had got hold of a phone that had images of a boy being abused on it, along with images of the same boy not being abused. In one, he's standing at a bus stop in school uniform. An officer recognised the sign at the bus stop as a Mersey Transport one, and put a call in to the Merseyside team. They recognised the school uniform. The boy was identified, his parents arrested, and social services took over. Victim-identification officers all over the world rely on little clues like this.

Lindsay Dick wouldn't discuss the details of what I'd been sent, even though he had investigated the case. Then, when I asked him about a suggestion from an editor to take a still from the video of the perpetrator's face, to help identify him, I started to feel some heat.

"Do you still have a copy of that video?" he asked me, sternly. "No," I replied. But it was still sitting somewhere on WhatsApp's server, and because I was still a member of the group, it was still showing on my phone. Even though I'd done nothing wrong, I realised how seriously the police took this kind of thing.

This hit home late last year when a senior Metropolitan Police officer, Supt Novlett Robyn Williams, was given 200 hours of unpaid community work and threatened with losing her job for failing to report a video of child sex abuse her sister had sent her on WhatsApp. (She is now appealing against the conviction.)

The Metropolitan Police refused to help me any further in my search for the boy in the video. At one point they even told officers in another part of the country, incorrectly, that I'd been cautioned for sharing the video.

I found out later from the woman who sent the video to me that she had been given three years on the sex offenders' register. But the investigating officers at Wembley CID took the case no further - they didn't arrest the friend who had sent it to her, and they didn't even try to find out who had sent it to that friend. Further up that chain of people sharing the video there must be some dangerous people, perhaps even an abuser. But nothing was done to follow the trail.

The Metropolitan Police says: "The scale of child abuse and sexual exploitation offending online has grown in recent years. This increased demand on police, coupled with the need to keep up with advancement of technology and adapt our methods to detect and identify offenders, means it is a challenging area for the Met and police forces nationally. However, we remain committed to bringing those who commit child abuse offences online to justice, and safeguarding victims and young people at risk.

"We encourage anyone concerned about a child at risk of abuse or a possible victim, to contact police immediately. Anyone who receives an unsolicited message which depicts child abuse should report it to police immediately so action can be taken. Images of this nature should not be shared under any circumstances."

I needed someone who wasn't involved with the case to give me some more clues about where this file I'd been sent might have come from. So I started searching, and I came across news articles about a team in Queensland, Australia, with a reputation for infiltrating child abuse video-sharing sites.

Their head of victim identification, former Greater Manchester Police detective Paul Griffiths, told me the file I'd been sent had probably started life on one of these sites.

"What tends to happen is that when a file gets produced like that, it generally stays under cover, under wraps, circulating amongst a fairly small, tight network. Very often people who would know that they need to keep it safe and not distribute it widely," he said.

These networks of paedophiles use the dark web, a part of the internet that isn't indexed by search engines such as Google. They reach the sites through Tor, short for The Onion Router, which bounces their connection through several relay servers dotted around the globe. The sites see only the address of the last relay, not the user's real IP address, which makes their location extremely hard to trace.

Members of these dark web sites are like sick stamp collectors - they post thumbnails of what they have on dedicated online forums, and look to complete series, usually of a particular child.

Some of them are "producers" - they abuse the children, or film them being abused.

A couple of years ago Paul Griffiths' team was watching one site called Child's Play. They had intelligence that two of the site's leaders were meeting up in the US. Officers intercepted them, arrested them, and got their passwords.

Now they could see everything - each and every video - and they could get to work finding children and perpetrators. They made hundreds of arrests worldwide, and 200 children have been saved so far.

"It's Sherlock Holmes stuff, it's following little clues and seeing what you can piece together to try and find a needle in a haystack," says Griffiths.

The big worry now is live-streaming, where adults can pay to watch children being abused in real time. It's even harder to detect, because no file containing clues circulates, and the platforms are all encrypted. Just as the police and technology get better at finding victims in stills or videos, another threat emerges.

"There's a famous story and it often gets told in relation to this area of crime, in relation to the young girl walking on the beach and there's starfish all over the beach and she's picking the starfish up and putting them back into the sea and a guy says to her, 'Little girl, what are you doing? You're never gonna be able to save all of these starfish.' And she says, 'No, but I'll save that one.' And that's really what we're doing," says Griffiths.

"You know, we're saving the ones we can save. And if some magical solution appears somewhere in the future that's going to save all of them, that's going to stop this happening, then that'll be wonderful. But in the meantime, we can't just sit back and ignore what we know is happening."


Paul Griffiths is part of a small network of people who travel the globe for meetings and conferences on what to do about the huge numbers of videos and images circulating online.

He told me to contact Maggie Brennan, a lecturer in clinical forensic psychology at the University of Plymouth, who has been studying child-sex-abuse material for years. Between 2016 and 2018 she combed through the child-abuse images in a database run by Interpol, to build up a profile of victims.

She found a chilling pattern suggesting that the age of the boy in the video I saw was not that unusual.

"Concerningly, there is a substantive, small, but important proportion of those images that do depict infants and toddlers. And we found a significant result in terms of the association between very extreme forms of sexual violence and very young children."

Like the boy in the video I was sent, most children on the database are white - most likely a reflection of the fact that the police forces contributing to it are from majority-white countries.

There's constant pressure, Brennan says, to quantify the numbers of images or videos that are in existence, and the numbers of victims who are being sexually exploited. But it's impossible. Databases only hold the images that have been found, through police raids or reports. Who knows how many are circulating out there?

Paul Griffiths says it only takes one person to bring a video out of the depths of the dark web and unleash it on the general population.

"Sooner or later it comes into the possession of someone who either doesn't know how to keep it safe and hidden, or doesn't really care. And they spread it wider. It can take a few hours, and it's all over the internet."

I spoke to one offender who served seven months in prison for viewing child abuse images. He had been offered the files on Skype during an adult online sexual meet-up. He'd opened the first file, seen it was of a child - and carried on opening all 20. Then he tried to share them with someone else. Eventually, the man who sent him the files sent them to someone who told the police. But it's a telling example of how easily files like the one I was sent spread, from the depths of the dark web, on to platforms like Skype, and then to people's phones.

Despite the lack of action taken on my case, the UK policing response to child sex abuse images is one of the most robust in the world.

The Child Abuse Image Database (CAID) has seen huge investment over the last five years. When detectives receive the phone or laptop of a suspect, they can run images on it through state-of-the-art software that checks whether images are new, or already known to police. All police forces are linked up, and the database talks to others around the world.

In the 1990s the Home Office undertook a study of the proliferation of indecent imagery of children. There were fewer than 10,000 images in circulation then. Now there are almost 14 million images on the UK database.

The levels of depravity in videos and images are getting worse, Chief Constable Simon Bailey tells me. He's been the National Police Chiefs' Council's lead for child protection and abuse investigations for the last five years.

I am expecting a forbidding character when I go to interview him at his Norfolk HQ. What I find is a man at the end of his tether.

"It just keeps growing, and growing, and growing," he says. "And there is an element of, 'These figures are just so huge that just can't be right.' Well trust me, it is right. And if I have one really significant regret around my leadership and our response to this it's that we have struggled to land with the public the true scale of what we are dealing with, the horrors of what we are dealing with. Most people, I would like to think, would be mortified that this type of abuse is taking place."

Last year, Simon Bailey called for a boycott of tech companies, such as Google and Facebook, until they invest in technology to filter and block these images and videos. The public, including myself, took little notice.

Last year, the robots Facebook deploys to sift through its Messenger service reported 12 million posts containing images of child abuse to the National Centre for Missing and Exploited Children, which runs the US database.

So child protection campaigners are horrified at Facebook's decision to start encrypting Messenger over the coming months, because it will mean the platform effectively goes "dark" and abusers will be able to share material with impunity.

It will become more like WhatsApp, where end-to-end encryption means no-one except the sender and receiver knows anything about what's in a message.

WhatsApp's encryption works because your phone and the phone of the person you're messaging generate the encryption keys themselves. When the message leaves your phone, it does travel through WhatsApp's servers - but they don't have the keys to decrypt it. The only way robots or artificial intelligence could scan the message would be if it were decrypted after it left your phone. But that would give prying regimes, law enforcement or the tech firms themselves a window into our messages. WhatsApp thinks it would lose customers as a result.

The solution is to do the scanning on our phones.

One way this could be done would be for everyone to download software containing a list of the unique codes, or "hashes", of all known child abuse images and videos, so that each phone could check files against it. But this would still raise privacy concerns. The tech firms could fiddle with the list to include images that aren't child abuse - censorship, in other words.

So it would be better if phones could run an algorithm to generate the codes themselves, completely independently of any government or tech company. This is the tricky bit, though. No one has invented that algorithm yet.

One expert I spoke to put it like this: "I wouldn't call it impossible, I'd call it unknown how to do it today." It would take huge amounts of research and development - but it could probably be done.

The failure by Big Tech to invest in this angers Simon Bailey.

"They hold the key to so much of this. Their duty of care I think to children, they have completely absolved themselves of that.

"The reaction is always, 'Well we're doing our best.' No you're not. You need to do more. You're making billions of pounds in profit. Invest."

There are high hopes for the UK government's Online Harms White Paper published in April 2019 - a radical proposal for legislation that would see tech firms held responsible for the harm they do. It proposes a new regulator and campaigners hope the resulting bill will include mandatory reporting of any child sex abuse. The results of a public consultation are due soon.

At the moment, the tech firms choose what to tell us. No-one knows, for example, how much Facebook or WhatsApp spend on child protection. Facebook says it has the best artificial intelligence on the market trying to block and filter messages. But the company won't reveal how it works, or how much it costs.

John Carr, who advises governments on child safety online, says this lack of transparency has to end.

"I work with people in these companies all the time, they agree with me on most stuff, they care passionately about protecting children but they don't make the decisions. It's their bosses, typically in California, that decide what happens. People high up in those companies need to feel the heat, they need to feel that their feet are being brought to the fire."

Of course, I ask to speak to WhatsApp. They say no.

One bright summer day, I finally get a breakthrough in my search for the boy in the video. Simon Bailey's assistant puts me in touch with a senior police officer who opens some doors, and I get a call.

I'm told the boy is alive. He's one of the lucky minority who have been identified and rescued.

The video I was sent is an old one, from America. There are already three versions of it on the UK's CAID database - mine makes it four. Police can't tell me where he is, or what his name is. But they do tell me his abuser is serving a long jail term. It's the news I was hoping for.

And I'm happy to leave it at that. There's one word that has stuck in my mind over the months that I've tried to find out more about this case - "revictimisation". Each time anybody watches or shares a video of a child being abused, that child is revictimised. And as I get closer to finding the boy, I realise I could end up making a phone call to tell him, out of the blue, that I've seen the video of him. He'd be reminded of the horror. He'd be humiliated. I should just leave him in peace.

But I've learned about a campaign group in the US made up of victims - people filmed by abusers - who want to be found, and heard.

James Marsh, a lawyer who has been representing and campaigning for victims of child sex abuse for 14 years, recently spearheaded a change to the law in America that gives victims a right to a minimum of $3,000 compensation from everyone convicted of viewing or sharing a video of their abuse.

It's called the Amy, Vicky, and Andy Child Pornography Victim Assistance Act of 2018, after the three young victims who backed it by writing impact statements for the courts.

"It's only been really very recently that victims have become more empowered to reclaim that space, really trying to assert that this is not a victimless crime, that these are not harmless pictures, and that they have voices that deserve to be heard," he tells me.

Voluntary principles

A set of "voluntary principles" for the tech industry, designed to counter online child sexual exploitation and abuse, was published this week by the governments of the UK, the US, Australia, Canada and New Zealand, after consultations with six leading tech companies.

An accompanying letter acknowledged the industry's efforts, but said there was "much more to be done to strengthen existing efforts". It added that the initiative was intended to "drive collective action".

The principles include preventing dissemination of child sex abuse material, targeting online grooming, preventing search results from surfacing child sexual abuse, and sharing data about the evolving threat.

Recently a seasoned journalist called Marsh for details about what happened to one of his clients. She was expecting to hear that the abuse involved naked photos. When he told her of the reality of the content of these films - the rape of a small child - she was horrified, and didn't publish the details.

"I think as a journalist you're turning off your readership by including these graphic descriptions. And yet, how else can you accurately report what's happening to bring about meaningful change?"

He says his clients, as they get older, have become frustrated by this squeamishness. "This is our lived experience," they say. "Face up to it."

I ask him if he thinks the young man, Andy, from the Amy, Vicky, and Andy Act, would be up for talking to me. He agrees to connect us on Facebook Messenger.

And with that, I had found a boy in a video. One who had suffered the same terrible fate as the boy in the video I was sent.

Whatever I might have wanted to ask that boy, I could ask Andy. Did he have somewhere safe to sleep at night? Had he managed to stay safe?

Andy has a warm voice. He's eager to talk, candid and hugely likeable. It's strange, but our conversation is not a miserable one.

He tells me he is in his early 20s. He's been out of jail for six months, the longest stretch he's managed since he was a child. He was locked up mainly for fighting, and for robbery. When he was younger he was into drugs - meth, heroin and weed. He's doing well at staying off them now. And he has two children, who he adores.

But he lives in a kind of prison.

"I'm kind of a boring person. I don't really go out much, I stay at home, I kind of live just with my kids, and that's about it."

Andy's abuse continued until he was 13, and he lives in constant fear that someone might recognise him from one of the videos his abuser made of him. If someone so much as looks at him in the street, he worries.

His story starts with his parents' divorce. His mother wanted a male figure in his life, and so when Andy was around seven years old she sent him to a youth programme. The "mentor" allocated by the organisation groomed Andy and his family for a few months and took Andy on trips to Las Vegas.

Andy didn't tell anyone when the sexual abuse started, because he didn't want to upset his mother.

"I didn't know how my mom would act about it. I didn't want her to be embarrassed because something had happened to me. I didn't know if it was normal. That's kind of how he made it seem."

How to get help

If you've been affected by the issues touched on in this story, you can find sources of support on the BBC Action Line

The Lucy Faithfull Foundation works to prevent and tackle child sexual abuse and exploitation

When Andy grew old enough he confronted his abuser, who soon afterwards went on the run to Mexico. Then, one day, the FBI turned up at Andy's high school and knocked on his classroom door. The abuser had got sloppy - he'd sent a video to someone he didn't know, who'd reported him.

"And then they tell me that there's hundreds of thousands of videos and pictures of me out on the web."

Until that moment, Andy had no idea his abuse had been shared at all, let alone with thousands of people. But despite this shocking news, and the difficulty of telling his mother the truth, Andy agreed to help the FBI lure his abuser back across the border.

"We coaxed him back with a birthday party of mine.

"It was a whole three months, all the way up until my birthday. I talked with him on the phone, like nothing was wrong. He sent me a few packages."

The FBI officers set up the sting. They showed Andy the route they thought his abuser would take and the location of the planned arrest.

"It was really cool. They caught him a couple blocks from my grandmother's house, pulled him over and arrested him."

It was one of the best moments of Andy's life.

Like other identified victims in the US, Andy gets a letter every time someone is convicted of viewing or sharing a video of him. He has thousands of them.

He's doing his best to rebuild his life. When he can afford therapy, things are better. His lawyers are working on some big cases, which should bring him more compensation. But it's tough.

"Work is really hard for me. And it's not because I'm lazy or anything. It's mainly the mentality of not being able to trust somebody. I can't let this person in. So I'm really anti-social."

Andy, like all victims, was incredibly unlucky to fall into the hands of an abuser. Had he not, he thinks he would by now have been the CEO of his father's business.

He is finding purpose and solace in his involvement with Amy, Vicky and Andy's law. The three young campaigners hope to meet up in person soon. In the future, Andy wants to give up his anonymity and go into schools to teach children how to stay safe, and how to tell someone if they are not. He feels an obligation to do it, he says.

"You know, I want people to know my story."

I will never get to talk to the other boy in the video. I hope he's OK. I hope he can rebuild his life - however slowly and imperfectly - like Andy.

I've been able to give you a happy ending. Both Andy and the little boy in the video I was sent have been identified and their abusers are in prison. But this is wildly misleading.

Most of the boys and girls in videos won't be rescued. And without radical reform of the way we manage technology and privacy, videos like the one I saw will keep circulating, and new children will be abused to feed the demand.

Illustrations by Hello Emma
