Leap Motion: Touchless tech wants to take control


Leap Motion's gesture-controlled device connects to a computer via a USB cable. The company is far from being the first firm to try to revolutionise how we control our PCs.

There are already many other PC-compatible gesture sensors, including a version of Microsoft's Kinect for Windows. However, their size may have limited their appeal.

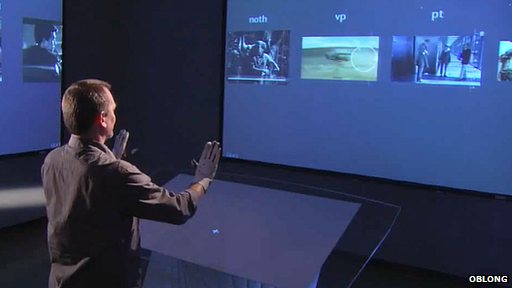

Oblong's G-Speak platform is a more expensive gesture-based system that can be used with gloves or bare-handed. It inspired the tech used in the film Minority Report.

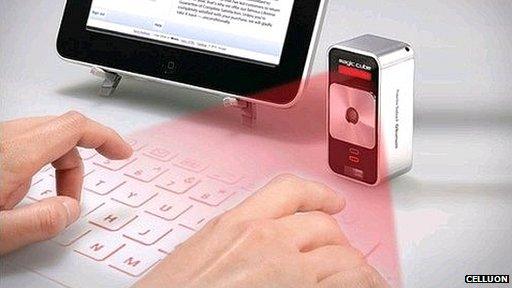

Celluon's Magic Cube lets you project a full-size laser keyboard on to any flat surface. The Bluetooth gadget also lets you use your fingertip as a mouse to drag, point and click.

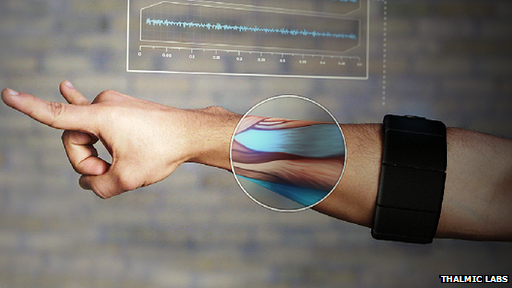

The Myo armband includes a motion tracker as well as a sensor that detects muscles' electrical activity and gestures. It is still in development.

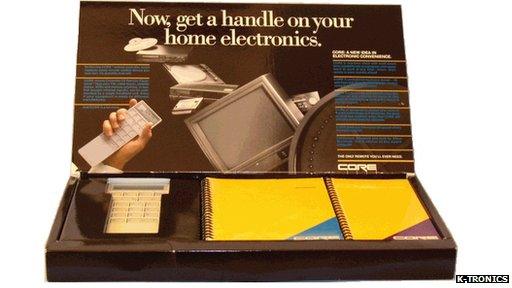

Apple co-founder Steve Wozniak created the Core UC-100 control. All 16 keys on its pad were programmable to trigger custom functions, but it proved too clunky for the masses.

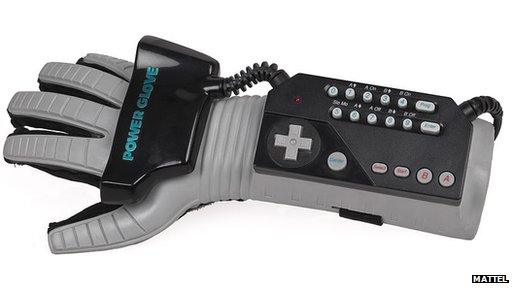

Mattel launched the Power Glove in 1989. It was designed to work with a Nintendo games console to simulate hand movements but ultimately failed to catch on.

Images of this saucer-like spin on a keyboard and trackball appeared in 2009. It never made it beyond the concept stage, so we'll never know how hard it would be to use.

Tobii's gaze-controlled sensors allow users to control computers with their eyes. The look-and-do tech was originally developed for people with disabilities.

Zspace is developing a system which combines special glasses and a stylus to allow users to work in what it describes as a "3D holographic-like environment".

The keyboard and mouse have long been the main bridge between humans and their computers.

More recently we've seen the rise of the touchscreen. But other attempts at re-imagining controls have proved vexing.

"It's one of the hardest problems in modern computer science," Michael Buckwald, chief executive and co-founder of Leap Motion, told the BBC.

But after years of development and $45m (£29m) in venture funding, his San Francisco-based start-up has come up with what it claims is the "most natural user interface possible."

It's a 3D-gesture sensing controller that allows touch-free computer interaction.

Using only subtle movements of fingers and hands within a short distance of the device, virtual pointing, swiping, zooming, and painting become possible. First deliveries of the 3in (7.6cm)-long gadget begin this week.

"We're trying to do things like mould, grab, sculpt, draw, push," explains Mr Buckwald.

"These sorts of physical interactions require a lot of accuracy and a lot of responsiveness that past technologies just haven't had."

He adds that it's the only device in the world that accurately tracks hands and all 10 fingers at an "affordable" price point, and it's 200 times more precise than Microsoft's original Kinect.

It works by using three near-infrared LEDs (light-emitting diodes) to illuminate the owner's hands, and then employs two CMOS (complementary metal-oxide-semiconductor) image sensors to obtain a stereoscopic view of the person's actions.
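To give a feel for why two image sensors help, here is a minimal sketch of textbook stereo triangulation, in which a point that appears at slightly different horizontal positions in the two images can be placed at a distance. It is not Leap Motion's own algorithm, which the company has not described here, and the focal length, sensor spacing and pixel positions are made-up illustrative values rather than the device's specifications.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Distance to a point seen by two parallel cameras (simple pinhole model).

    focal_length_px: focal length expressed in pixels
    baseline_m:      distance between the two sensors, in metres
    disparity_px:    horizontal shift of the point between the two images, in pixels
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical numbers for illustration only (not Leap Motion specifications).
focal_length_px = 400.0          # assumed focal length
baseline_m = 0.04                # assumed 4cm gap between the two sensors
x_left, x_right = 250.0, 170.0   # assumed fingertip position in each image

depth = depth_from_disparity(focal_length_px, baseline_m, x_left - x_right)
print(f"Estimated fingertip distance: {depth:.2f} m")
```

In this simplified model, the nearer the hand is to the sensors, the larger the shift between the two images, which is one reason a stereo camera can resolve small movements at close range.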

Hundreds of thousands of pre-orders have poured in from around the world, and thousands of developers are working on applications, Mr Buckwald says.

Leap Motion is convinced it has a shot at making gesture controls part of the mainstream PC and Mac computing experience.

But some high-profile Silicon Valley leaders doubt Leap Motion will render the mouse and keyboard obsolete anytime soon.

They include Tom Preston-Werner, chief executive and founder of GitHub, a service used by developers to share code and advice.

Coders will still have a need for keyboards and computer mice for years to come, he says, adding that sticking your arm out and waving it for any length of time will be uncomfortable and tiring.

For developers who work long hours, Mr Preston-Werner says he prefers approaches like the forthcoming Myo armband, which wirelessly transmits electrical signals from nerves and muscles to computers and gadgets without being tethered to a USB port.

Other Silicon Valley programmers, like Ajay Juneja, aren't convinced Leap Motion's touch-free controller has entirely solved the human-computer interface problem either.

"It's a tool for hobbyists and game developers," says the founder of Speak With Me, a firm that develops natural-language voice-controlled software.

"What else am I going to use a gestural interface for?"

Of course, Leap Motion has lots of ideas.

The company already has its own app store, called Airspace, with 75 programs including Corel's Painter Freestyle art software, Google Earth, and other data visualisation and music composition apps. The New York Times also plans to release a gesture-controlled version of its newspaper.


Mr Buckwald says he doesn't expect a single "killer app" to emerge. Instead he predicts there will be "a bunch of killer apps for different people".

Kwindla Kramer, chief executive of Oblong Industries - which helped inspire the gesture-controlled tech in the movie Minority Report - considers Leap Motion's controller "a step forward".

His firm makes higher-end devices ranging from $10,000 to $500,000 for industry.

Leap's "accuracy and pricing" are great, he says, but he adds that the "tracking volume" - the area where the device can pick up commands - is somewhat limited.

Still, most experts believe the user interfaces of the future will accept a mash-up of different types of controls from a range of different sensors.

Meanwhile, Leap is already looking beyond the PC and says it hopes to embed its tech into smartphones, tablets, TVs, cars and even robots and fighter jets in future.
