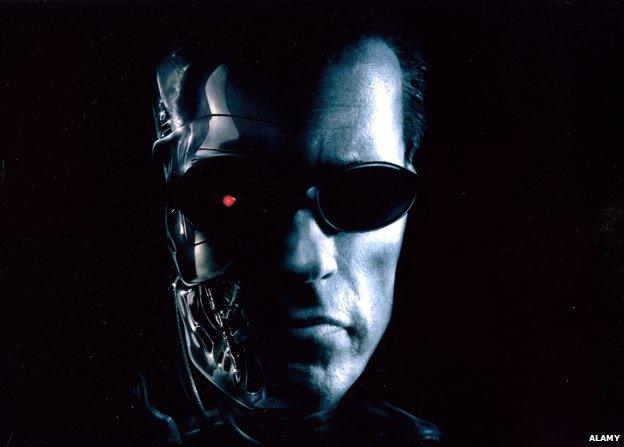

Does AI really threaten the future of the human race?

The end of the human race - that is what is in sight if we develop full artificial intelligence, according to Stephen Hawking in an interview with the BBC. But how imminent is the danger and if it is remote, do we still need to worry about the implications of ever smarter machines?

My question to Professor Hawking about artificial intelligence comes in the context of the work done by machine learning experts at the British firm Swiftkey, who have helped upgrade his communications system. So I talk to Swiftkey's co-founder and chief technical officer, Ben Medlock, a computer scientist with a Cambridge doctorate which focuses on how software can understand nuance in language.

Stephen Hawking: "Humans, who are limited by slow biological evolution, couldn't compete and would be superseded"

Ben Medlock told me that Professor Hawking's intervention should be welcomed by anyone working in artificial intelligence. "It's our responsibility to think about all of the consequences good and bad," he said. "We've had the same debate about atomic power and nanotechnology. With any powerful technology there's always the dialogue about how do you use it to deliver the most benefit and how it can be used to deliver the most harm."

He is, however, sceptical about just how far along the path to full artificial intelligence we are. "If you look at the history of AI, it has been characterised by over-optimism. The founding fathers, including Alan Turing, were overly optimistic about what we'd be able to achieve."

He points to some successes in single complex tasks, such as using machines to translate foreign languages. But he believes that replicating the processes of the human brain, which is formed by the environment in which it exists, is a far distant prospect: "We dramatically underestimate the complexity of the natural world and the human mind," he explains. "Take any speculation that full AI is imminent with a big pinch of salt."

While Medlock is not alone in thinking it's far too early to worry about artificial intelligence putting an end to us all, he and others still see ethical issues around the technology in its current state. Google, which bought the British AI firm DeepMind earlier this year, has gone as far as setting up an ethics committee to examine such issues.

DeepMind's founder Demis Hassabis told Newsnight earlier this year that he had only agreed to sell his firm to Google on the basis that his technology would never be used for military purposes. That, of course, will depend in the long term on Google's ethics committee, and there is no guarantee that the company's owners won't change their approach 50 years from now.

Prof Murray Shanahan introduces the topic of artificial intelligence

The whole question of the use of artificial intelligence in warfare has been addressed this week in a report by two Oxford academics. In a paper called Robo-Wars: The Regulation of Robotic Weapons, they call for guidelines on the use of such weapons in 21st Century warfare.

"I'm particularly concerned by situations where we remove a human being from the act of killing and war," says Dr Alex Leveringhaus, the lead author of the paper.

He says you can see artificial intelligence beginning to creep into warfare, with missiles that are not fired at a specific target: "A more sophisticated system could fly into an area and look around for targets and could engage without anyone pressing a button."

But Dr Leveringhaus, a moral philosopher rather than a computer scientist, is cautious about whether there is anything new about these dilemmas. He points out that similar ethical questions have been raised at every stage of automation, from the arrival of artillery allowing the remote killing of enemy soldiers to the removal of humans from manufacturing by mechanisation. Still, he welcomes Stephen Hawking's intervention: "We need a societal debate about AI. It's a matter of degree."

Driverless cars raise basic questions about decision-making by computers

And that debate is given added urgency by the sheer pace of technological change. This week the UK government has announced three driverless car pilot projects, and Ben Medlock of Swiftkey sees an ethical issue with autonomous vehicles. "Traditionally we have a legal system that deals with a situation where cars have human agents," he explains. "When we have driverless cars we have autonomous agents... You can imagine a scenario when a driverless car has to decide whether to protect the life of someone inside the car or someone outside."

Those kinds of dilemmas are going to emerge in all sorts of areas where smart machines now get to work with little or no human intervention. Stephen Hawking's theory about artificial intelligence making us obsolete may be a distant nightmare, but nagging questions about how much freedom we should give to intelligent gadgets are with us right now.