Google’s plan to make talk less toxic

Online comments can be aggressive

"Never read below the line" - that has become sensible advice for anyone tempted to look at online comments.

The depressingly toxic nature of internet conversations is of increasing concern to many publishers. But now Google thinks it may have an answer - using computers to moderate comments.

The search giant has developed something called Perspective, which it describes as a technology that uses machine learning to identify problematic comments. The software has been developed by Jigsaw, a division of Google with a mission to tackle online security dangers such as extremism and cyberbullying.

The system learns by seeing how thousands of online conversations have been moderated. It then scores new comments by assessing how "toxic" they are and whether similar language has led other people to leave conversations. The aim is to improve the quality of debate and make sure people aren't put off from joining in.

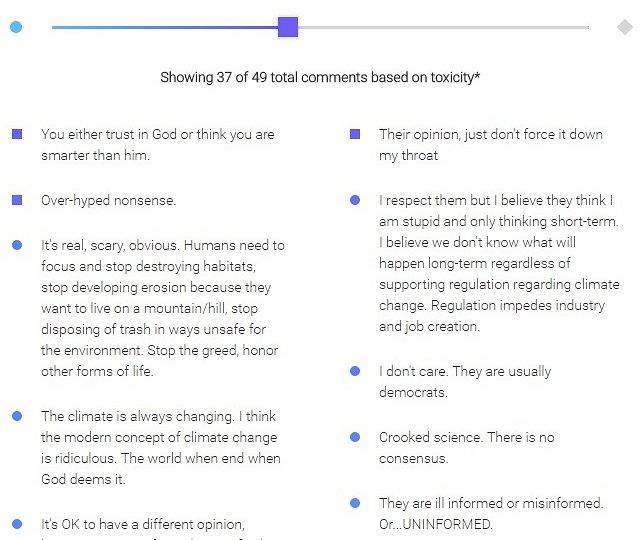

In this example the most "toxic" comments are hidden

Jared Cohen of Jigsaw explains three ways Perspective could be used: by websites to help moderate comments, by users wanting to choose the level of rudeness they see in the online conversations they take part in, and by people wanting to restrain their own behaviour.

I was intrigued by this last example. He explained that the research had uncovered the fact that many aggressive comments came from people who were usually reasonable but were having a bad day. "If you start yelling in real life you get feedback - online it's just putting something into a white box," he said.

"You could get feedback as you type. 'Hey this is 70% toxic'," he suggested.
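As a rough illustration of that idea, the sketch below turns a toxicity score into the kind of message Mr Cohen describes. It assumes a score between 0 and 1 has already been obtained from some scoring service; the function name `typing_feedback` and the `warn_at` cut-off are hypothetical and are not part of Perspective.

```python
from typing import Optional


def typing_feedback(toxicity: float, warn_at: float = 0.5) -> Optional[str]:
    """Return a warning for the person typing, or None if the draft looks fine.

    `toxicity` is assumed to be a score from 0.0 to 1.0 supplied by a
    scoring service. `warn_at` is a hypothetical cut-off chosen for
    illustration only.
    """
    if not 0.0 <= toxicity <= 1.0:
        raise ValueError("toxicity must be between 0.0 and 1.0")
    if toxicity < warn_at:
        return None
    # Express the score as a whole-number percentage for the user.
    return f"Hey, this is {round(toxicity * 100)}% toxic"


print(typing_feedback(0.7))
```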

A demo on the Jigsaw website shows how the tool might allow users to determine the level of "toxicity" in comments about global warming. Set the slider up high and you get people describing each other as "stupid" or "uneducated bumpkins". Bring it lower and you get "they are ill-informed".
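The slider can be thought of as a simple threshold on each comment's score. The sketch below is one way that might work, not a description of how the Jigsaw demo is built: the `ScoredComment` type, the `visible_comments` function and the scores attached to the sample comments are all made up for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ScoredComment:
    text: str
    toxicity: float  # assumed score from 0.0 (benign) to 1.0 (very toxic)


def visible_comments(comments: List[ScoredComment], slider: float) -> List[ScoredComment]:
    """Keep only the comments whose score is at or below the slider setting."""
    if not 0.0 <= slider <= 1.0:
        raise ValueError("slider must be between 0.0 and 1.0")
    return [c for c in comments if c.toxicity <= slider]


# Illustrative scores only; these are not real Perspective output.
comments = [
    ScoredComment("They are ill-informed.", 0.30),
    ScoredComment("Uneducated bumpkins.", 0.85),
    ScoredComment("You are all stupid.", 0.90),
]

for comment in visible_comments(comments, slider=0.5):
    print(comment.text)
```

With the slider at 0.5 only the mildest of the three sample comments is shown; moving it to 1.0 would show all of them.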

Perspective has already been tried out by the New York Times, which used it to help moderate comments. The newspaper currently finds this process so labour-intensive that only 2% of articles are opened up for comment. The new tool helped the moderators work at twice the speed, so the paper may now be able to host conversations on more stories.

Automatic moderation tools

The Guardian and the Economist have also experimented with the software, as has Wikipedia, where the tool has been used to look at disagreements over editing. Perspective, which is still in development, will now be made available for free to any publisher who wants it.

It sounds as though rival platforms like Facebook and Twitter could find this very useful, and the Perspective team did not rule out working with them.

Strangely, it doesn't appear that it is going to be used by Google itself, despite the fact that YouTube is home to some of the nastiest comments you will find anywhere on the internet. Google says similar automatic moderation tools are already available to owners of YouTube channels.

Google is also keen to stress that it sees no role for itself in determining what is acceptable in online conversation - that is for its customers to decide.

Like other social media platforms, it is determined to be seen as a technology business not a media giant. As its influence on the way we see the world grows, that position is getting harder to maintain.