Towards a lip-reading computer

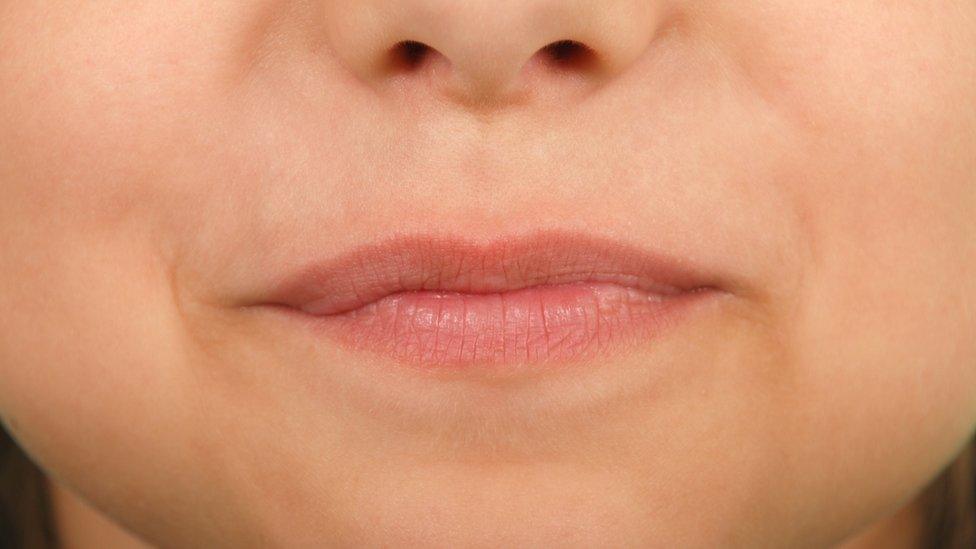

Thousands of hours of BBC footage have been used to train the lip-reading system

Scientists at Oxford say they've invented an artificial intelligence system that can lip-read better than humans.

The system, which has been trained on thousands of hours of BBC News programmes, has been developed in collaboration with Google's DeepMind AI division.

"Watch, Attend and Spell", as the system has been called, can now watch silent speech and get about 50% of the words correct. That may not sound too impressive - but when the researchers supplied the same clips to professional lip-readers, they got only 12% of words right.

Joon Son Chung, a doctoral student at Oxford University's Department of Engineering, explained to me just how challenging a task this is. "Words like mat, bat and pat all have similar mouth shapes." It's context that helps his system - or indeed a professional lip reader - to understand what word is being spoken.

"What the system does," explains Joon, "is to learn things that come together, in this case the mouth shapes and the characters and what the likely upcoming characters are."

Human lip-readers are not likely to be replaced by computers just yet

The BBC supplied the Oxford researchers with clips from Breakfast, Newsnight, Question Time and other BBC news programmes, with subtitles aligned with the lip movements of the speakers. Then a neural network combining state-of-the-art image and speech recognition set to work to learn how to lip-read.

After examining 118,000 sentences in the clips, the system now has 17,500 words stored in its vocabulary. Because it has been trained on the language of news, it is now quite good at understanding that "Prime" will often be followed by "Minister" and "European" by "Union", but much less adept at recognising words not spoken by newsreaders.
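To give a rough feel for how context can settle a choice between look-alike words, here is a minimal sketch in Python. It is not the researchers' method: their system is a neural network working on video, whereas this toy simply counts which word follows which in a handful of invented sentences. The corpus and the names `train_bigrams` and `pick_by_context` are made up for illustration.

```python
from collections import Counter, defaultdict

# Invented stand-in for news subtitles; not data from the Oxford system.
CORPUS = [
    "the prime minister met the european union leaders",
    "the prime minister spoke about the european union",
    "the cat sat on the mat",
    "the prime suspect held a bat",
]


def train_bigrams(sentences):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def pick_by_context(counts, prev_word, candidates):
    """Among look-alike candidates, pick the one seen most often after prev_word."""
    return max(candidates, key=lambda word: counts[prev_word][word])


bigrams = train_bigrams(CORPUS)

# The most common word after "prime" in the toy corpus.
print(bigrams["prime"].most_common(1))

# "mat", "bat" and "pat" look alike on the lips; the previous word breaks the tie.
print(pick_by_context(bigrams, "a", ["mat", "bat", "pat"]))
```

Trained only on these few sentences, the toy has nothing to go on for word pairs it has never seen, which mirrors the article's point about words not spoken by newsreaders.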

A lot more work needs to be done before the system is put to practical use, but the charity Action on Hearing Loss is enthusiastic about this latest advance.

"AI lip-reading technology would be able to enhance the accuracy and speed of speech to text," says Jesal Vishnuram, the charity's technology research manager. "This would help people with subtitles on TV, and with hearing in noisy surroundings."

Right now the system has limitations - it can only operate on full sentences of recorded video. "We want to get it to work in real time," says Joon Son Chung. "As it keeps watching TV, it will learn." And he says getting the system to work live is a lesser challenge than improving its accuracy.

He sees all sorts of potential uses for this technology, from helping people to dictate instructions to their smartphones in noisy environments, to dubbing old silent films.

In many cases, the AI lip-reading system could be used to improve the performance of other forms of speech recognition.

Where the Oxford researchers and the hearing loss charity agree is that this is not a case where AI is going to replace humans.

Professional lip-readers need not fear for their jobs - but they can look forward to a time when technology helps them become a lot more accurate.
