Facebook can't hide behind algorithms


If Facebook’s algorithms were executives, the public would be demanding their heads on a stick, such was the ugly incompetence on display this week.

First, the company admitted a “fail” when its advertising algorithm allowed for the targeting of anti-Semitic users.

Then on Thursday, Mark Zuckerberg said he was handing over details of more than 3,000 advertisements bought by groups with links to the Kremlin, purchases made possible by the advertising algorithms that have made Mr Zuckerberg a multi-billionaire.

Gross misconduct, you might say - but of course you can’t sack the algorithm. And besides, it was only doing what it was told.

“The algorithms are working exactly as they were designed to work,” says Siva Vaidhyanathan, professor of media studies at the University of Virginia.

Which is what makes this controversy so difficult to solve - it is a crisis that strikes at the core business of the world’s biggest social network.

Fundamentally flawed

Facebook didn’t create a huge advertising service by getting contracts with big corporations.

No, its success lies in the little people. The florist who wants to spend a few pounds targeting local teens when the school prom is coming up, or a plumber who has just moved to a new area and needs to drum up work.

Facebook’s wild profits - $3.9bn (£2.9bn) between April and June this year - are due to that automated process. It finds out what users like, it finds advertisers that want to hit those interests, and it marries the two and takes the money. No humans necessary.

But unfortunately, that lack of oversight has left the company open to the kinds of abuse laid bare in ProPublica’s investigation into anti-Semitic targeting.

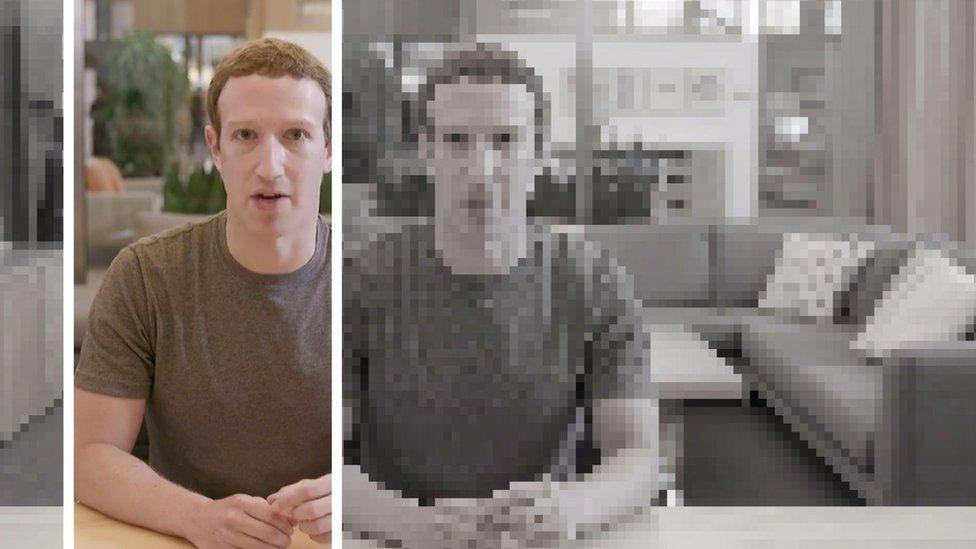

Mark Zuckerberg resembled an "improbably young leader", the New York Times wrote

“Facebook’s algorithms created these categories of anti-Semitic terms,” says Prof Vaidhyanathan, author of Anti-Social Network, a book about Facebook due out later this year.

“It’s a sign of how absurd a human-free system can be, and how dangerous a human-free system can be.”

That system will be slightly less human-free in future. In his nine-minute address, a visibly uncomfortable Mark Zuckerberg said his company would be bringing on human beings to help prevent political abuses. The day before, its chief operating officer said more humans would help solve the anti-Semitism issue as well.

“But Facebook can’t hire enough people to sell ads to other people at that scale,” Prof Vaidhyanathan argues.

“It’s the very idea of Facebook that is the problem.”

'Crazy idea'

Mark Zuckerberg is in choppy, uncharted waters. And as the “leader” (as he likes to sometimes say) of the largest community ever created, he has nowhere to turn for advice or precedent.

This was most evident on 10 November, the day after Donald Trump was elected president of the United States.

When asked if fake news had affected voting, Mr Zuckerberg, quick as a snap, dismissed the suggestion as a “crazy idea”.

That turn of phrase has proven to be Mr Zuckerberg’s biggest blunder to date as chief executive.

Since Trump's election win, Facebook's influence has been under question

His naivety about the power of his own company sparked an immense backlash - internally as well as externally - and an investigation into the impact of fake news and other abuses was launched.

On Thursday, the 33-year-old found himself conceding that not only was abuse affecting elections, but that he had done little to stop it happening.

“I wish I could tell you we're going to be able to stop all interference,” he said.

“But that wouldn't be realistic. There will always be bad people in the world, and we can't prevent all governments from all interference.”

A huge turnaround on his position just 10 months ago.

“It seems to me like he basically admits that he has no control over the system he has built,” Prof Vaidhyanathan says.

No wonder, then, that Mr Zuckerberg “had the look of an improbably young leader addressing his people at a moment of crisis”, as the New York Times put it.

Wolves at the door

This isn’t the first time Facebook’s reliance on machines has landed it in trouble - and it would be totally unfair to characterise this as a problem just affecting Mr Zuckerberg’s firm.

Just in the past week, for instance, a Channel Four investigation revealed that Amazon’s algorithm would helpfully suggest the components you needed to make a homemade bomb based on what other customers also bought.

Instances like that, and others - such as advertising funding terrorist material - have meant the political mood in the US has taken a sharp turn: Big Tech’s algorithms are out of control.

At least two high-profile US senators are drumming up support for a new bill that would force social networks with a user base greater than one million to adhere to new transparency guidelines around campaign ads.

Mr Zuckerberg’s statement on Thursday, an earnest pledge to do better, is being seen as a way to keep the regulation wolves from the door. He - like all the other tech CEOs - would much prefer to deal with this his own way.

But Prof Vaidhyanathan warns he might not get that luxury, and might not find much in the way of sympathy or patience, either.

“All of these problems are the result of the fact Zuckerberg has created and profited from a system that has grown to encompass the world… and harvest information from more than two billion people.”

___

Follow Dave Lee on Twitter @DaveLeeBBC

You can reach Dave securely through encrypted messaging app Signal on: +1 (628) 400-7370