AI image recognition fooled by single pixel change

This turtle can sometimes look like a rifle to some image recognition systems

Computers can be fooled into thinking a picture of a taxi is a dog just by changing one pixel, suggests research.

The limitations emerged from Japanese work on ways to fool widely used AI-based image recognition systems.

Many other scientists are now creating "adversarial" example images to expose the fragility of certain types of recognition software.

There is no quick and easy way to fix image recognition systems to stop them being fooled in this way, warn experts.

Bomber or bulldog?

In their research, Su Jiawei and colleagues at Kyushu University made tiny changes to lots of pictures that were then analysed by widely used AI-based image recognition systems.

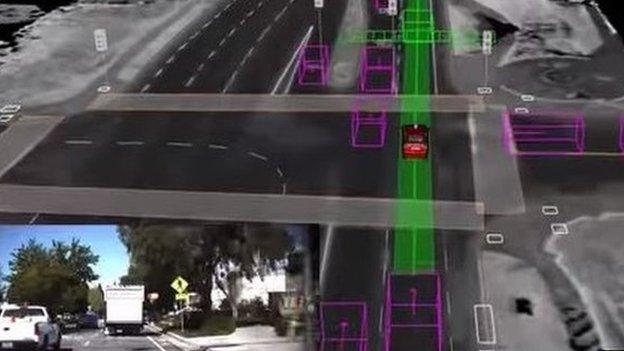

All the systems they tested were based around a type of AI known as deep neural networks. Typically these systems learn by being trained with lots of different examples to give them a sense of how objects, like dogs and taxis, differ.

The researchers found that, for about 74% of the test images, changing a single pixel was enough to make the neural nets wrongly label what they saw. Some errors were near misses, such as a cat being mistaken for a dog, but others, including labelling a stealth bomber a dog, were far wider of the mark.
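To give a sense of what a one-pixel attack involves, here is a minimal sketch in Python. It assumes a toy linear classifier rather than a deep neural network, and it uses a plain brute-force search that stands in for the researchers' own search procedure, which is not described here. The names `predict` and `one_pixel_attack`, the 8x8 image and the weights are all hypothetical and chosen purely for illustration.

```python
def predict(weights, image):
    """Toy linear classifier: label 1 if the weighted pixel sum is positive, else 0."""
    total = sum(w * p
                for w_row, p_row in zip(weights, image)
                for w, p in zip(w_row, p_row))
    return 1 if total > 0 else 0


def one_pixel_attack(weights, image, candidate_values=(0.0, 1.0)):
    """Try every pixel and every candidate value in turn. Return the first
    single-pixel change that flips the label, or None if none is found."""
    original_label = predict(weights, image)
    for row in range(len(image)):
        for col in range(len(image[row])):
            for value in candidate_values:
                trial = [list(r) for r in image]  # copy, so the input is untouched
                trial[row][col] = value
                if predict(weights, trial) != original_label:
                    return (row, col, value), trial
    return None


# Hypothetical 8x8 greyscale image and weights (assumption: one pixel carries
# far more weight than the rest, which is what makes the attack succeed here).
SIZE = 8
weights = [[-0.01] * SIZE for _ in range(SIZE)]
weights[3][4] = 1.0
image = [[0.5] * SIZE for _ in range(SIZE)]
image[3][4] = 0.0

result = one_pixel_attack(weights, image)
if result is not None:
    (row, col, value), adversarial = result
    print("label before:", predict(weights, image))
    print("changed pixel:", (row, col), "to", value)
    print("label after:", predict(weights, adversarial))
```

A real attack would have to search over colour values as well as positions, and would only be able to query the network's output, but the idea is the same: look for the one pixel whose change tips the decision.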

The Japanese researchers developed a variety of pixel-based attacks that caught out all the state-of-the-art image recognition systems they tested.

"As far as we know, there is no data-set or network that is much more robust than others," said Mr Su, from Kyushu, who led the research.

Neural networks work by making links between massive numbers of nodes

Deep issues

Many other research groups around the world were now developing "adversarial examples" that expose the weaknesses of these systems, said Anish Athalye from the Massachusetts Institute of Technology (MIT), who is also looking into the problem.

One example made by Mr Athalye and his colleagues is a 3D-printed turtle that one image classification system insists on labelling a rifle.

"More and more real-world systems are starting to incorporate neural networks, and it's a big concern that these systems may be possible to subvert or attack using adversarial examples," he told the BBC.

While there had been no examples of malicious attacks in real life, he said, the fact that these supposedly smart systems can be fooled so easily was worrying. Web giants including Facebook, Amazon and Google are all known to be investigating ways to resist adversarial exploitation.

"It's not some weird 'corner case' either," he said. "We've shown in our work that you can have a single object that consistently fools a network over viewpoints, even in the physical world.

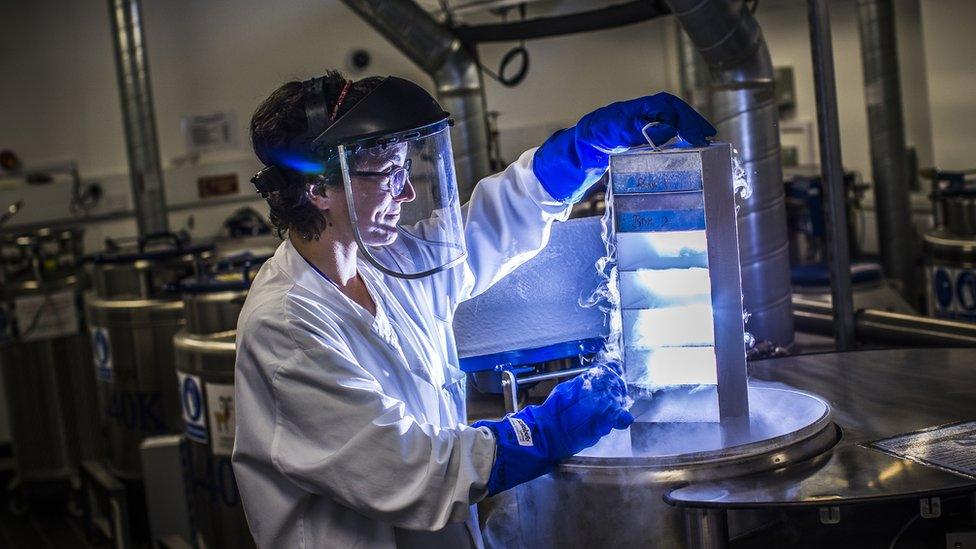

Image recognition systems have been used to classify scenes of natural beauty

"The machine learning community doesn't fully understand what's going on with adversarial examples or why they exist," he added.

Mr Su speculated that adversarial examples exploit a problem with the way neural networks form as they learn.

A learning system based on a neural network typically involves making connections between huge numbers of nodes - like nerve cells in a brain. Analysis involves the network making lots of decisions about what it sees. Each decision should lead the network closer to the right answer.

However, he said, adversarial images sat on "boundaries" between these decisions which meant it did not take much to force the network to make the wrong choice.

"Adversaries can make them go to the other side of a boundary by adding small perturbation and eventually be misclassified," he said.
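The "boundary" idea can be illustrated with a short sketch, again assuming a simple linear classifier rather than a deep network. For such a model the decision boundary is the line where the score equals zero, and the smallest change that pushes an input across it points along the weight vector. The function names and the numbers below are hypothetical.

```python
import math


def score(w, b, x):
    """Linear score: positive means one class, negative means the other."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def smallest_crossing_step(w, b, x, overshoot=0.01):
    """Smallest (Euclidean) change to x that lands just past the boundary
    score == 0. The overshoot moves slightly beyond the boundary itself."""
    s = score(w, b, x)
    norm_sq = sum(wi * wi for wi in w)
    scale = -(1.0 + overshoot) * s / norm_sq
    return [scale * wi for wi in w]


# Hypothetical classifier and an input that sits close to its boundary.
w, b = [3.0, 4.0], -1.0
x = [0.2, 0.12]

step = smallest_crossing_step(w, b, x)
moved = [xi + di for xi, di in zip(x, step)]

print("score before:", score(w, b, x))
print("score after: ", score(w, b, moved))
print("size of change:", math.hypot(*step))
```

The input moves by less than 0.02 in this example, yet its score changes sign, so the toy classifier would give it the other label.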

Fixing deep neural networks so they were no longer vulnerable to these issues could be tricky, said Mr Athalye.

"This is an open problem," he said. "There have been many proposed techniques, and almost all of them are broken."

One promising approach was to use the adversarial examples during training, said Mr Athalye, so the networks were taught to recognise them. But, he said, even this did not solve all the issues exposed by this research.
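A rough sketch of training on adversarial examples follows. It is not Mr Athalye's method. It assumes a tiny logistic-regression model and generates the adversarial inputs with the fast gradient sign method, a standard technique swapped in here for illustration. The data, the `epsilon` perturbation size and all function names are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_prob(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, epsilon):
    """Nudge every feature by epsilon in the direction that increases the loss.
    For logistic loss, the gradient with respect to x is (p - y) * w."""
    p = predict_prob(w, b, x)
    return [xi + epsilon * sign((p - y) * wi) for xi, wi in zip(x, w)]


def adversarial_train(data, epsilon=0.3, lr=0.1, epochs=200):
    """Gradient descent on clean examples plus freshly made adversarial ones."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        batch = data + [(fgsm(w, b, x, y, epsilon), y) for x, y in data]
        grad_w, grad_b = [0.0] * len(w), 0.0
        for x, y in batch:
            err = predict_prob(w, b, x) - y
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        w = [wi - lr * g / len(batch) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(batch)
    return w, b


def accuracy(w, b, data):
    return sum((predict_prob(w, b, x) > 0.5) == (y == 1) for x, y in data) / len(data)


# Hypothetical data: two well-separated clusters of 2D points, labelled 1 and 0.
rng = random.Random(0)
data = [([rng.gauss(2, 0.5), rng.gauss(2, 0.5)], 1) for _ in range(100)]
data += [([rng.gauss(-2, 0.5), rng.gauss(-2, 0.5)], 0) for _ in range(100)]

w, b = adversarial_train(data)
attacked = [(fgsm(w, b, x, y, 0.3), y) for x, y in data]
print("accuracy on clean inputs:   ", accuracy(w, b, data))
print("accuracy on attacked inputs:", accuracy(w, b, attacked))
```

On a toy problem like this the defence looks easy. As Mr Athalye notes, it does not settle the matter for real image recognition systems.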

"There is certainly something strange and interesting going on here, we just don't know exactly what it is yet," he said.
