UK PM seeks 'safe and ethical' artificial intelligence

Theresa May delivers her speech in Davos

The prime minister says she wants the UK to lead the world in deciding how artificial intelligence can be deployed in a safe and ethical manner.

In a speech at the World Economic Forum in Davos, Theresa May said a new advisory body, previously announced in the Autumn Budget, will co-ordinate efforts with other countries.

In addition, she confirmed that the UK would join the Davos forum's own council on artificial intelligence.

But others may have stronger claims.

Earlier this week, Google picked France as the base for a new research centre dedicated to exploring how AI can be applied to health and the environment.

Facebook also announced it was doubling the size of its existing AI lab in Paris, while software firm SAP committed itself to a 2bn euro ($2.5bn; £1.7bn) investment into the country that will include work on machine learning.

Meanwhile, a report released last month by the Eurasia Group consultancy suggested that the US and China are engaged in a "two-way race for AI dominance".

It predicted Beijing would take the lead thanks to the "insurmountable" advantage of offering its companies more flexibility in how they use data about its citizens.

Theresa May is expected to meet US President Donald Trump at the Davos event on Thursday.

'Unthinkable advances'

The prime minister based the UK's claim to leadership in part on the health of its start-up economy, quoting a figure that a new AI-related company has been created in the country every week for the last three years.

In addition, she said the UK is recognised as first in the world for its preparedness to "bring artificial intelligence into government".

DeepMind's game-playing AI experiments are among the UK's highest profile success stories

However, she recognised that many people have concerns about potential job losses and other impacts of the tech, and declared that AI poses one of the "greatest tests of leadership for our time".

"But it is a test that I am confident we can meet," she added.

"For right across the long sweep of history from the invention of electricity to the advent of factory production, time and again initially disquieting innovations have delivered previously unthinkable advances and we have found the way to make those changes work for all our people," Mrs May said.

One way of doing so is through a new UK advisory body, the Centre for Data Ethics and Innovation.

Academics and tech industry leaders differ in opinion about the risks involved.

At one end of the scale, Prof Stephen Hawking has warned that AI could "spell the end of the human race", while Tesla's Elon Musk has said that a universal basic income - in which people get paid whether or not they work - has a "good chance" of becoming necessary as jobs become increasingly automated.

Facebook's AI chief has played down the risk of robots destroying humanity

But Facebook's AI chief Yann LeCun has said society will develop the "checks and balances" to prevent a Terminator movie-like apocalypse ever coming to pass.

And earlier this week, Google's former chief Eric Schmidt told the BBC he did not believe predictions of mass job losses would occur.

"There will be some jobs eliminated but the vast majority will be augmented," he explained.

"You're going to have more doctors not fewer. More lawyers not fewer. More teachers not fewer.

"But they are going to be more efficient."

Eric Schmidt thinks AI will enhance jobs rather than destroy them en masse

While many tech industry leaders acknowledge there will be a need for new rules and regulations, they also suggest it may be premature to introduce them in the short term.

Microsoft, for example, has launched a book called The Future Computed to coincide with the Davos event.

The book proposes that the company be given time to develop rules to govern its own AI work internally before legislation is passed.

Analysis:

Much of the AI research involved in developing Amazon's Alexa voice assistant was done in the UK

By Rory Cellan-Jones, Technology correspondent

Is the UK really on track to lead the world in Artificial Intelligence?

Well, the United States and China might have a thing or two to say about that.

Both are engaged in an AI arms race and are investing the kind of sums that would make Chancellor Philip Hammond's - or even Foreign Secretary Boris Johnson's - eyes water.

Still, it is true that in London and Cambridge some of the world's leading AI scientists are at work. It was here that the Alexa digital voice assistant was developed and where a computer was trained to defeat champion players of the Chinese game of Go.

The trouble is that those scientists were employed by Amazon and by Google, which bought up the DeepMind AI business when it was still in its infancy.

That's a pattern frequently repeated in the UK technology sector. A Chinese AI investor on a trip to the UK this week expressed surprise that the government had not done more to protect these AI assets.

Now there is to be another AI body, the Centre for Data Ethics and Innovation, joining in a global conversation about the moral challenges posed by AI.

We already have the Alan Turing Institute which has a similar mission.

The concern is that while the UK agonises over the implications of this technology, the Chinese will just be getting on with it.

Telegram trouble

Theresa May also addressed the need for tech firms to tackle terrorism and extremist content during her speech.

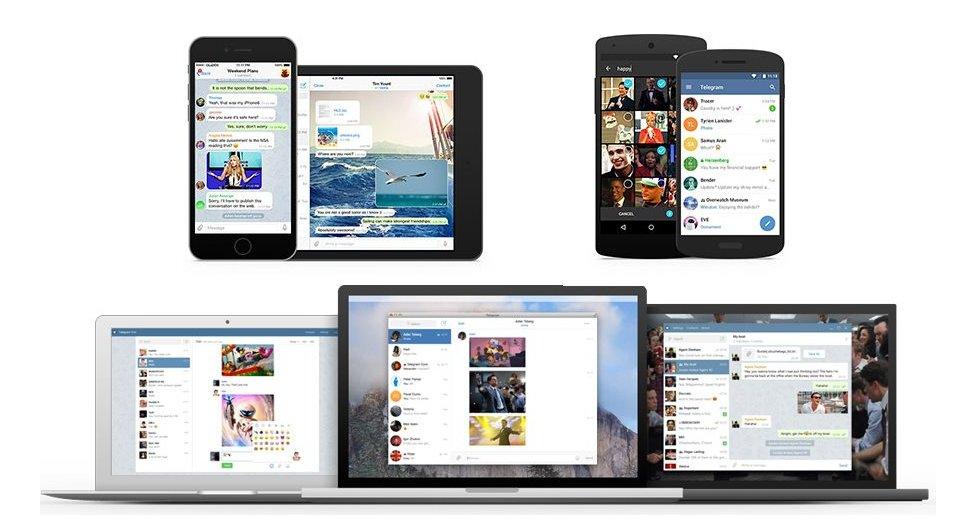

Telegram is a privacy-focused chat app

She said Telegram - a privacy-focused chat app - became popular with criminals and terrorists over a short period of time.

"We need to see more co-operation from smaller platforms like this," she added.

Telegram has previously said it is "no friend of terrorists" and blocked channels used by extremists.

In addition, the prime minister called on tech company investors to play their part by demanding that trust and safety issues be considered.
