Deepfake porn videos deleted from internet by Gfycat

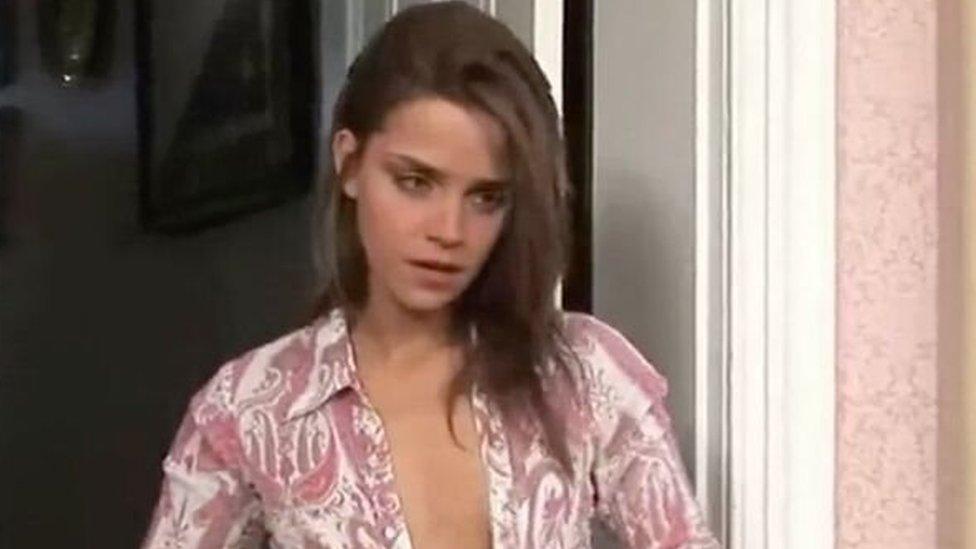

Emma Watson is among several actresses who have had their faces merged with pornography as seen in this screenshot

Pornographic videos that used new software to replace the original face of an actress with that of a celebrity are being deleted by a service that hosted much of the content.

San Francisco-based Gfycat has said it thinks the clips are "objectionable".

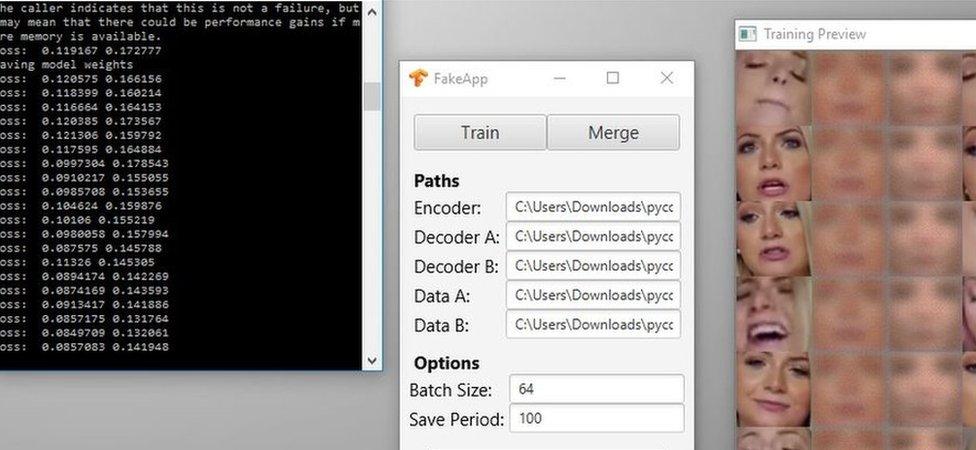

The creation of such videos has become more common after the release of a free tool earlier this month that made the process relatively simple.

The developer says FakeApp has been downloaded more than 100,000 times.

It works by using a machine-learning algorithm to create a computer-generated version of the subject's face.

To do this it requires several hundred photos of the celebrity in question for analysis and a video clip of the person whose features are to be replaced.

The results - known as deepfakes - differ in quality.

FakeApp's creator says a single button press is required to generate the videos once a computer has been given source material

But in some cases, where the two people involved are of a similar build, they can be quite convincing.

Some people have used the technology to create non-pornographic content.

These include a video in which the face of Germany's Chancellor Angela Merkel has been replaced with that of US President Donald Trump, and several in which one movie star's features have been swapped with those of another.

But the most common use of the tool has apparently been to re-edit pornography so that it features popular actresses and female singers. Many of these clips have subsequently been shared online and critiqued by others.

Many creators uploaded their clips to Gfycat. The service is commonly used to host short videos that are then posted to social website Reddit and elsewhere.

Gfycat allows adult content, but began deleting some of the deepfakes earlier this week, a matter that was first reported by the news site Motherboard.

"Our terms of service allow us to remove content that we find objectionable. We are actively removing this content," Gfycat said in a brief statement.

Photos of the singer Ariana Grande have been used to replace the features of one pornographic actress

Reddit has yet to comment on the matter. It still provides access to clips that Gfycat has yet to delete, as well as deepfakes hosted by others.

Its content policy prohibits "involuntary pornography".

But it is not clear whether it would recognise the deepfakes as such. Moreover, it requires the subject involved to make a complaint.

However, Gfycat is not the only party to have forced some of the material offline.

The rights holder for some of the explicit content has demanded that clips be blocked on copyright grounds.

In addition, the chat service Discord closed down a group dedicated to sharing the clips, saying that they had violated its rules on non-consensual pornography.
