Children 'at risk of robot influence'

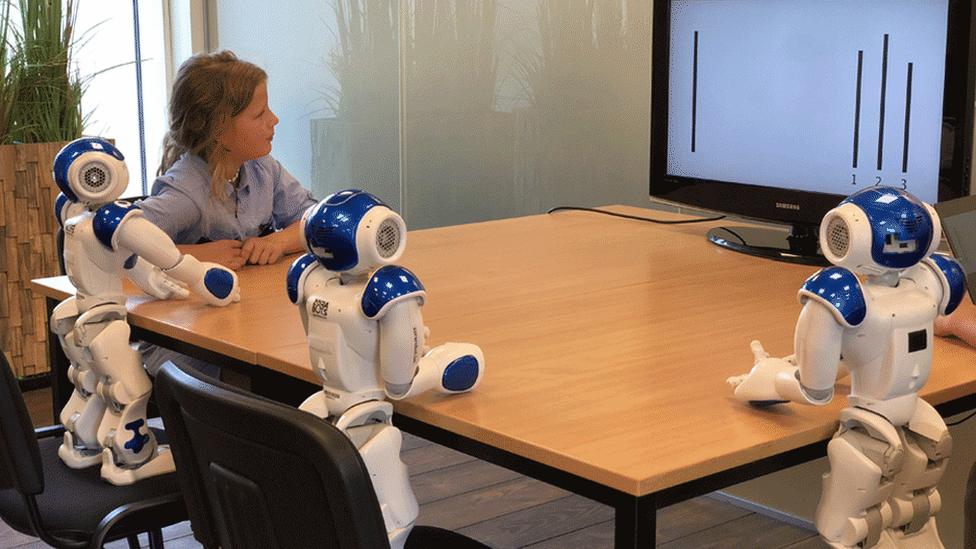

The children were asked to compare lines on a screen, with robots offering their opinions too

Forget peer pressure: future generations are more likely to be influenced by robots, a study suggests.

The research, conducted at the University of Plymouth, found that while adults were not swayed by robots, children were.

The fact that children tended to trust robots without question raised ethical issues as the machines became more pervasive, said researchers.

They called for the robotics community to build in safeguards for children.

Those taking part in the study completed a simple test, known as the Asch paradigm, which involved finding two lines that matched in length.

Often called the conformity experiment, the test has historically shown that people tend to agree with their peers, even when they have individually given a different answer.

In this case, the peers were robots. When children aged seven to nine were alone in the room, they scored an average of 87% on the test.

But when the robots joined them, their scores dropped to 75% on average. Of the wrong answers, 74% matched those of the robots.

More needed to be done to protect children in future interactions with robots, the research said

Professor of robotics Tony Belpaeme, who led the research, said: "People often follow the opinions of others and we've known for a long time that it is hard to resist taking over views and opinions of people around us. We know this as conformity. But as robots will soon be found in the home and the workplace, we were wondering if people would conform to robots.

"What our results show is that adults do not conform to what the robots are saying. But when we did the experiment with children, they did. It shows children can perhaps have more of an affinity with robots than adults, which does pose the question: what if robots were to suggest, for example, what products to buy or what to think?"

The conclusion? "Children increasingly yielded to social pressure exerted by a group of robots; however, adults resisted being influenced by our robots."

The researchers said that there needed to be further discussions about protective measures to "minimise the risk to children during social child-robot interaction".

Prof Noel Sharkey, who chairs the Foundation for Responsible Robotics, said of the research: "This study shores up concerns about the use of robots with children.

"If robots can convince children (but not adults) that false information is true, the implication for the planned commercial exploitation of robots for childminding and teaching is problematic."

But he added: "One missing component from the studies was testing the children with a voice from a computer. This means that we can't tell if the effect has anything to do with the robots or just the voices played through them."