Wikipedia's broken links fixed by the Internet Archive

Nine million broken Wikipedia links have been fixed thanks to an alliance with the Internet Archive.

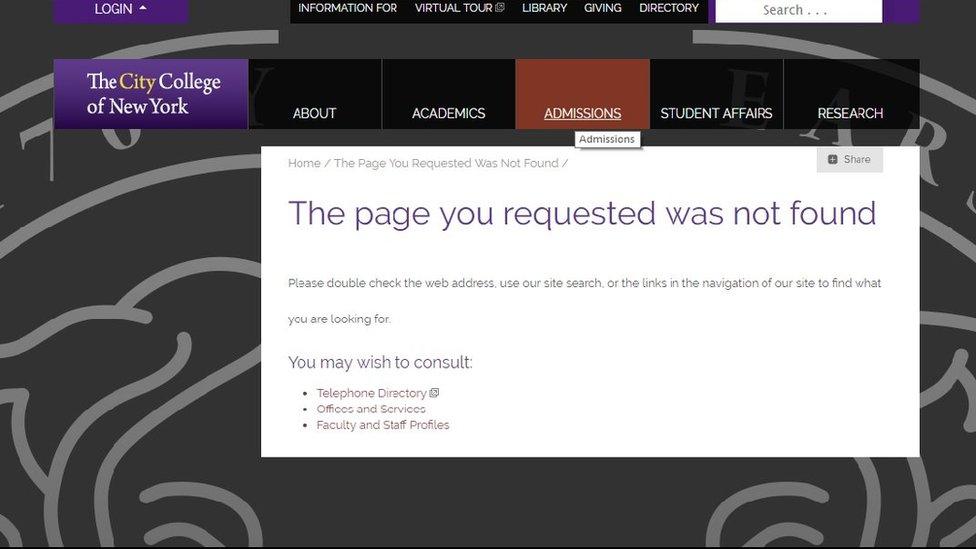

The online encyclopedia's editors have long been encouraged to provide links to web-based sources. But the details can be lost if the third-party sites close or update their pages.

To address this, visitors are now pointed, when required, to archived snapshots of what the sites used to show.

It has also emerged that some editors voted to restrict use of Breitbart.

A post on Wikipedia's reliable sources page states that there was a "very clear consensus" that the right-wing news site should stop being used as a source for facts "due to its unreliability".

It suggested that Breitbart could still, however, be used to attribute viewpoints.

But some editors were concerned by the idea.

"Breitbart should be used with caution - but an outright ban on citing it would hurt Wikipedia far more than help," wrote one.

The BBC has contacted Breitbart for a response.

The decision follows a similar move against the Daily Mail last year.

Volunteers were subsequently encouraged to review existing Wikipedia citations of the UK newspaper and either remove or replace them.

The Motherboard news site has reported that Wikipedia editors have also advocated similar limits on the use of articles by the left-wing Occupy Democrats organisation and the conspiracy-theory media platform InfoWars.

Link rot

The collaboration with the Internet Archive makes use of the San Francisco-based project's Wayback Machine tool.

This allows users to enter a web address and then find stored versions of how a page appeared on dates in the past.

The non-profit said it had begun using automated software three years ago to hunt out links that resulted in "page not found" or "404" and "500" errors.

This bot then searched the Wayback Machine for the relevant information and automatically updated the links.
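The check-then-replace routine described above can be sketched in a few lines of Python. This is an illustration only, not the Internet Archive's actual bot: the function names (`http_status`, `fetch_availability`, `closest_snapshot`, `repair_link`) are hypothetical, and the sketch assumes the archive's public availability endpoint at `https://archive.org/wayback/available`, which takes a `url` and an optional `timestamp` and returns JSON describing the closest stored snapshot.

```python
import json
import urllib.error
import urllib.parse
import urllib.request
from typing import Callable, Optional

# Status codes the article names as signs of a broken link.
BROKEN_STATUSES = {404, 500}

# Assumed endpoint: the Wayback Machine's public availability API.
AVAILABILITY_ENDPOINT = "https://archive.org/wayback/available"


def http_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code for url (makes a network call).

    Connection failures (urllib.error.URLError) are not handled here.
    """
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # urllib raises on 4xx/5xx responses; the code is what we want.
        return err.code


def fetch_availability(url: str, timestamp: str, timeout: float = 10.0) -> dict:
    """Ask the availability endpoint for the snapshot closest to timestamp.

    timestamp is a digit string such as "20130101" (YYYYMMDD).
    """
    query = urllib.parse.urlencode({"url": url, "timestamp": timestamp})
    with urllib.request.urlopen(
        f"{AVAILABILITY_ENDPOINT}?{query}", timeout=timeout
    ) as response:
        return json.load(response)


def closest_snapshot(payload: dict) -> Optional[str]:
    """Pull the snapshot URL out of an availability response, if any."""
    closest = payload.get("archived_snapshots", {}).get("closest")
    if closest and closest.get("available"):
        return closest.get("url")
    return None


def repair_link(
    url: str,
    cited_on: str,
    status_of: Callable[[str], int] = http_status,
    lookup: Callable[[str, str], dict] = fetch_availability,
) -> str:
    """Return an archived URL if the live link is broken, else the original."""
    if status_of(url) not in BROKEN_STATUSES:
        return url
    # Fall back to the original link when no snapshot exists.
    return closest_snapshot(lookup(url, cited_on)) or url
```

Passing the status check and the lookup in as parameters is a design choice made for this sketch: it lets the logic be exercised with canned responses rather than live requests. A real bot would also need rate limiting, retries and a way to write the change back to the article, none of which is shown here.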

This, the archive's director said, had resulted in six million pages lost to "link rot" being restored.

The bot was designed to seek out and fix broken links

Mark Graham added that members of the Wikipedia community had also helped tackle a related issue - "content drift".

This occurs when a page remains online but its text and images change so that they no longer resemble what the editor who linked to them had intended.

These human volunteers had fixed more than three million links to date, the director wrote.

"We will expand our efforts to check and edit more Wikipedia sites and increase the speed which we scan those sites and fix broken links," Mr Graham concluded, adding that he also intended to explore whether Wikipedia's contributors could be encouraged to use Wayback Machine snapshots in the first place rather than live-web links.
