Hate speech: Facebook, Twitter and YouTube told off by MPs


Yvette Cooper expressed frustration with all three firms

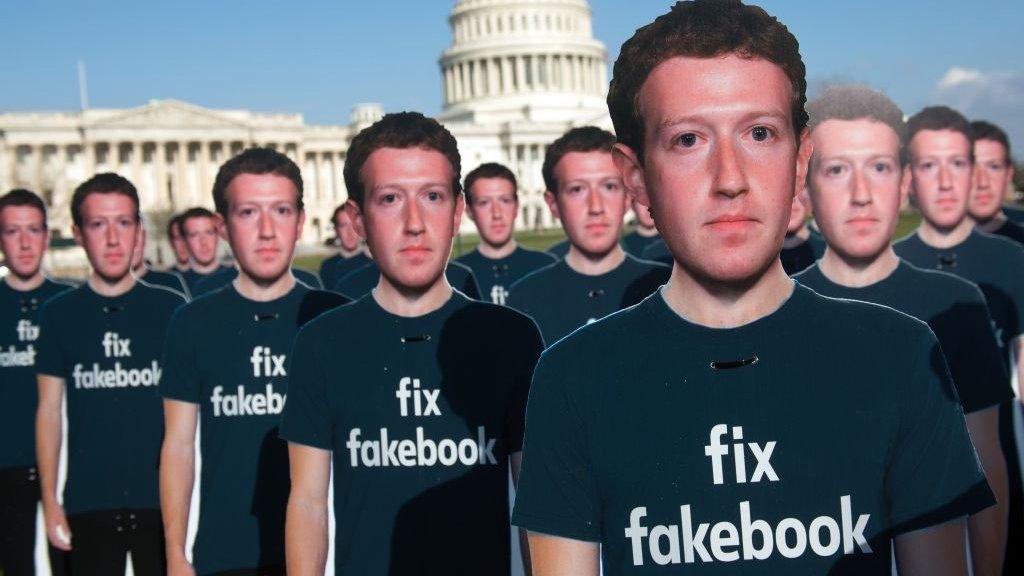

Facebook, Twitter and YouTube have faced tough questions from frustrated MPs about why they are still failing to remove hate speech on their platforms.

Facebook was challenged on why copies of the video showing the New Zealand mosque shootings remained online.

Meanwhile, YouTube was described as a "cesspit" of neo-Nazi content.

All three said they were improving policies and technology, and increasing the number of people working to remove hate speech from their platforms.

But MPs seemed unimpressed, with several saying the firms were "failing" to deal with the issue, despite repeated assurances that their systems were improving.

"It seems to me that time and again you are simply not keeping up with the scale of the problem," said chair Yvette Cooper.

Labour MP Stephen Doughty said he was "fed up" with the lack of progress on hate speech.

Executives from the three platforms were asked whether they were actively sharing information with police about those posting terrorist propaganda.

All three said they did when there was "an imminent threat to life" but not otherwise.

Mr Doughty claims to have found links to neo-Nazi groups on all platforms

Ms Cooper, a Labour MP, opened the questioning by asking why, according to reports in the New Zealand media, some copies of the video showing the mosque shootings in Christchurch remained on Facebook, Facebook-owned Instagram and YouTube.

Facebook's head of public policy, Neil Potts, told her: "This video was a new type that our machine learning system hadn't seen before. It was a first person shooter with a GoPro on his head. If it was a third person video, we would have seen that before.

"This is unfortunately an adversarial space. Those sharing the video were deliberately splicing and cutting it and using filters to subvert automation. There is still progress to be made with machine learning."

Ms Cooper also asked the executives whether decisions like the Sri Lankan government's move to block social media sites in the wake of the recent bombings in the country would happen "more often because governments have no confidence in your ability to sort things".

Marco Pancini, director of public policy at YouTube, said: "We need to respect this decision. But voices from civil society are raising concerns about the ability to understand what is happening and to communicate if social media is blocked."

Facebook reiterated that it had dedicated teams working in different languages around the world to deal with content moderation.

"We feel it is better to have an open internet because it is better to know if someone is safe," said Mr Potts.

"But we share the concerns of the Sri Lankan government and we respect and understand that."

'Not doing your jobs'

Mr Doughty asked why so much neo-Nazi content was still so easily found on YouTube, Twitter and Facebook.

"I can find page after page using utterly offensive language. Clearly the systems aren't working," he said.

He accused all three firms of "not doing your jobs".

MPs appeared extremely frustrated, with several saying that concerns about specific accounts had been raised repeatedly, yet those accounts remained on all three platforms.

"We have a number of ongoing assessments. We have no interest in having violent extremist groups on our platform but we can't ban our way out of the problem," said Twitter's head of public policy, Katy Minshall.

"If you have a deep link to hate, we remove you," said Mr Potts.

"Well you clearly don't, Mr Potts," replied Mr Doughty.

"We can't ban our way out of the problem," said Twitter's Katy Minshall

Describing YouTube as a "cesspit" of white supremacist material, Mr Doughty said: "Link after link after link. This is in full view."

"We need to look into this content," said Mr Pancini. "It is absolutely an important issue."

He was asked whether YouTube's algorithms promoted far-right content, even to users who did not want to see it.

"Recommended videos is a useful feature if you are looking for music but the challenge for speech is that it is a different dynamic. We are working to promote authoritative content and make sure controversial and offensive content has less visibility," he said.

He was pressed on why the algorithms were not changed.

"It is a very good question but it is not so black and white. We need to find ways to improve quality of results of the algorithm," Mr Pancini said.

Ms Cooper asked Mr Pancini why she personally was being recommended "increasingly extreme content" when she searched on YouTube.

"The logic is based on user behaviour," he replied. "I'm aware of the challenges this raises when it comes to political speech. I'm not here to defend this type of content."

She seemed particularly frustrated that she had put the same questions to YouTube 18 months earlier, yet felt nothing had changed because she was still being shown the same content.
