King's Cross face recognition 'last used in 2018'

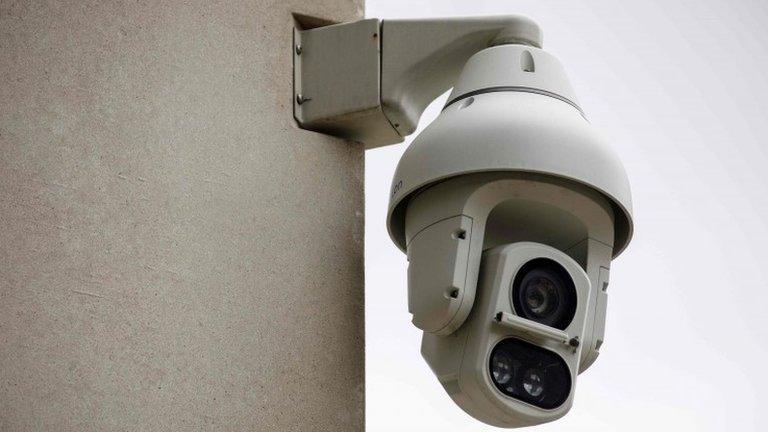

Part of the King's Cross complex

Facial-recognition technology has not been used at London's King's Cross Central development since March 2018, according to the 67-acre (0.3-sq-km) site's developer.

When the Financial Times first reported the use of the technology in August, a spokeswoman said it was to "ensure public safety".

The partnership now says only two on-site cameras used facial recognition.

They had been in one location and had been used to help the police, it added.

According to a statement on its website, the two cameras were operational between May 2016 and March 2018 and the data gathered was "regularly deleted".

The King's Cross partnership also denied any data had been shared commercially.

It said the technology had been used to help the Metropolitan Police and British Transport Police "prevent and detect crime in the neighbourhood".

But both forces told BBC News they were unaware of any police involvement.

The partnership said it had since shelved further work on the technology and "has no plans to reintroduce any form of FRT [facial-recognition technology] at the King's Cross estate".

However, as recently as last month, a security company was advertising for a CCTV operator for the area.

The duties of the role included: "To oversee and monitor the health, safety and welfare of all officers across the King's Cross estate using CCTV, Face watch and surveillance tactics."

The advert was later amended to remove this detail, after BBC News raised the issue.

Following the FT's report, the Information Commissioner's Office (ICO) launched an investigation into how the facial-recognition data gathered was being stored.

The Mayor of London, Sadiq Khan, also wrote to the King's Cross Central development group asking for reassurance that its use of facial-recognition technology was legal.

The latest statement was posted online on the eve of technology giant Samsung opening an event space on the site, with a launch event planned for Tuesday evening, 3 September.

The FT reporter who broke the original story described the statement as "strange".

One critic of facial-recognition technology, Dr Stephanie Hare, said many questions remained about what had been going on in the area, which, while privately owned, is open to the public and contains a number of bars, restaurants and family spaces.

"It does not change the fundamentals of the story in terms of the implications for people's privacy and civil liberties, or the need for the ICO to investigate - they deployed this technology secretly for nearly two years," she said.

"Even if they deleted data, I would want to know, 'Did they do anything with it beforehand, analyse it, link it to other data about the people being identified? Did they build their own watch-list? Did they share this data with anyone else? Did they use it to create algorithms that have been shared with anyone else? And most of all, were they comparing the faces of people they gathered to a police watch-list?'"

Dr Hare also said it was unclear why the partnership had stopped using it.

"Was it not accurate? Ultimately unhelpful? Or did they get what they needed from this 22-month experiment?" she said.
