Why Terminator: Dark Fate is sending a shudder through AI labs


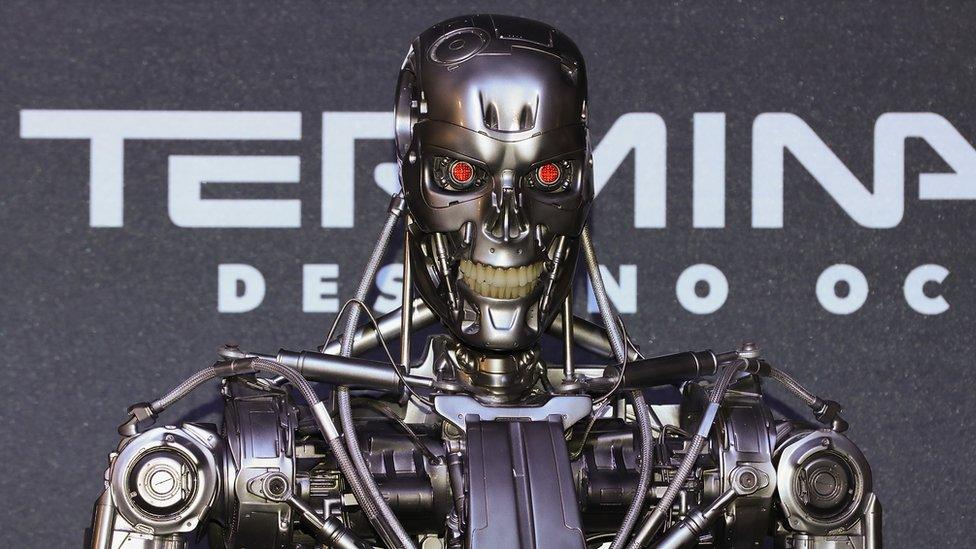

Arnold Schwarzenegger means it when he says: "I'll be back," but not everyone is thrilled there's a new Terminator film out this week.

In labs at the University of Cambridge, Facebook and Amazon, researchers fear Terminator: Dark Fate could mislead the public on the actual dangers of artificial intelligence (AI).

AI pioneer Yoshua Bengio told BBC News he didn't like the Terminator films for several reasons.

"They paint a picture which is really not coherent with the current understanding of how AI systems are built today and in the foreseeable future," says Prof Bengio, who is sometimes called one of the "godfathers of AI" for his work on deep learning in the 1990s and 2000s.

"We are very far from super-intelligent AI systems and there may even be fundamental obstacles to get much beyond human intelligence."

AI pioneer Yoshua Bengio thinks we're still a long way off super-intelligent machines

In the same way Jaws influenced a lot of people's opinions on sharks in a way that didn't line up with scientific reality, sci-fi apocalyptic movies such as Terminator can generate misplaced fears of uncontrollable, all-powerful AI.

"The reality is, that's not going to happen," says Edward Grefenstette, a research scientist at Facebook AI Research in London.

While enhanced human cyborgs run riot in the new Terminator, today's AI systems are just about capable of playing board games such as Go or recognising people's faces in a photo. And while they can do those tasks better than humans, they're a long way off being able to control a body.

"Current state-of-the-art systems would not even be able to succeed in controlling the body of a mouse," says Prof Bengio, who cofounded the Canadian AI research company Element AI.

Today's AI agents struggle to excel at more than one task, which is why they're often referred to as "narrow AI" systems as opposed to "general AI".

Neil Lawrence, a machine learning professor at the University of Cambridge, thinks we should reconsider what we call AI

But it would be more appropriate to call a lot of today's AI technology "computers and statistics", according to Neil Lawrence, who recently left Amazon and joined the University of Cambridge as the first DeepMind professor of machine learning.

"Most of what we're calling AI is really using large computational capabilities combined with a lot of data, to unpick statistical correlations," he says.

Individuals such as Elon Musk have done a good job of scaring some people into thinking Terminator could become a reality in the not-so-distant future, thanks in part to warnings that AI is "potentially more dangerous than nukes".

But the AI community is unsure how quickly machine intelligence will advance during the next five years, let alone the next 10 to 30 years.

There's also scepticism in the community about whether AI systems will ever achieve the same level of intelligence as humans, or indeed whether this would be desirable.

"Typically, when people talk about the risks of AI, they have in mind scenarios whereby machines have achieved 'artificial general intelligence' and have the cognitive abilities to act beyond the control and specification of their human creators," Dr Grefenstette says.

"With all due respect to people who talk of the dangers of AGI and its imminence, this is an unrealistic prospect, given that recent progress in AI still invariably focuses on the development of very specific skills within controlled domains."

We should be more concerned with how humans abuse the power AI offers, Prof Bengio says.

How will AI further enhance inequality? How will AI be used in surveillance? How will AI be used in warfare?

The idea of relatively dumb AI systems controlling rampant killing machines is terrifying.

Prof Lawrence says: "The film may make people think about what future wars will look like."

And Joanna Bryson, who leads the Bath Intelligent Systems group at the University of Bath, says: "It is good to get people thinking about the problems of autonomous weapons systems."

But we don't need to look into the future to see AI doing damage. Facial-recognition systems are being used to track and oppress Uighurs in China, bots are being used to manipulate elections, and "deepfake" videos are already out there.

"AI is already helping us destroy our democracies and corrupt our economies and the rule of law," Prof Bryson says.

Thankfully, many of today's AI researchers are working hard to ensure their systems have a positive impact on people and society, focusing their efforts on areas such as healthcare and climate change.

Over at Facebook, for example, researchers are trying to work out how to train artificial systems that understand our language, follow instructions, and communicate with us or other systems.

"Our primary goal is to produce artificial intelligence that is more cooperative, communicative, and transparent about its intentions and plans while helping people in real-world scenarios," Dr Grefenstette says.

Joanna Bryson is to become the professor of ethics and technology at Berlin's Hertie School of Governance in February

Ultimately, the responsibility of communicating the true state of AI lies with the media.

The choice of photo directly affects interest in an article, but journalism schools around the world would strongly advise against misleading the public for the sake of clicks.

Unfortunately, there have been numerous instances of outlets using stills from the Terminator films in stories about relatively incremental breakthroughs.

Prof Bryson says she skips over them like adverts, while Prof Lawrence says he assumes they're clickbait.

Journalists writing AI stories "should show the cubicles of the people actually developing the AI", at Google or Facebook, for example, Prof Bryson says.

"The press needs to stop treating AI like some kind of unearthed scientific discovery dug out of the ground or found on Mars," she says. "AI is just a technology that people use to do things."

And she makes a fair point. But be honest, would you have clicked on this story if it didn't have a killer robot photo?
