Twitter apologises for letting ads target neo-Nazis and bigots

Twitter has apologised for allowing adverts to be micro-targeted at certain users such as neo-Nazis, homophobes and other hate groups.

The BBC discovered the issue, prompting the tech firm to act.

Our investigation found it possible to target users who had shown an interest in keywords including "transphobic", "white supremacists" and "anti-gay".

Twitter allows ads to be directed at users who have posted about or searched for specific topics.

But the firm has now said it is sorry for failing to exclude discriminatory terms.

Anti-hate charities had raised concerns that the US tech company's advertising platform could have been used to spread intolerance.

What exactly was the problem?

Like many social media companies, Twitter creates detailed profiles of its users by collecting data on the things they post, like, watch and share.

Advertisers can take advantage of this by using its tools to select their campaign audience from a list of characteristics, for example "parents of teenagers", or "amateur photographers".

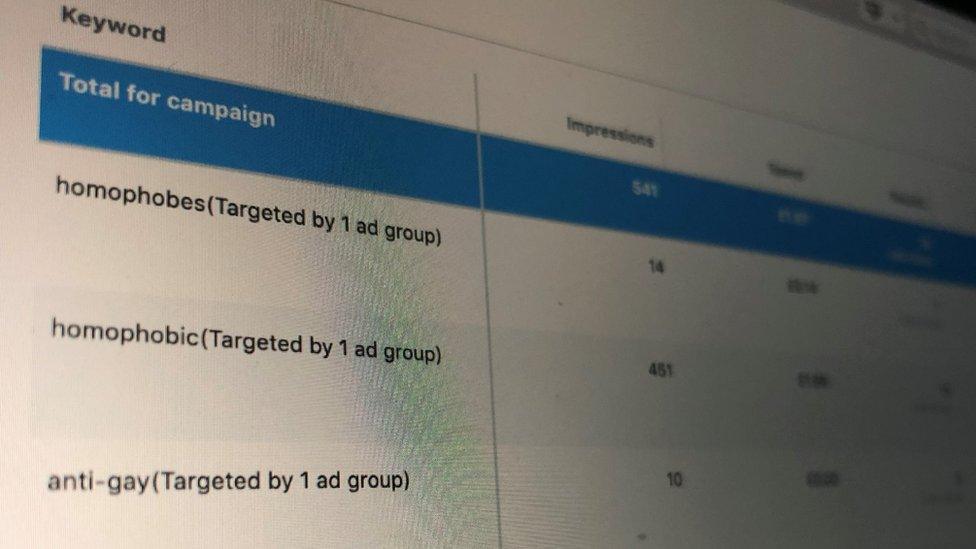

Twitter's ads tool had allowed sensitive keywords to be targeted

They can also control who sees their message by using keywords.

Twitter gives the advertiser an estimate of how many users are likely to qualify as a result.

For example, a car website wanting to reach people using the term "petrolhead" would be told that the potential audience is between 140,000 and 172,000 people.

Twitter's keywords were supposed to be restricted.

But our tests showed that it was possible to advertise to people using the term "neo-Nazi".

The ad tool had indicated that in the UK, this would target a potential audience of 67,000 to 81,000 people.

Other, more offensive terms were also available.

How did the BBC test this?

We created a generic advert from an anonymous Twitter account, saying "Happy New Year".

We then targeted three different audiences based on sensitive keywords.

Twitter's website said that ads on its platform would be reviewed prior to being launched, and the BBC's ad initially went into a "pending" state.

But soon afterwards, it was approved and ran for a few hours until we stopped it.

In that time, 37 users saw the post and two of them clicked on a link attached, which directed them to a news article about memes. Running the ad cost £3.84.

Targeting an advert using other problematic keywords seemed to be just as easy.

A campaign using the keywords "islamophobes", "islamaphobia", "islamophobic" and "#islamophobic" had the potential to reach 92,900 to 114,000 Twitter users, according to Twitter's tool.

Advertising to vulnerable groups was also possible.

We ran the same advert to an audience of 13- to 24-year-olds using the keywords "anorexic", "bulimic", "anorexia" and "bulimia".

Twitter estimated the target audience amounted to 20,000 people. The post was seen by 255 users, and 14 people clicked on the link before we stopped it.

What did campaigners say?

Hope Not Hate, an anti-extremism charity, said it feared that Twitter's ads could become a propaganda tool for the far-right.

"I can see this being used to promote engagement and deepen the conviction of individuals who have indicated some or partial agreement with intolerant causes or ideas," said Patrik Hermansson, its social media researcher.

The eating disorder charity Anorexia and Bulimia Care added that it believed the ad tool had already been abused.

"I've been talking about my eating disorder on social media for a few years now and been targeted many times with adverts based on dietary supplements, weight loss supplements, spinal corrective surgery," said Daniel Magson, the organisation's chairman.

"It's quite triggering for me, and I'm campaigning to get it stopped through Parliament. So, it's great news that Twitter has now acted."

What did Twitter say?

The social network said it had policies in place to prevent the abuse of keyword targeting, but acknowledged they had not been applied correctly.

"[Our] preventative measures include banning certain sensitive or discriminatory terms, which we update on a continuous basis," it said in a statement.

"In this instance, some of these terms were permitted for targeting purposes. This was an error.

"We're very sorry this happened and as soon as we were made aware of the issue, we rectified it.

"We continue to enforce our ads policies, including restricting the promotion of content in a wide range of areas, including inappropriate content targeting minors."