Coronavirus: False claims viewed by millions on YouTube

More than a quarter of the most-viewed coronavirus videos on YouTube contain "misleading or inaccurate information", a study suggests.

In total, the misleading videos had been viewed more than 62 million times.

Among the false claims was the idea that pharmaceutical companies already have a coronavirus vaccine but are refusing to sell it.

YouTube said it was committed to reducing the spread of harmful misinformation.

The researchers suggested "good quality, accurate information" had been uploaded to YouTube by government bodies and health experts.

But they said the videos were often difficult to understand and lacked the popular appeal of YouTube stars and vloggers.

The study, published online by BMJ Global Health, looked at the most widely viewed coronavirus-related videos in English, as of 21 March.

After excluding duplicate videos, videos longer than an hour and videos that did not include relevant audio or visual material, the researchers were left with 69 to analyse.

The videos were scored on whether they presented exclusively factual information about viral spread, coronavirus symptoms, prevention and potential treatments.

Videos from government agencies scored significantly better than other sources, but were less widely viewed.

Of the 19 videos found to include misinformation:

- about a third came from entertainment news sources
- national news outlets accounted for about a quarter
- internet news sources also accounted for about a quarter
- 13% had been uploaded by independent video-makers

The report recommends that governments and health authorities should collaborate with entertainment news sources and social media influencers to make appealing, factual content that is more widely viewed.

YouTube said in a statement: "We are always interested to see research and exploring ways to partner with researchers even more closely. However it's hard to draw broad conclusions from research that uses very small sample sizes and the study itself recognises the limitations of the sample.

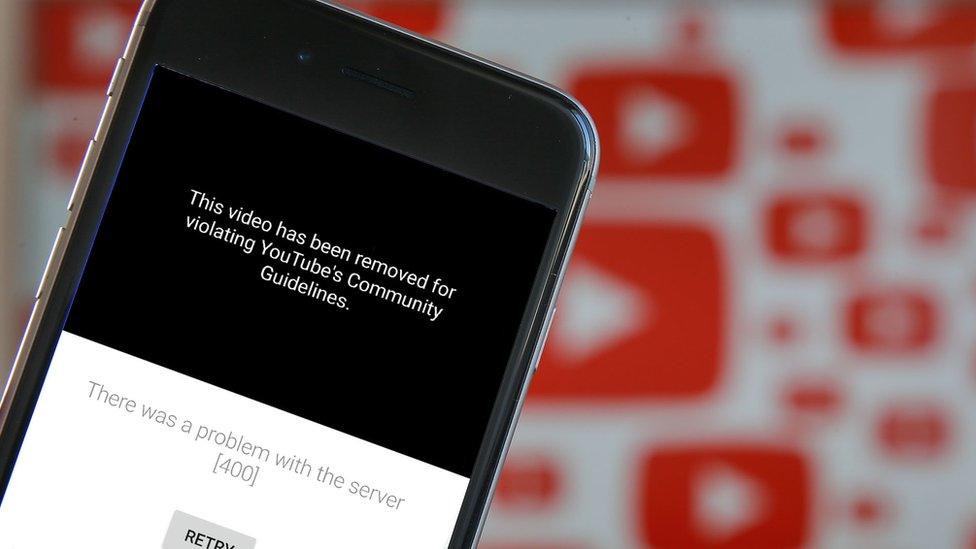

"We're committed to providing timely and helpful information at this critical time. To date we've removed thousands and thousands of videos for violating our COVID-19 policies and directed tens of billions of impressions to global and local health organisations from our home page and information panels.

"We're committed to providing timely and helpful information at this critical time, including raising authoritative content, reducing the spread of harmful misinformation and showing information panels, using NHS and World Health Organization (WHO) data, to help combat misinformation."

Analysis

by Marianna Spring, specialist disinformation and social media reporter

In recent weeks, there has been an increase in highly polished videos promoting conspiracy theories being shared on YouTube - and they prove very popular.

So these findings - although concerning - are not surprising.

The accurate information shared by trusted public health bodies on YouTube tends to be more complex.

It can lack the popular appeal of the conspiracy videos, which give misleading explanations to worried people who are looking for quick answers, or someone to blame.

That includes videos such as Plandemic, which was widely shared online last week.

High-quality production values and interviews with supposed experts can make these videos very convincing. Often facts will be presented out of context and used to draw false conclusions.

And tackling this kind of content is a game of cat-and-mouse for social media sites.

Once videos gain traction, even if they are removed, they continue to be uploaded repeatedly by other users.

It is not just alternative outlets uploading misinformation either. Whether for views or clicks, the study suggests some mainstream media outlets are also guilty of spreading misleading information.
