Online Harms bill: Warning over 'unacceptable' delay

- Published

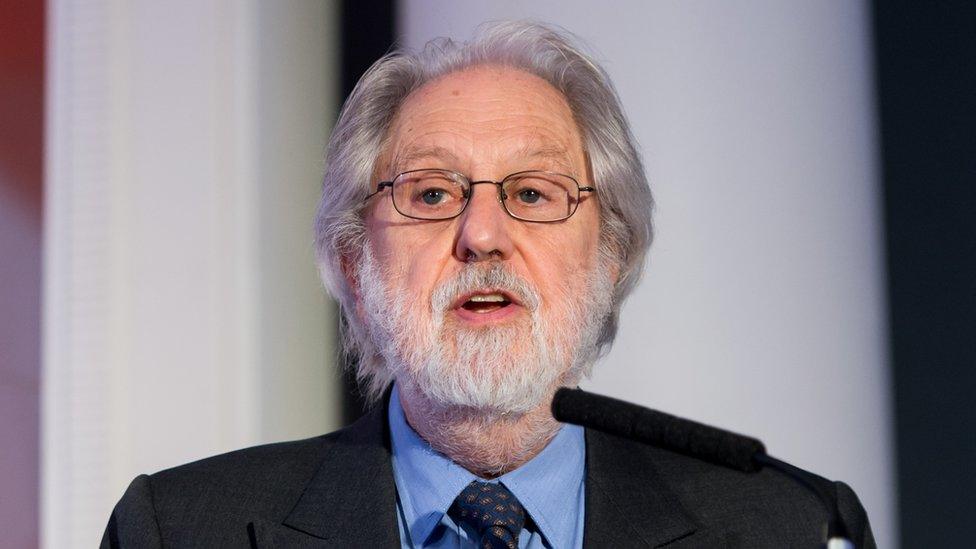

Lord Puttnam accused the government of "losing" the Online Harms Bill

The Chair of the Lords Democracy and Digital Committee has said the government's landmark online protection bill could be delayed for years.

Lord Puttnam said the Online Harms Bill may not come into effect until 2023 or 2024, after a government minister said she could not commit to bringing it to parliament next year.

"I'm afraid we laughed," he said.

The government, however, said the legislation would be introduced "as soon as possible".

The Online Harms Bill was unveiled last year amid a flurry of political action after the story of 14-year-old Molly Russell, who killed herself after viewing online images of self-harm, came to light.

It is seen as a potential tool to hold websites accountable if they fail to tackle harmful content online, but it is still at the proposal, or "White Paper", stage.

The Department for Digital, Culture, Media and Sport (DCMS) said the legislation would be ready in this parliamentary session.

But the Lords committee's report said that DCMS minister Caroline Dinenage would not commit to bringing a draft bill to parliament before the end of 2021, prompting fears of a lengthy delay.

In her evidence to the committee in May, she had warned that the Covid-19 pandemic had caused delays.

But speaking to the BBC's Today programme, Lord Puttnam said: "It's finished".

"Here's a bill that the Government paraded as being very important - and it is - which they've managed to lose somehow."

The government originally put forward the idea of online regulation in 2017, following it with the White Paper 18 months later, and a full response is now not due until the end of this year.

Lord Puttnam said a potential 2024 date for it to come into effect would be "seven years from conception - in the technology world that's two lifetimes".

Analysis

By Angus Crawford

The death of Molly Russell seemed to galvanise the debate about online harms.

Just 14, she took her own life after viewing a relentless stream of negative material on Instagram. Within days of her father Ian's decision to talk publicly about what happened, government ministers were calling for a "purge" of social media.

Tech company bosses were summoned, dressed down and warned they might be held personally responsible for harmful content. New legislation was demanded, drafted and put out to consultation.

And 18 months on, here we are, left with a warning that the new law may have to wait until 2024.

After Molly Russell took her own life, her family discovered distressing material about suicide on her Instagram account

It's left some campaigners depressed about the prospects for real change. But two remarkable things have happened this summer.

First, the campaign and hashtag #stophateforprofit. Advertisers have begun to pile out of Facebook and Instagram, the company's share price plunged 8% in one day, and Mark Zuckerberg promised to act.

And here in the UK, reported on only sparsely, the Age Appropriate Design Code was laid before Parliament. It forces online services to give children's data the highest level of protection. That includes preventing auto-recommending harmful content to young people.

Tighter regulation is coming to the sector, but it will be far from a smooth process.

Lord Puttnam was speaking following the launch of his committee's latest report, on the collapse of trust in the digital era.

In a statement, the committee said that democracy itself is threatened by a "pandemic" of misinformation online, which could be an "existential threat" to our way of life.

It said the threat of online misinformation had become even clearer in recent months during the coronavirus pandemic.

Among the report's 45 recommendations were that the regulator of social networks - mooted to be the current broadcast regulator, Ofcom - should hold platforms accountable for content they recommend to large numbers of people, once it crosses a certain threshold.

It also recommended that companies which repeatedly fail to comply should be blocked at ISP level and fined up to 4% of their global turnover, and that political advertising should be held to stricter standards.

Ofcom's new chief executive has warned that hefty fines would be part of its plans, if it is appointed as regulator.

DCMS said: "Since the start of the pandemic, specialist government units have been working around the clock to identify and rebut false information about coronavirus.

"We are also working closely with social media platforms to help them remove incorrect claims about the virus that could endanger people's health."