Tech Tent: Can we trust algorithms?

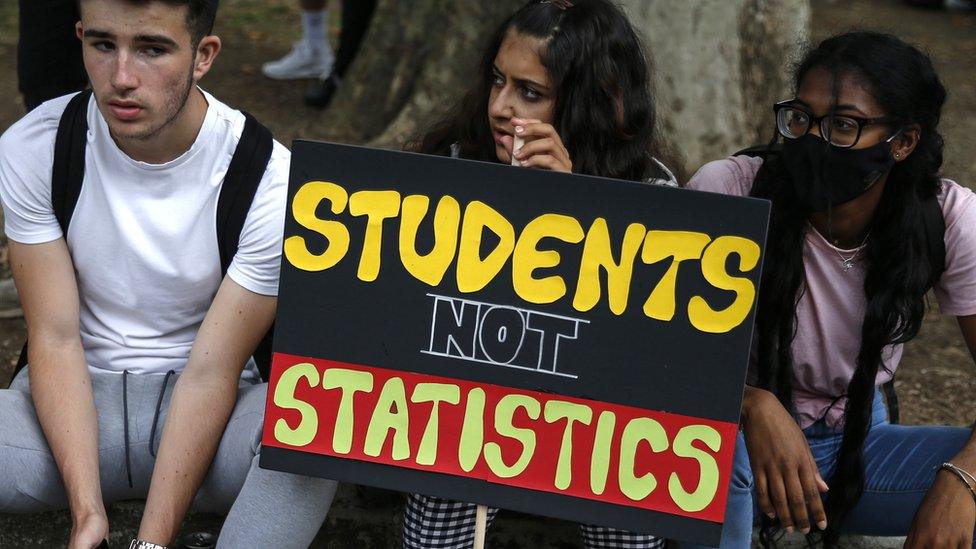

When school students took to the streets in London and other cities this week, it was a protest unlike any other.

Its target: an algorithm they said had threatened their futures.

On Tech Tent this week, we look at whether the exam grades fiasco in the UK signals a global crisis for trust in algorithms.

It was a uniquely difficult challenge: how to determine the exam grades on which university places depend when the exams had to be cancelled.

The answer? An algorithm, in effect a statistical recipe which is fed data.

In this case, the data had two parts: each school's exam performance in previous years, and this year's ranking by teachers of their pupils, from top to bottom.

It appears to have been chosen by politicians with the aim of avoiding grade inflation - that is, a big rise in top grades compared with previous years.

But what it meant was that if nobody in a school had been awarded an A grade in the previous year, no student - however outstanding - could get one this year.
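The model actually used was more elaborate than anything described here. The Python sketch below is a deliberately simplified, hypothetical illustration of why a rank-plus-history approach caps individual pupils. It assumes that grades are handed out purely by walking down the teacher's ranking and filling each grade band in proportion to the school's historical share of that grade. The function `assign_grades`, the grade list and the sample data are all invented for illustration.

```python
from typing import Dict, List

# Hypothetical grade scale, best to worst.
GRADES = ["A*", "A", "B", "C", "D", "E", "U"]


def assign_grades(ranked_pupils: List[str],
                  historical_share: Dict[str, float]) -> Dict[str, str]:
    """Simplified, hypothetical model: give pupils (listed best first, as
    ranked by teachers) grades so that this year's results reproduce the
    school's historical share of each grade."""
    n = len(ranked_pupils)
    result: Dict[str, str] = {}
    cumulative = 0.0
    idx = 0
    for grade in GRADES:
        # Fraction of pupils who historically got this grade or better.
        cumulative += historical_share.get(grade, 0.0)
        cutoff = min(round(cumulative * n), n)
        # Fill this grade band with the next pupils in rank order.
        while idx < cutoff:
            result[ranked_pupils[idx]] = grade
            idx += 1
    # Anyone left over because of rounding gets the lowest grade.
    while idx < n:
        result[ranked_pupils[idx]] = GRADES[-1]
        idx += 1
    return result


# A school whose past pupils got only Bs and Cs.
print(assign_grades(["Asha", "Ben", "Cara", "Dev"], {"B": 0.5, "C": 0.5}))
```

Because the hypothetical school has no history of A grades, even its top-ranked pupil, Asha, is given a B. Nothing about her own performance can lift her above the band her school's past results allow.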

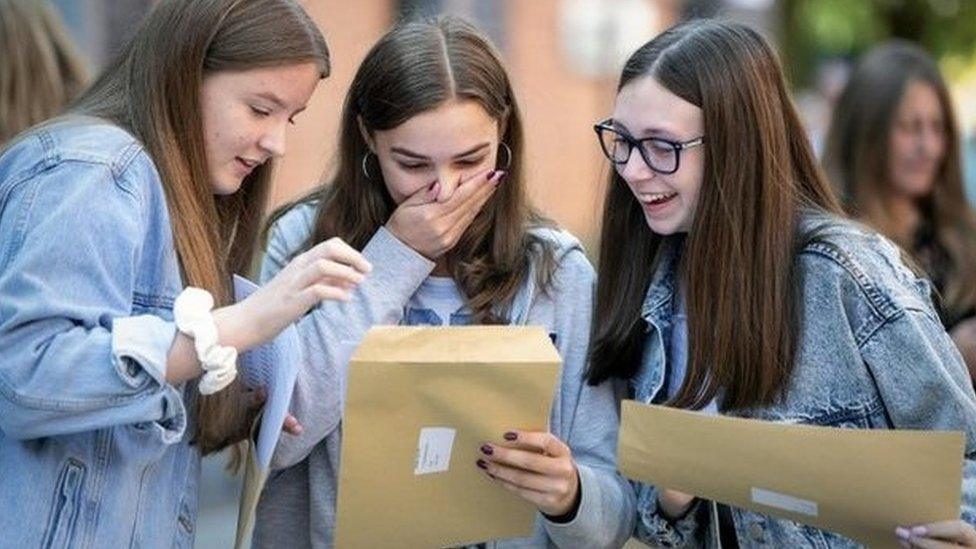

Very soon after the results were released, it became evident that there were thousands of cases where young people had been treated unfairly - even if the overall political goal had been achieved.

"Maybe it was fair on the whole to do it that way," Carly Kind, of the Ada Lovelace Institute, tells Tech Tent. "But at an individual level, many people were wronged in fact - and many people feel wronged by the algorithm."

She points out that the algorithm was about on a par with teacher accuracy in terms of marking exams - but while someone might feel wronged by a grade delivered by a human, it somehow feels worse when the mark comes from a computer.

As director of an institute set up to think about data ethics, she says that transparency is the key.

"I think there is a big lesson about the government being transparent about when it uses algorithms, what it's optimising for, and what it recognises that it's trading off in the process," she said.

If the government had said clearly back in July that its main aim was to avoid grade inflation, and that might deliver some unfairness at the fringes, would that have been acceptable? Perhaps not - but then the public debate could have taken place before the damage was done.

What most people probably do not realise is that algorithms are already making all sorts of decisions for them, from which posts appear in their Facebook News Feeds to which film is recommended to them by Netflix.

And they are increasingly being used by governments to automate processes such as spotting people likely to commit benefit fraud, or identifying problem families where intervention may be required.

They can be both effective and money-saving - if care is taken in setting the objectives and ensuring the data used is free of biases.

But Carly Kind says the A-level fiasco has been a PR nightmare for a government wanting to be data-driven: "Students protesting against an algorithm is something I didn't think I'd ever see."

She believes trust, already damaged by incidents such as the Cambridge Analytica scandal, has been further damaged.

"I actually think this has done really indelible harm to the case for using algorithmic systems in public services, which was always already a tenuous case because we don't have a lot of strong evidence yet around where these things are being used to great benefit."

Here in the UK, many nod along in recognition to a comedy sketch from more than a decade ago in which a bored-sounding woman (played by David Walliams) taps at a keyboard before telling a series of applicants: "Computer says no."

But perhaps the good news from the week's events is that people are now less likely to take no for an answer - or at least question who is behind the computer's intransigence.

- Published 21 August 2020