Facebook 'Supreme Court' to begin work before US Presidential vote


Helle Thorning-Schmidt and 19 other influential figures will act as independent arbiters of Facebook and Instagram

Facebook's Oversight Board is "opening its doors to business" in mid-October.

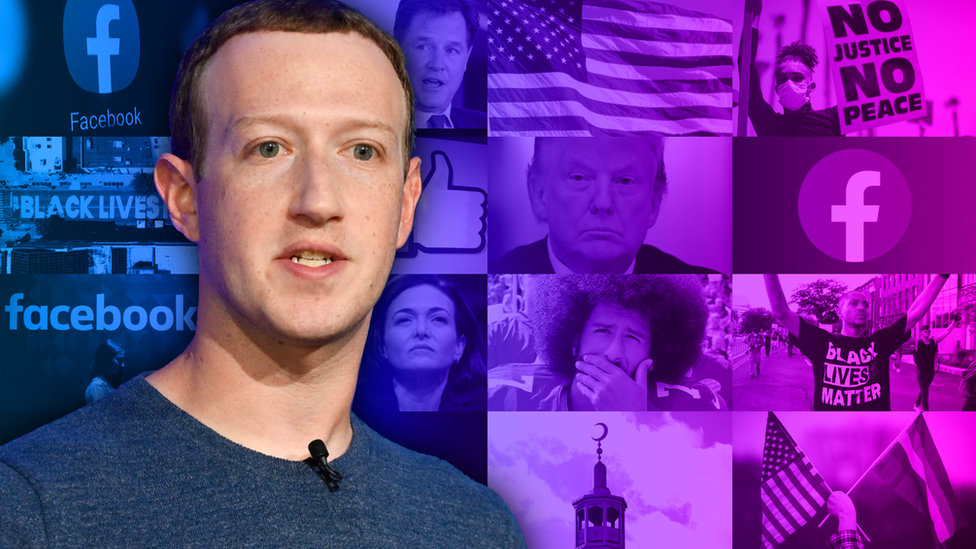

Users will be able to file appeals against posts the firm has removed from its platforms, and the board can overrule decisions made by Facebook's moderators and executives, including chief executive Mark Zuckerberg.

The timing means that some rulings could relate to the US Presidential election, which is on 3 November.

But one member of the board told the BBC it expected to act slowly at first.

"In principle, we will be able to look at issues arising connected to the election and also after the election," Helle Thorning-Schmidt, the former Prime Minister of Denmark, explained.

"But if Facebook takes something down or leaves something up the day after the election, there won't be a ruling the day after.

"That's not why we're here. We're here to take principled decisions and deliberate properly."

Earlier this week, Facebook's global affairs chief Nick Clegg told the Financial Times that if there was an "extremely chaotic and, worse still, violent set of circumstances" following a contested election result, it would act aggressively to "significantly restrict the circulation of content on our platform".

In theory, the 20-person panel - which has been likened to the US Supreme Court - could force the firm to reverse some of its judgements.

Ms Thorning-Schmidt said that the board had the capacity to examine "expedited cases" but preferred not to do so in its early days.

'Give us two years'

Facebook first announced its plans to set up the Oversight Board a year ago, and it has taken until now to select its members and arrange how it will work in practice.

The members will be paid an undisclosed sum, but are intended to serve as an independent body, and will decide which cases to look into.

Their work will cover Facebook's main platform as well as the photo-centric app Instagram, which the company owns.

In addition to user complaints, the board can also examine issues that the company has raised itself.


Facebook has said it expects cases to be resolved within 90 days, including any action it is told to take.

The panel's decisions are supposed to be binding and set a precedent for subsequent moderation decisions.

Critics of the scheme have suggested it is a "fig leaf" designed to help Facebook avoid being regulated by others.

But Ms Thorning-Schmidt said it was too early to write it off.

"It would be much better if the global community in the UN [United Nations] could come up with a content moderation system that could look into all social media platforms, but that is not going to happen," she said.

"So this is the second best.

"Give us two years to try to prove that it is better to have this board than not to have this board."

The following interview with Ms Thorning-Schmidt has been edited for brevity and clarity:

Has Facebook already consulted the board about its plans to deal with election-related posts?

It's very important to keep a distance between the Oversight Board and Facebook. So we don't have much to do with Facebook right now. And they don't consult us on what they should be doing. And we don't think they should consult us.

And just to be clear - in the days after the election, you might ask for a removed post to be put back up on Facebook or Instagram?

Yes, it is an option. But we are focused on quality rather than speed. Facebook has been criticised for moving fast and breaking things. We want to move slowly and try to create something which is sustainable in the long run. So if Facebook takes down something right after the election, or leaves something up, there won't be a ruling the day after from the Oversight Board.

In theory, the Oversight Board has the right to overrule Mark Zuckerberg himself. But since he still controls the majority of Facebook's voting shares, isn't there a risk he in turn overrules whatever you decided?

It was very, very clear to us when we were appointed that we will work with transparency and independence. If Facebook doesn't follow our decisions, it won't last very long. The obligation from Facebook, including, of course, Mark Zuckerberg, is to follow our decisions. So that is the red line for us and not up for discussion.

Mark Zuckerberg has a disproportionate share of Facebook's voting rights given that he owns a 13% stake

Presumably that means you'd resign?

I don't think we should talk about these issues. We want to give this a serious go. I'm urging everyone to look at the Oversight Board and not make the perfect the enemy of the good. This is the best we have these days to try to regulate content on social media. I have not seen any other ways of doing this. And we are all committed to giving this a real chance.

Facebook's not been short of controversies over recent years. There's been Cambridge Analytica, hate speech directed at the Rohingyas in Myanmar, a decision not to take down posts from Holocaust deniers, and a decision to take a less interventionist stance on some of President Trump's posts than Twitter has done. Which of these or other cases do you think might have turned out differently had the Oversight Board already existed?

I understand that you want to discuss current examples. And I do find that fascinating as well. But I also think it would be wrong for a board member to go into concrete issues because it would pre-empt our decisions later on. There's been some quite horrific examples in the past, and there will probably be more horrific examples in the future. I have no doubt that the board will look into fact-checking and whether there was real harm caused by content that was left up for too long, which they should have taken down.

Some critics are concerned that this is still a form of self-regulation, and what is needed is external intervention by politicians and official watchdogs.

I think these big tech firms, social media platforms, need regulation in many areas. There is a need for regulation in terms of tax issues. And there's probably also a need for regulation in terms of data protection and how long you keep data. I've argued very adamantly for a duty of care for Facebook and other social media providers. But the board is not taking the place of regulation. Perhaps rather the opposite. The more rulings and decisions we make, the more it will become clear that we need a better conversation about content, and perhaps also more regulation around content.
