Social media giants grilled on hate content


Jacob Chansley (C), also known as the QAnon Shaman, has been charged over the US Capitol riots

Social network executives have been grilled by MPs on the role their platforms played in recent events in Washington which saw a mob break into Congress.

All said that they needed to do more to monitor extremist groups and content such as conspiracy theories.

But none had any radical new policies to offer.

The government has recently set out tough new rules for how social media firms moderate content.

Facebook said it had removed 30,000 pages, events and groups related to what it called "militarised social movements" since last summer.

"We have a 24-hour operation centre where we are looking for content from groups... of citizens who might use militia-style language," said Facebook's vice president of global policy management, Monika Bickert.

She added: "We had teams that in the weeks leading up to the [events in Washington] were focused on understanding what was being planned and if it could be something that would turn into violence. We were in touch with law enforcement."

Despite its efforts, half of all designated white supremacist groups had a presence on Facebook last year, according to a study from the watchdog Tech Transparency Project.

Julian Knight MP, who chairs the Digital, Culture, Media and Sport committee, which is also scrutinising the big tech firms, asked Google's global director of information policy Derek Slater what it was doing to fight conspiracy theories.

"Do you think that it would be wise for you to adopt a new policy where you kept money on your platforms in escrow prior to its distribution so that any cause in which disinformation is found to have taken place... you could perhaps withhold that money?" he asked.

Mr Slater replied that it was an "interesting idea" and that Google was always "re-evaluating its policies", but he made no commitment to the idea.

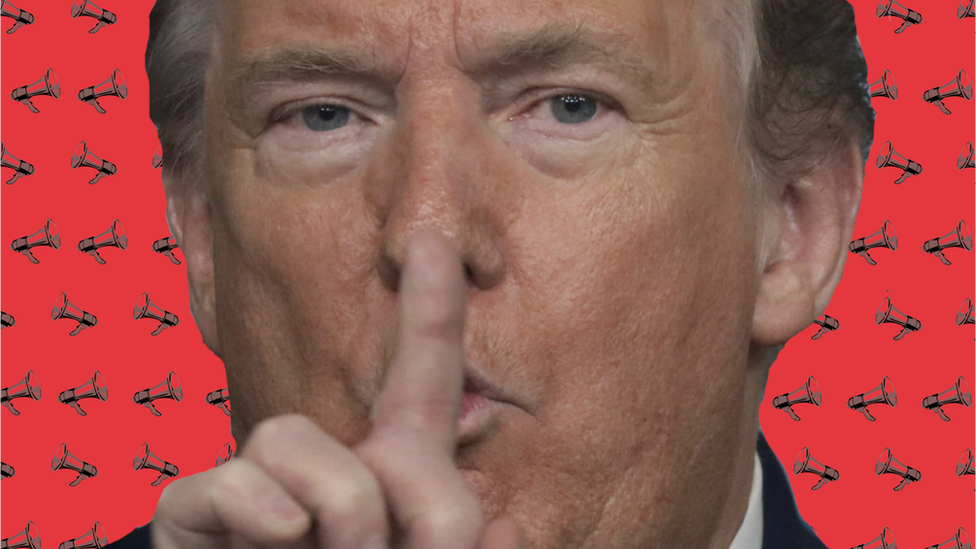

MPs also quizzed Twitter on its decision to permanently ban President Donald Trump.

The firm's head of public policy strategy Nick Pickles was asked if doing so undermined its insistence that it was a platform rather than a publisher.

It was, he said, time to "move beyond" that debate to a conversation about whether social networks were enforcing their own rules correctly.

Questioned why it had banned Mr Trump while still allowing other politicians to "sabre-rattle" on its platform, Mr Pickles added: "This is the complexity and challenge of these issues but generally content moderation is not a good way to hold governments to account."

Mr Trump's tweets were inciting violence "in real-time", he added.

TikTok's director of government relations Theo Bertram said that the video-streaming app had played less of a role in the violence in Washington and hosted fewer banned groups.

But that view was challenged by Yvette Cooper, the chair of the Home Affairs Committee.

It was, she said, in contrast to the Anti-Defamation League which found a significant amount of anti-Semitic content on the platform when it studied it last summer.
