Can AI tackle racial inequalities in healthcare?


Have you ever been asked by the doctor how much something hurts out of 10?

Pain tolerance is highly subjective, which can make it difficult for doctors to pinpoint why someone's pain may be as high as they say it is.

My five might be your seven, or my 10 could be your three.

A new study published in Nature Medicine is looking to address this.

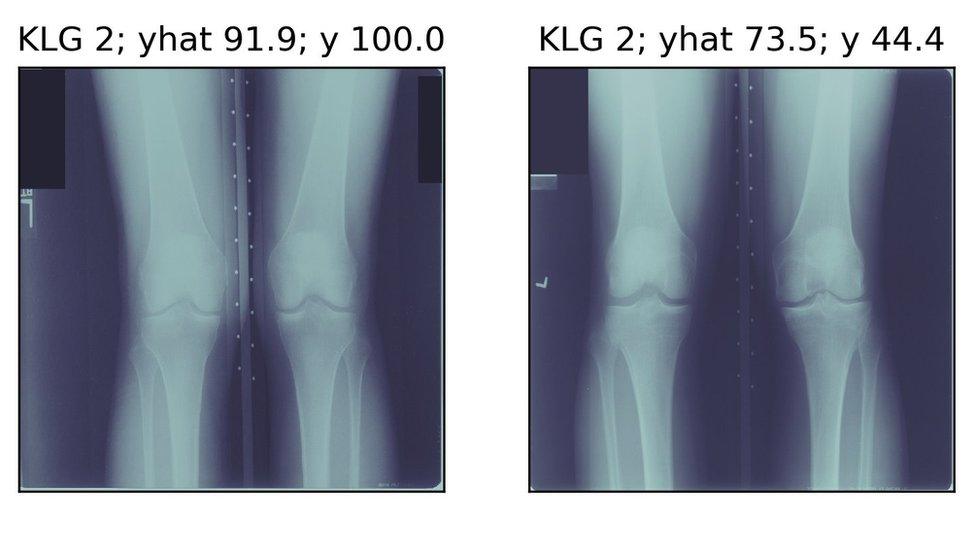

Researchers used artificial intelligence techniques to analyse knee X-rays to "predict patients' experienced pain" for those suffering from osteoarthritis of the knee.

This involved 36,369 observations gathered from 4,172 patients.

The computer analysis could pick up things that a radiologist might miss.

"We didn't train the algorithm to predict what the doctor was going to say about the X-ray," says Ziad Obermeyer, an assistant professor at Berkeley and co-author of the study.

"We trained it to predict what the patient was going to say about their own experience of pain in the knee."

The AI predicted the person X-rayed on the right felt more pain, which was indeed the case

He says the algorithm was able to explain more of the pain people were feeling.

This is important because the way doctors judge pain has been linked to discrimination and even racism.

Race bias

For years, studies have highlighted healthcare inequalities between white and black patients in the United States.

Doctors appear to be less likely to take some groups seriously when they say they are in pain. For example, studies indicate that black patients are likely to have their pain levels underestimated, which can adversely affect their treatment.

"I think it takes so much for a lot of us black folks to even get to the doctor," says Paulah Wheeler, co-founder of BLKHLTH, an organisation that works to challenge racism and its impact on black health.

"To have that situation when you're there and you're not being listened to or heard, and you're being disrespected and treated badly. You know, it just compounds the issue even further."

One focus of the study was to explore the "mystery" of why "black patients have higher levels of pain".

The study found that, among arthritis cases that radiologists judged to be similar, black patients reported more pain than white patients.

But the algorithm indicated that the cases were less similar than they appeared.

It took account of additional undiagnosed features that would be overlooked by doctors employing the commonly used radiographic grading systems.

Patients who reported severe pain and scored highly on the algorithm's own measure, but low on the official grading systems, were more likely to be black. This suggests that traditional diagnostics may be serving black patients poorly.

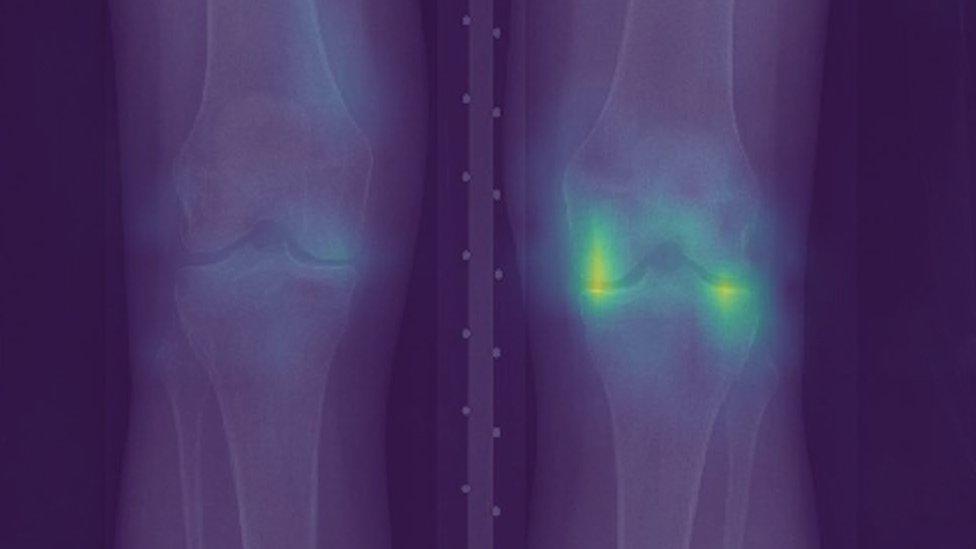

A heat map of a representative X-ray image

"What we found is that the algorithm was able to explain more of the pain that everyone was feeling," said Prof Obermeyer.

"So it just did a better job at finding things that hurt in everyone's knees.

"The benefit of that additional explanatory power was particularly great for black patients."

The same was true for patients of lower socio-economic status, those with lower levels of education, and people who do not speak English as their first language.

The researchers acknowledged two important reservations.

Because of the "black box" nature of the way deep learning works, it's not quite clear what features in the X-ray the AI was picking up on that would normally be missed.

And as a consequence, it is not yet known whether offering surgery to those who might normally miss out would bring them any additional benefit.

Racial bias

The study is interesting because AI itself has often been accused of being discriminatory.

This is often because the datasets the algorithms were trained on suffered from accidental bias.

"Imagine you have a minority population," says Jimeng Sun, a computer science professor at the University of Illinois Urbana-Champaign.

"Then your model trained on a dataset that has very few examples of that."

The resulting algorithm, he explained, would probably be less accurate when applied to the smaller group than when applied to the group making up the majority of the population.

Essentially, the charge is that AI systems often suffer from bias because they have learned to spot patterns in the habits and features of white people that may not work as well when applied to people with other skin tones.

AI MD

The use of AI in healthcare isn't meant to replace a doctor, Prof Sun tells the BBC.

It's more about assisting doctors, particularly with tasks that are often tedious or don't directly relate to patient care.

Dr Sandra Hobson, assistant professor of orthopaedics at Emory University, thinks the study has huge promise - and a lot of that has to do with the diverse data pool it used.

"Historically, studies have looked at different patients and sometimes studies didn't include women, or sometimes studies didn't include patients of different backgrounds," she explained.

"I think AI has an opportunity to help incorporate data, including patients from all backgrounds, all parts of the country around the world and help make sense of all that data together."

But she added: "It's still only one tool in the patient-physician toolbox."

Paulah Wheeler thinks the history of discrimination in healthcare has made the system inefficient and has led to years of distrust between black people and medical practitioners.

Past criticism of biased AI will make some sceptical about the technology.

But those involved hope it will help them reduce inequalities in care in the future.


- Published 3 February 2021