MyHeritage offers 'creepy' deepfake tool to reanimate dead

The website stresses that the technology is only to bring ancestors back to life

Genealogy site MyHeritage has introduced a tool which uses deepfake technology to animate the faces in photographs of dead relatives.

The firm acknowledged that some people might find the feature, called Deep Nostalgia, "creepy" while others would consider it "magical".

It said it did not include speech to avoid the creation of "deepfake people".

It comes as the UK government considers legislation on deepfake technology.

The Law Commission is considering proposals to make it illegal to create deepfake videos without consent.

MyHeritage said that it had deliberately not included speech in the feature "in order to prevent abuse, such as the creation of deepfake videos of living people".

"This feature is intended for nostalgic use, that is, to bring beloved ancestors back to life," it wrote in its FAQs about the new technology.

But it also acknowledged that "some people love the Deep Nostalgia feature and consider it magical, while others find it creepy and dislike it".

"The results can be controversial, and it's hard to stay indifferent to this technology."

Fake Lincoln

Abraham Lincoln was also brought back to life, with added colour and speech

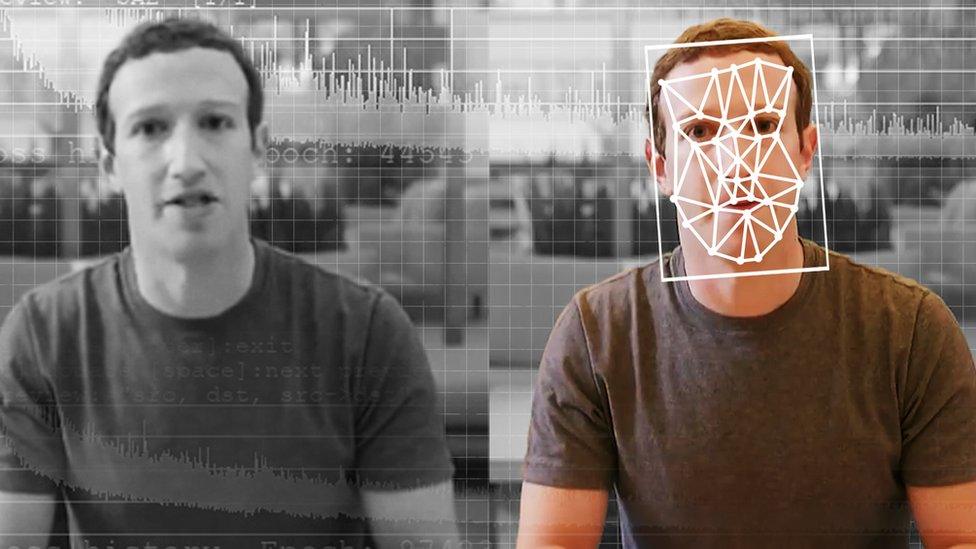

Deepfakes are computer-generated, AI-driven videos that can be created from existing photos.

The technology behind Deep Nostalgia was developed by Israeli firm D-ID. It used artificial intelligence and trained its algorithms on pre-recorded videos of living people moving their faces and gesturing.

On the MyHeritage site, historical figures such as Queen Victoria and Florence Nightingale are reanimated. And earlier this month, to mark his birthday, the firm put a video of Abraham Lincoln on YouTube using the technology.

The former US president appears in colour and is shown speaking.

People have begun posting their own reanimated ancestor videos on Twitter, with mixed responses. Some described the results as "amazing" and "emotional" while others expressed concern.

In December, Channel 4 created a deepfake Queen who delivered an alternative Christmas message, as part of a warning about how the technology could be used to spread fake news.
