TikTok loophole sees users post pornographic and violent videos

TikTok is banning users who are posting pornographic and violent videos as their profile pictures to circumvent moderation, in a unique viral trend.

BBC News alerted the app to the "Don't search this up" craze, which has accumulated nearly 50 million views.

TikTok has also banned the hashtags used to promote the offending profiles and is deleting the videos.

Users say the trend is encouraging pranksters to post the most offensive or disgusting material they can find.

BBC News has seen clips of hardcore pornography shared as profile pictures on the app, as well as an Islamic State group video of the murder of Jordanian pilot Muadh al-Kasasbeh, who was burned to death in a cage in 2015.

'Especially worrying'

Tom, a teenager from Germany, whose surname we have agreed not to use, first contacted BBC News about the trend.

He said: "I've seen gore and hardcore porn and I'm really concerned about this because so many kids use TikTok.

"I find it especially worrying that there are posts with millions of views specifically pointing out these profiles, yet it takes ages for TikTok to act."

Tom added that he had reported multiple users to the app for posting the offensive profile-picture videos.

On the platform, users can set a video, rather than a still image, as their profile picture.

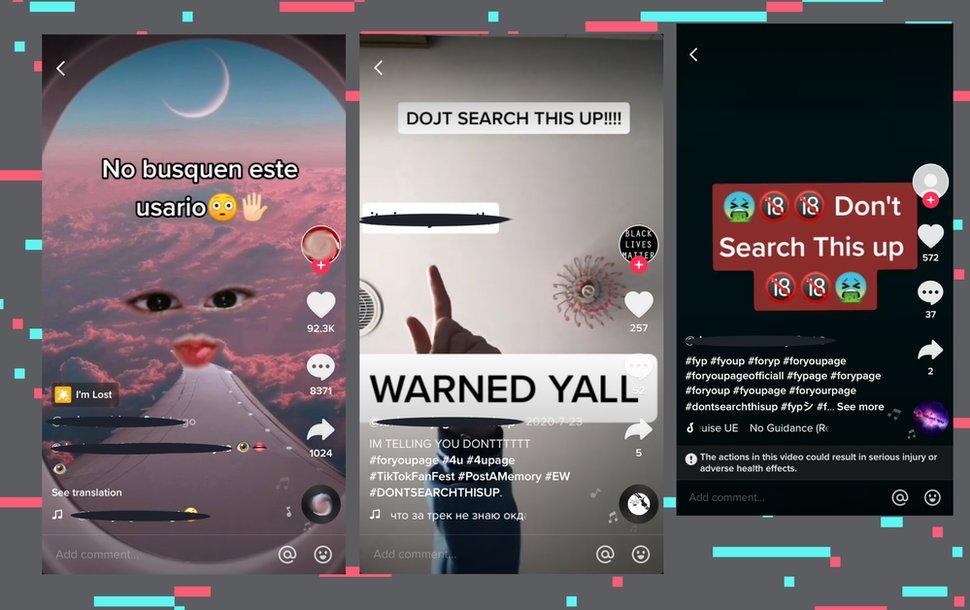

Videos about the trend are being posted in English and Spanish on the app.

The shocking videos are being posted as user profile pictures to circumvent moderation systems

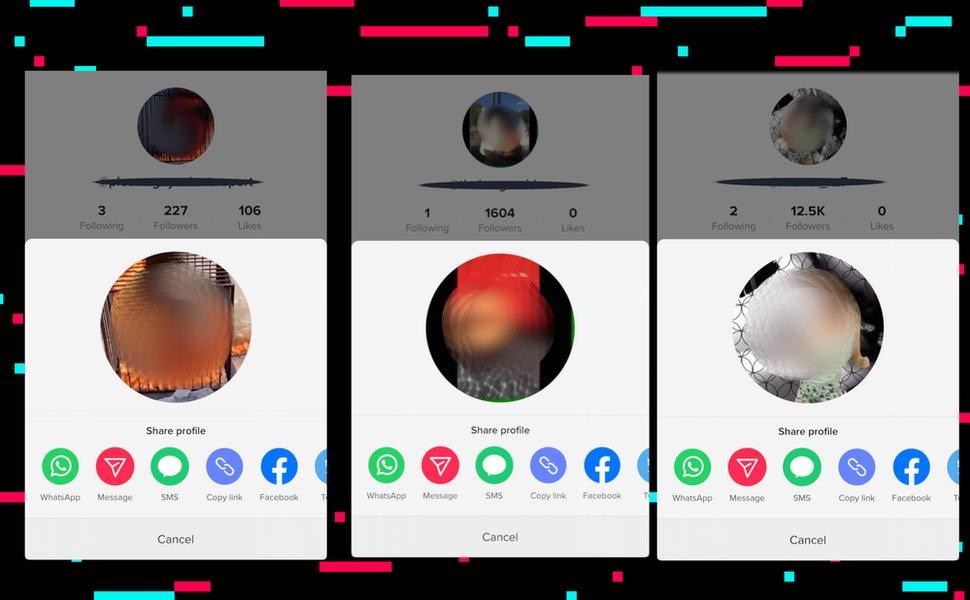

The offending accounts are often named with a random jumble of letters and words, and have no TikTok videos uploaded to them other than the one in the profile box.

Some of the accounts have tens of thousands of followers waiting for the profile picture to be changed to something shocking.

Regular users are able to find these accounts by watching otherwise mundane TikTok videos advertising usernames people should "search up" to find the most shocking clips.

And these videos are being recommended by the TikTok algorithm on the app's main For You Page.

The offending accounts are being advertised with viral videos challenging people to "search them up"

Experts say the trend is unique and highlights a previously unknown vulnerability in TikTok.

"TikTok has a rather positive reputation when it comes to trust and safety so I would not fault them for not having moderation policies and tools for a toxic phenomenon they were not aware of," Roi Carthy, from social-media moderation company L1ght, said.

"Clearly TikTok is working to stop this trend - but for users, as always, vigilance is key.

"TikTok fans should be conscious of the risks on the platform and parents should make an effort to learn what new apps their children are using, along with the content and human dynamics they are exposed to."

TikTok is one of the world's fastest growing apps, with an estimated 700 million monthly active users around the world.

A spokesman for the company said: "We have permanently banned accounts that attempted to circumvent our rules via their profile photo and we have disabled hashtags including #dontsearchthisup.

"Our safety team is continuing their analysis and we will continue to take all necessary steps to keep our community safe."

Disabling a hashtag meant the term could not be found in search or created again, TikTok added.

Its moderation teams are also understood to be analysing and removing similar copycat terms being used for the trend.

BBC News tried to speak to one user who posted an explicit video to their profile but did not receive a response.