Tech Tent: Can AI write a play?


Is artificial intelligence now so advanced that it could write a play? If so, should we be worried about where it is heading?

Those are two of the questions we ask in the latest Tech Tent podcast, which takes the temperature of AI.


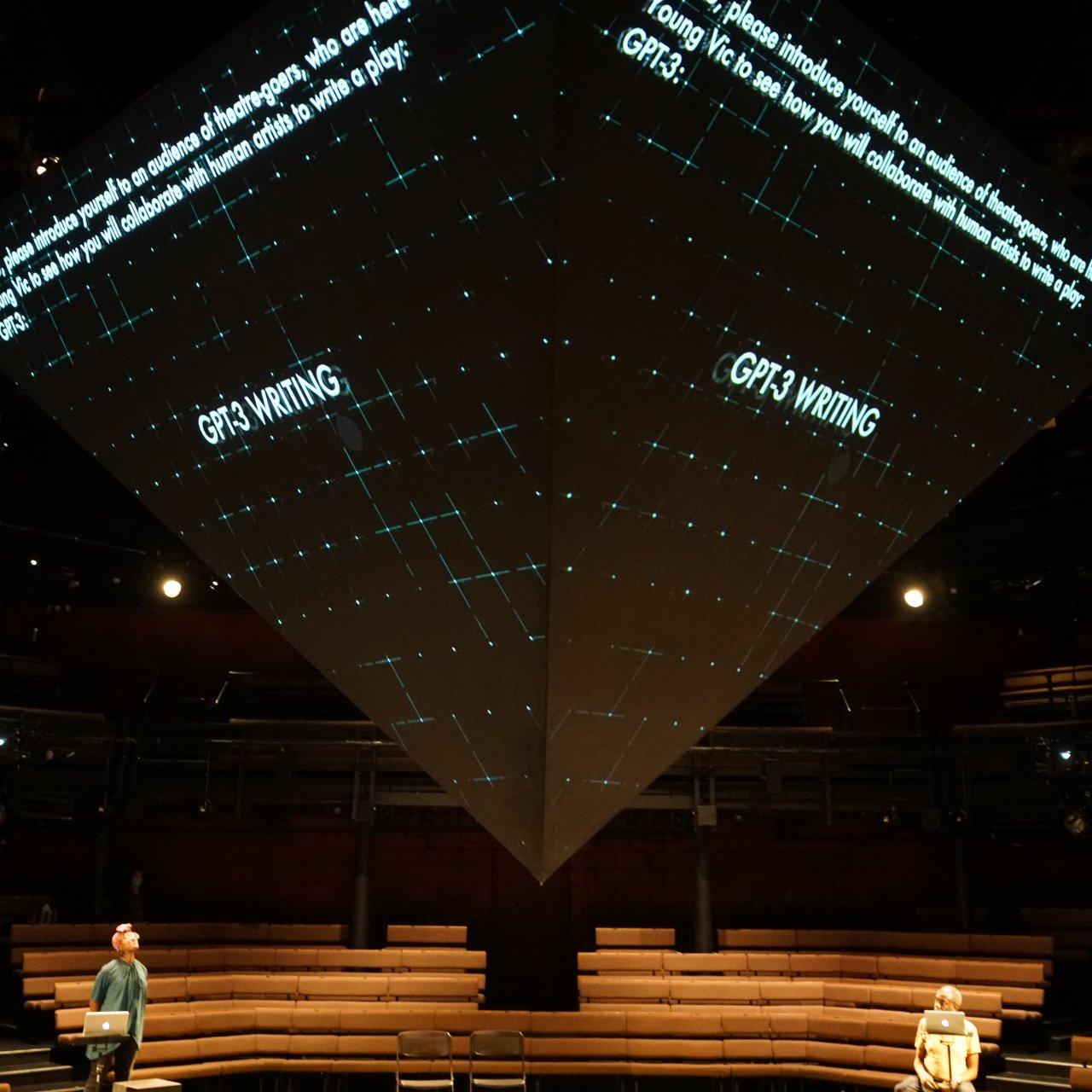

London's Young Vic theatre staged a fascinating experiment this week. Over three nights, it attempted to get GPT-3, an AI natural-language system that has gobbled up vast amounts of text from the web, to write a play.

It was not meant to do this alone. This was a collaborative exercise involving human writers Chinonyerem Odimba and Nina Segal, three actors, and director Jennifer Tang.

When I went along on the second night, Tang was guiding her team and the audience through the process, demonstrating how GPT-3 worked by getting it to undertake tasks set by us, such as "make up a Shakespeare sonnet in the style of a reality TV star".

They then set about developing a few scenes the AI had written the previous night, prompting it to expand on a scenario involving characters called "Beastman" and "Beastwoman", who appeared to be living in a post-apocalyptic world.

Here's one of the scenes GPT-3 generated:

ACTOR 1: We've been here for years, survived like bats in caves, caves we dug into the earth ourselves, we filled them with all sorts of junk to give the illusion of survival. The reality is much different.

ACTOR 2: The world changed dramatically. When the great collision hits us. The sky went dark, the land cracked, and our species was almost wiped out.

Jennifer Tang tells Tech Tent it has been a collaborative process between the humans and the machine.

"It sometimes loses its way. But that is, I guess, the creative challenge of how we meet the AI and its content, and nudge it back on course," she says.

GPT-3's words were projected above the stage

And she credits the AI with some almost-human insights: "It is surprisingly good at commenting on our humanity and our characters that we recognise... and through dramatic conventions that feel very alive on the stage."

I'm not so sure. Much of GPT-3's dialogue seemed banal and repetitive, and the real creativity came from Jennifer Tang and her team, who made us think about AI. She smiled when I made that point: "That is reassuring, I'm really glad I might have a job still," she said.

"I'm wondering now whether the place that AI has in human creativity is a way to spark ideas within us."

Prof Michael Wooldridge, a leading expert on AI at Oxford University, agrees that we should not worry that systems like GPT-3 will make human artists redundant.

"A good human playwright has some insights into the human condition and human emotions and human relationships, and can express those through the medium of the play. And GPT-3 does not have any of those things and any insights that come out of it are really the insights on our part that we are attributing to it," he explains.

Still, even if it does not look likely to win awards for its plays, this kind of artificial intelligence system does promise advances in more mundane activities that are ripe for automation.

Playwright Chinonyerem Odimba helped bring the project to life

A recent paper by academics at California's Stanford University cited GPT-3 as an example of what they called foundation models of AI: systems that can do a variety of tasks.

They argue that this represents a paradigm shift in the technology and that we need to proceed with care.

If biases are built into these systems via the huge amounts of data they depend on, those biases could have a negative effect in all sorts of areas.

As Prof Wooldridge points out, there have been dramatic advances in AI over the last decade, from instant translation to image recognition - even if the robot butlers we were promised have yet to arrive. But our worries have too often focused on the wrong dangers - that the technology will achieve consciousness and kill us, or that robots will cause mass unemployment.

Our real concern should be about the prejudices built into AI that result in unjust treatment when you apply for a job or come into contact with the police.

In other words, it is the humans we should fear, not the machines.