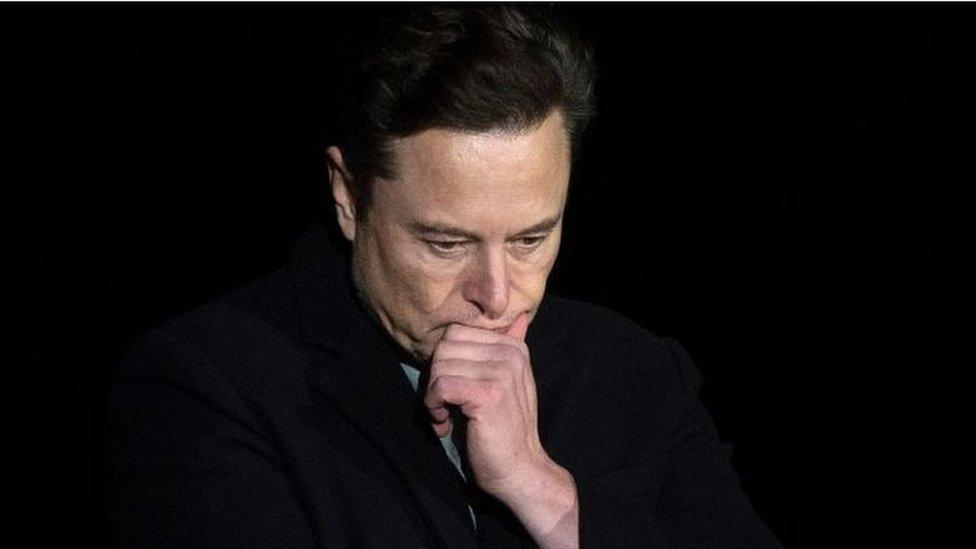

Doubts cast over Elon Musk's Twitter bot claims

Filings made by Elon Musk's legal team in his battle with Twitter have been questioned by leading bot researchers.

Botometer - an online tool that tracks spam and fake accounts - was used by Mr Musk in a countersuit against Twitter.

Using the tool, Mr Musk's team estimated that 33% of "visible accounts" on the social media platform were "false or spam accounts".

However, Botometer creator and maintainer, Kaicheng Yang, said the figure "doesn't mean anything".

Mr Yang questioned the methodology used by Mr Musk's team, and told the BBC they had not approached him before using the tool.

Mr Musk is currently in dispute with Twitter, after trying to pull out of a deal to purchase the company for $44bn (£36.6bn).

A court case is due in October in Delaware, where a judge will rule on whether Mr Musk will have to buy it.

In July, Mr Musk said he no longer wished to purchase the company, as he could not verify how many humans were on the platform.

Since then, the world's richest person has claimed repeatedly that the number of fake and spam accounts could be many times higher than Twitter has stated.

In his countersuit, made public on 5 August, he claimed a third of visible Twitter accounts, assessed by his team, were fake. Using that figure, the team estimated that a minimum of 10% of daily active users are bots.

Twitter says it estimates that fewer than 5% of its daily active users are bot accounts.

Correct threshold

Botometer is a tool that uses several indicators, like when and how often an account tweets and the content of the posts, to create a bot "score" out of five.

A score of zero indicates a Twitter account is unlikely to be a bot, and a five suggests that it is unlikely to be a human.

However, researchers say the tool does not give a definitive answer as to whether or not an account is a bot.

"In order to estimate the prevalence [of bots] you need to choose a threshold to cut the score," says Mr Yang.

"If you change the threshold from a three to a two then you will get more bots and less human. So how to choose this threshold is key to the answer of how many bots there are on the platform."
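Mr Yang's point about thresholds can be illustrated with a minimal sketch. The scores below are invented for illustration and are not Botometer output, and the `bot_share` helper is a hypothetical name, not part of any Botometer API. The sketch assumes an account counts as a bot when its score is at or above the chosen cut-off.

```python
# Hypothetical bot scores on a 0-5 scale, invented for illustration only.
scores = [0.4, 0.9, 1.3, 1.8, 2.2, 2.6, 3.1, 3.7, 4.2, 4.8]


def bot_share(scores, threshold):
    """Return the fraction of accounts scoring at or above the threshold."""
    flagged = sum(1 for s in scores if s >= threshold)
    return flagged / len(scores)


# The same ten accounts give very different "bot" totals
# depending on where the cut-off is placed.
for threshold in (2, 3, 4):
    share = bot_share(scores, threshold)
    print(f"threshold {threshold}: {share:.0%} counted as bots")
```

With this made-up data, lowering the threshold from three to two raises the share of accounts counted as bots from 40% to 60%, which is why a headline percentage is hard to interpret without the threshold behind it.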

Mr Yang says Mr Musk's countersuit does not explain what threshold it used to reach its 33% number.

"It [the countersuit] doesn't make the details clear, so he [Mr Musk] has the freedom to do whatever he wants. So the number to me, it doesn't mean anything," he said.

Robotic behaviour

The comments raise doubts about how Mr Musk's team reached its conclusions over bot numbers on the platform.

The BBC put Mr Yang's comment to Mr Musk's legal team, who have not yet responded.

In Mr Musk's countersuit, his team says: "The Musk Parties' analysis has been constrained due to the limited data that Twitter has provided and limited time in which to analyse that incomplete data."

Botometer was set up by Indiana University's Observatory on Social Media.

Clayton Davis, a data scientist who worked on the project, says the system uses machine learning, and factors like tweet regularity and linguistic variability, as well as other telltale signs of robotic behaviour.

"Humans behave in a particular way. If an account exhibits enough behaviour that is not like how humans do things then maybe it's not human," he says.

Only Twitter has the data

The researchers behind Botometer have previously tried to calculate how many spam and fake accounts are on Twitter.

In 2017, the group of academics behind the tool published a paper that estimated that between 9% and 15% of active Twitter accounts were bots.

However, Mr Davis says the report was heavily caveated and reliant on limited data.

"The only person who has a God's eye view is Twitter," Mr Davis says.

Twitter says it calculates the number of fake accounts through mainly human review. It says it picks out thousands of accounts at random each quarter and looks for bot activity.
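As a rough illustration of why a random sample of a few thousand accounts can still give a usable estimate, the sketch below applies a standard normal-approximation margin of error to a sample proportion. It is not Twitter's actual procedure, which the company has not published in this detail. The sample size and the number of flagged accounts are hypothetical, and `estimate_share` is an illustrative name.

```python
import math


def estimate_share(flagged, sample_size, z=1.96):
    """Return the sample proportion and its approximate 95% margin of error.

    Uses the normal approximation for a proportion from a simple random sample.
    """
    p = flagged / sample_size
    margin = z * math.sqrt(p * (1 - p) / sample_size)
    return p, margin


# Hypothetical figures: 3,000 accounts reviewed, 120 judged to be fake or spam.
share, margin = estimate_share(flagged=120, sample_size=3000)
print(f"estimated share: {share:.1%}, plus or minus {margin:.1%}")
```

The margin only covers sampling error. It says nothing about whether the reviewers judged each account correctly, or about how a "bot" is defined in the first place.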

Unlike other public bot analysis tools, Twitter says it also uses private data - like IP addresses, phone numbers and geolocation - to analyse whether an account is real or fake.

When it comes to Botometer, Twitter argues its approach is "extremely limited".

It gives the example of a Twitter account with no photo or location given - red flags to a public bot detector. However, the owner of the account may be someone with strong feelings about privacy.

Unsurprisingly, Twitter says its way is the best system to evaluate how many fake accounts exist.

Michael Kearney, creator of Tweet Bot or Not, another public tool for assessing bots, told the BBC the number of spam and fake accounts on Twitter is partly down to definition.

Bots tweet more

"Depending on how you define a bot, you could have anywhere from less than 1% to 20%," he says.

"I think a strict definition would be a fairly low number. You have to factor in things like bot accounts that do exist, tweet at much higher volumes," he said.

There is no universally agreed definition of a bot. For example, is a Twitter account that sends automated tweets but is operated by a human a bot?

Fake accounts are often run by humans, while accounts like weather bots are actively encouraged on Twitter.

Despite this definitional problem, Twitter says it detects and deletes more than a million bot accounts every day using automated tools.

But its systems do not catch them all, and Twitter accepts that millions of accounts slip through the net. However, it says they make up a relatively small proportion of its 217 million daily active users.

Vested interest

Some bot experts claim Twitter has a vested interest in undercounting fake accounts.

"Twitter has slightly conflicting priorities," says Mr Davis.

"On the one hand, they care about credibility. They want people to think that the engagements are real on Twitter. But they also care about having high user numbers."

The vast majority of Twitter's revenue comes from advertising, and the more daily active users it has, the more it can charge advertisers.

Mr Kearney believes Twitter could have built stronger tools for finding fake accounts.

"Twitter is perhaps not leveraging all the technology they possibly could to have the clearest answer," he says.

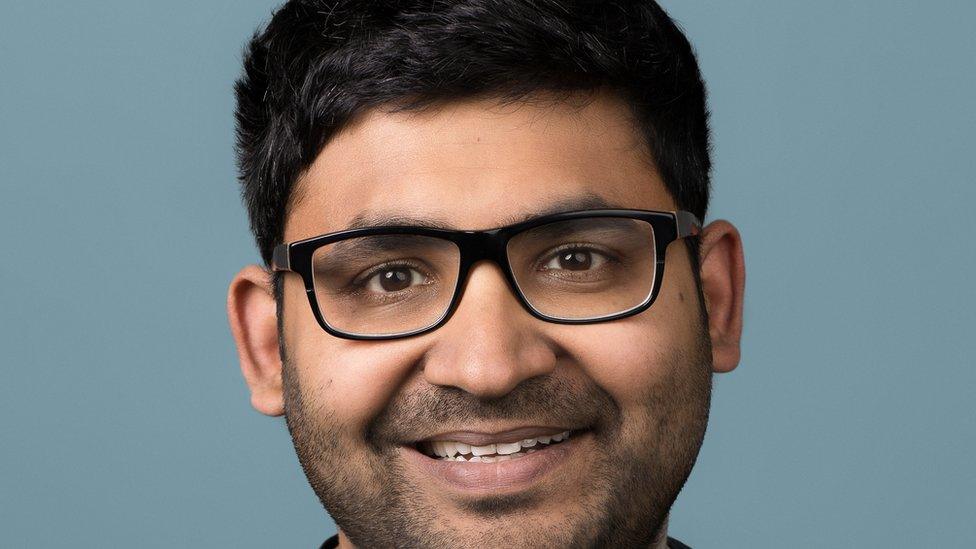

Parag Agrawal, Twitter's chief executive

Mr Musk's legal team says in its countersuit that Twitter should be using more sophisticated technology to estimate bot activity.

Mr Musk also accuses Twitter of not giving him enough user data for bot estimates to be independently checked.

Mr Yang, however, believes Twitter's methodology is relatively robust and says if he had its data, he "would probably do something similar to Twitter" to verify accounts.

But he also agreed that the characteristics of a bot need to be better defined.

"It's important to have people from both sides sit down together and go through the accounts one by one", he says - to agree on an accepted bot definition.

However, both sides appear done talking. In October, in a court in Delaware, we'll get a clearer idea of who the judge thinks is right.

- Published 9 July 2022