AI tools fail to reduce recruitment bias - study


Artificially intelligent hiring tools do not reduce bias or improve diversity, researchers say in a study.

"There is growing interest in new ways of solving problems such as interview bias," the Cambridge University researchers say in the journal Philosophy and Technology.

The use of AI is becoming widespread, but its analysis of candidate videos or applications is "pseudoscience", they argue.

However, a professional body for human resources told BBC News that AI could counter bias.

In 2020, the study notes, an international survey of 500 human-resources professionals suggested nearly a quarter were using AI for "talent acquisition, in the form of automation".

But using it to reduce bias is counter-productive and based on "a myth", Dr Kerry Mackereth, a post-doctoral researcher at the University of Cambridge's Centre for Gender Studies, told BBC News.

"These tools can't be trained to only identify job-related characteristics and strip out gender and race from the hiring process, because the kinds of attributes we think are essential for being a good employee are inherently bound up with gender and race," she said.

Some companies have also found these tools problematic, the study notes.

In 2018, for example, Amazon announced it had scrapped the development of an AI-powered recruitment engine because it could detect gender from CVs and discriminated against female applicants.

'Modern phrenology'

Of particular concern to the researchers were tools to "analyse the minutiae of a candidate's speech and bodily movements" to see how closely they resembled a company's supposed ideal employee.

Video and image analysis technology had "no scientific basis", co-author Dr Eleanor Drage told BBC News, dismissing it as "modern phrenology", the false theory that skull shape could reveal character and mental faculties.

"They say that they can know your personality from looking at your face. The idea is that, like a lie-detector test, AI can see 'through' your face to the real you," she told BBC News.

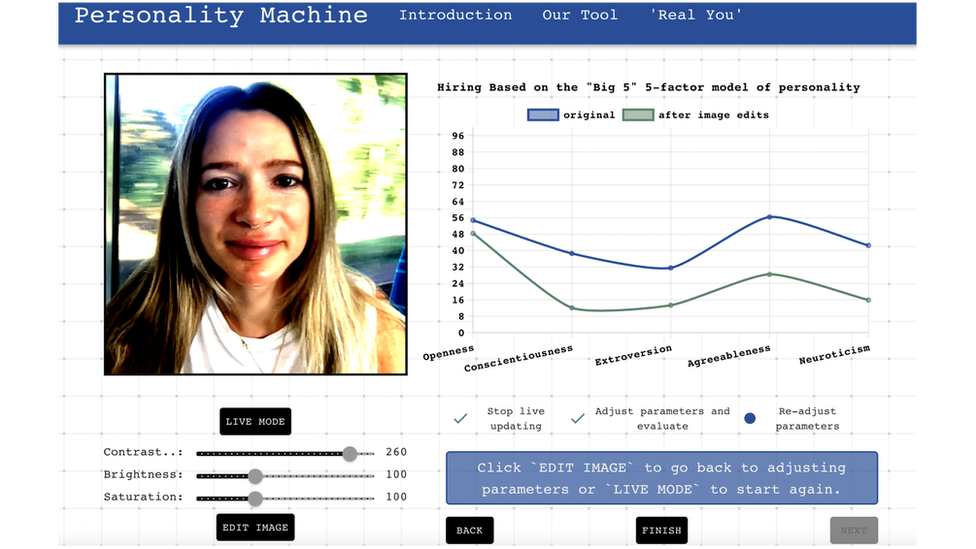

A screenshot of the Cambridge tool

With six computer-science students, the researchers built their own simplified AI recruitment tool to rate candidates' photographs for the "big five" personality traits:

- agreeableness
- extroversion
- openness
- conscientiousness
- neuroticism

But the ratings were skewed by many irrelevant variables.

"When you use our tool, you can see that your personality score changes when you alter the contrast/brightness/saturation," Dr Drage wrote.

Technology news site The Register noted that other investigations had reached a similar conclusion.

A German public broadcaster found that wearing glasses or a headscarf in a video changed a candidate's scores.

Hayfa Mohdzaini, from the Chartered Institute of Personnel and Development, told BBC News its research suggested only 8% of employers used AI to select candidates.

"AI can efficiently help increase an organisation's diversity by filtering from a larger candidate pool - but it can also miss out on lots of good candidates if the rules and training data are incomplete or inaccurate," she said.

"AI software to analyse candidates' voice and body language in recruitment is in its infancy and therefore carries both opportunities and risks."
