OpenAI: A triumph of people power

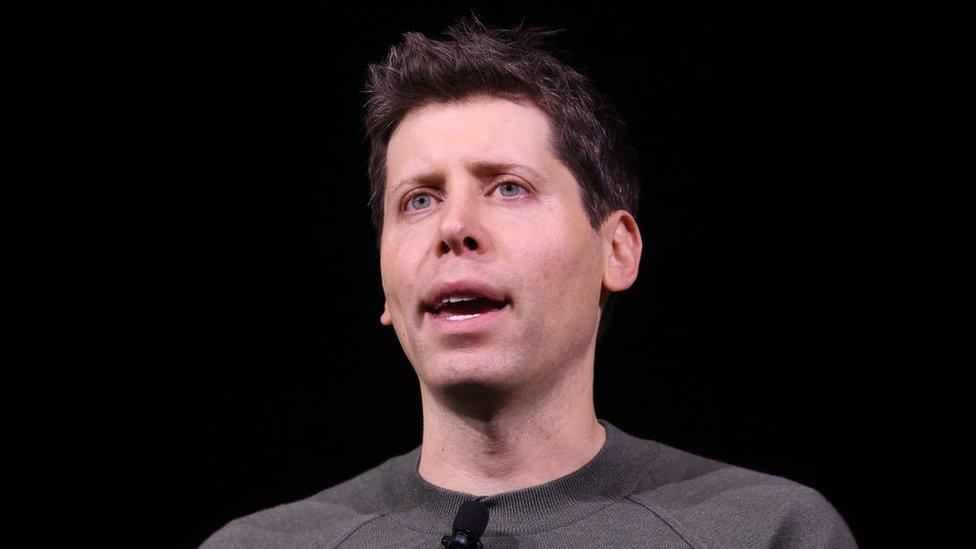

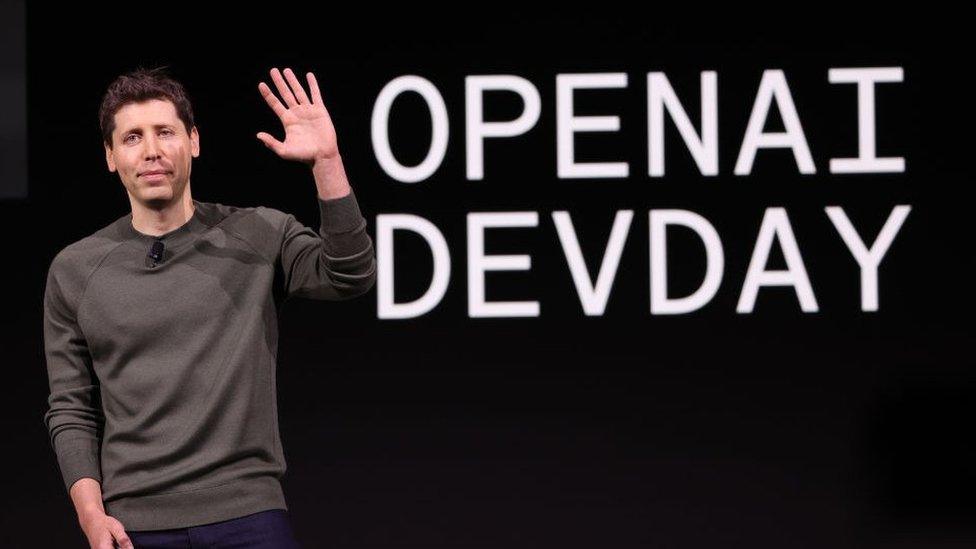

Five days after he was suddenly sacked, Sam Altman is going back to his old job as the boss of OpenAI, creator of the chatbot ChatGPT.

The emoji that's been most bandied around on my work chats so far today is the one of the exploding head.

We still don't know why he was fired in the first place. But the board of directors at OpenAI discovered something which troubled them so much they went to extraordinary lengths to oust him, acting quickly and quietly, informing almost nobody.

In a statement, they implied he had somehow not been honest with them, accusing him of not being "consistently candid" in his communications.

Despite the gravity of all that, and the appointment of two new CEOs in almost as many days, what followed was a spectacular explosion of support for Mr Altman from within the firm itself - and it's worked.

Coded messages, coloured hearts

Almost every single member of staff co-signed a letter saying they would consider quitting if Mr Altman wasn't reinstated. Chief scientist Ilya Sutskever, one of the board members who made the original decision, was one of those signatories. He later wrote on X, formerly Twitter, that he regretted his role in Mr Altman's departure.

"OpenAI is nothing without its people" was posted on X by many workers, including Mira Murati, who was at the time the interim CEO. There were also lots of coloured hearts being shared. The whole thing had the vibes of last year's big Silicon Valley meltdown: the shake-up at Twitter (now X) following Elon Musk's takeover. Laid-off Twitter staff sent a coded message then too: the saluting emoji.

Sam Altman, meanwhile, didn't hang around. By Monday, he had accepted a new job at Microsoft, OpenAI's biggest investor.

Several people muttered to me about this effectively being a Microsoft takeover without staging a takeover: in the space of two days the Seattle giant had hired Mr Altman and OpenAI co-founder Greg Brockman, and in a social media post on X, Microsoft chief technology officer Kevin Scott said the firm would also take on anyone else from OpenAI who wished to defect - sorry, join them.

But now, he's back. Someone once told me you can walk through the walls of a large corporation if they want you to, Matrix-style. And that appears to be exactly what Sam Altman has achieved here.

The root of it all

Why does this entire soap opera matter?

We might be talking about the creators of pioneering technology, but there are two very human reasons why we should all care: money and power.

Let's start with money. OpenAI was valued last month at $80bn. Investment money has been pouring in. But its overheads are also high. Someone once explained to me it costs the firm "a few pence" every time someone puts a query into ChatGPT because of the computing power involved.

A researcher in the Netherlands told me in October that if every Google search query cost the same as a chatbot one, even the super-wealthy Google would not be able to afford to run its search engine.

So it's no surprise that OpenAI looked towards profit-making, and keeping its investors on side.

And with money comes power. Despite this popcorn-grabbing distraction, let's not forget what OpenAI is actually doing: shaping a technology which could reshape the world.

The power to mess things up

AI is evolving rapidly, growing ever more powerful - and that makes it potentially more of a threat. In a very recent speech Mr Altman himself said what was coming next year from OpenAI would make the current ChatGPT look like "a quaint relative" - and we know the existing model is able to pass the bar exam taken by trainee lawyers.

There aren't many people driving this unprecedentedly disruptive tech, and Sam Altman is one of them. AI promises a smarter, more efficient future if things go well, and destruction if they do not. But as the last five days have shown, people power is still at the heart of all that innovation. And humans also still have the power to mess things up.

I'll leave you with my favourite comment about the whole debacle: "People building [artificial general intelligence] unable to predict consequences of their actions three days in advance", wrote quantum physics professor Andrzej Dragan on X.