Social media: Senior police officer calls for boycott over abuse images


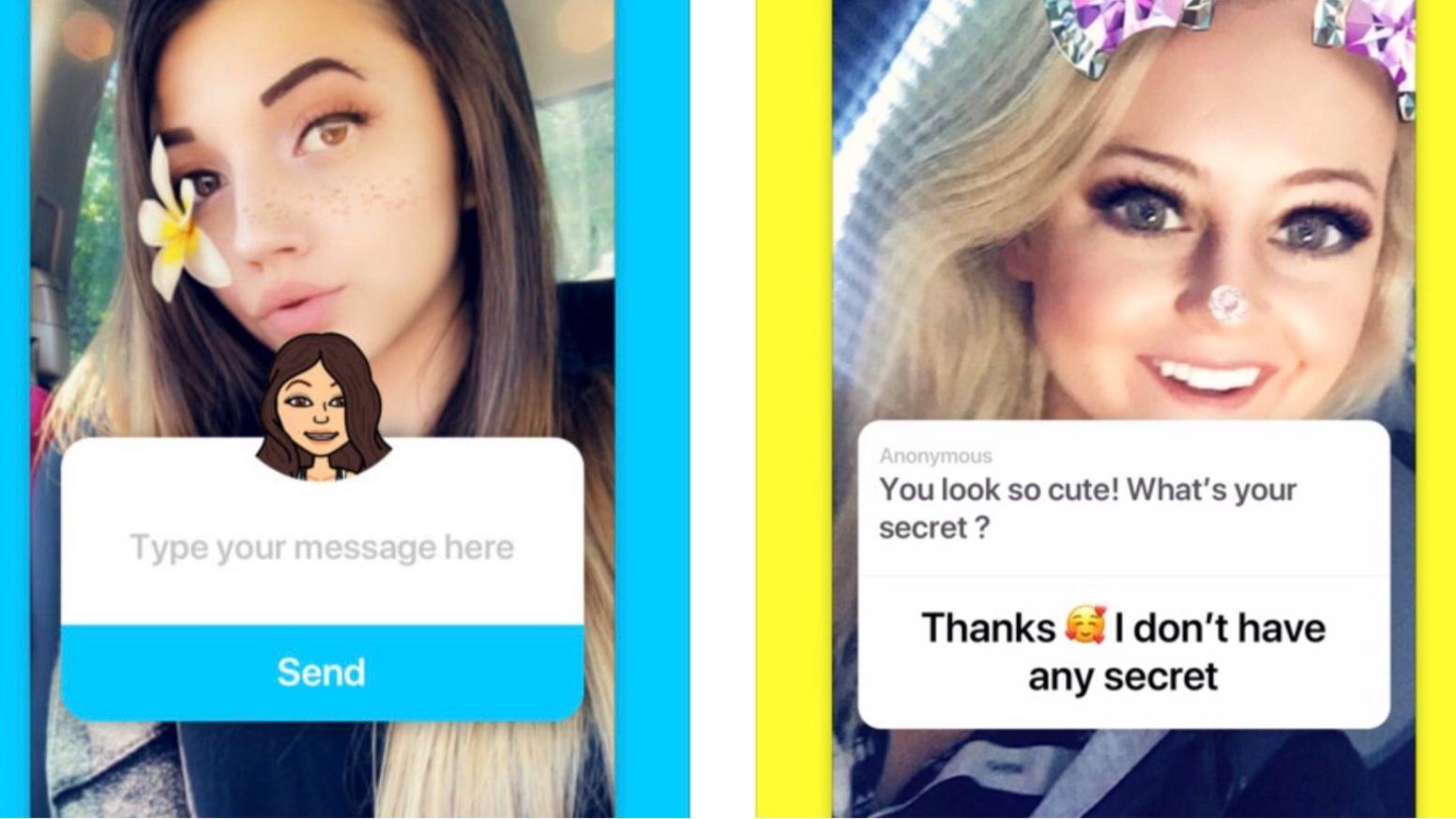

A boycott of social media sites could force firms to take action to safeguard children, a senior police officer says.

Chief Constable Simon Bailey said the companies were able to "eradicate" indecent imagery on their platforms.

Mr Bailey, the UK's lead officer for child protection, said websites would take notice of "reputational damage".

The Internet Association, which represents tech firms including Twitter and Facebook, said the industry spent millions removing abusive content.

The number of images on the police's child abuse image database has ballooned from fewer than 10,000 in the 1990s to 13.4 million currently.

Starting on Monday, the Independent Inquiry into Child Sexual Abuse will hold two weeks of hearings focusing on internet companies' responses to the problem, during which Mr Bailey and executives from Facebook, Google, Apple, BT and Microsoft will give evidence.

Mr Bailey is the chief constable of Norfolk and also leads on child protection for policing body the National Police Chiefs' Council.

He told the Press Association that the government's online harms White Paper could be a "game changer", but only if it leads to effective punitive measures.

Published last month, the White Paper sets out plans for online safety measures and is currently open for consultation.

The White Paper suggests, among other things, that internet sites could be fined or blocked if they fail to tackle issues such as terrorist propaganda and child abuse images.

Mr Bailey believes the really big platforms have the technology and funds to "pretty much eradicate" indecent imagery - but did not think they were "taking their responsibilities seriously enough".

"Ultimately, the financial penalties for some of the giants of this world are going to be an absolute drop in the ocean," he said.

"But if the brand starts to become tainted... maybe the damage to that brand will be so significant that they will feel compelled to do something in response.

"We have got to look at how we drive a conversation within our society that says 'do you know what? We are not going to use that any more, that system or that brand or that site because of what they are permitting to be hosted or what they are allowing to take place'."

'Ensure safety'

In response to the calls, the Internet Association said online companies had invested in content moderators and in developing technology to remove abusive content.

A spokesperson said the companies also work with organisations around the world, including the Internet Watch Foundation, a UK-based child abuse watchdog, to remove these images from the internet.

"Internet companies are working hard to get this right and continue to engage with the government's recent online harms White Paper, but we must ensure that any new measures are proportionate and do not damage the significant benefits that the internet brings to the UK."

Facebook says it removed 8.7 million "pieces of content" that violated its policy on child nudity or the sexual exploitation of children between July and September last year.

Its global head of safety, Antigone Davis, said: "Like Chief Constable Bailey, we think the safety of young people on the internet is of the utmost importance.

"We have strong policies, we are using the most advanced technologies to prevent abuse, we work with experts and the police, and we educate families and young people on the precautions they should take.

"Families and young people find social media valuable and we want to make sure we are doing our utmost to ensure their safety."
