Universities warn against using ChatGPT for assignments

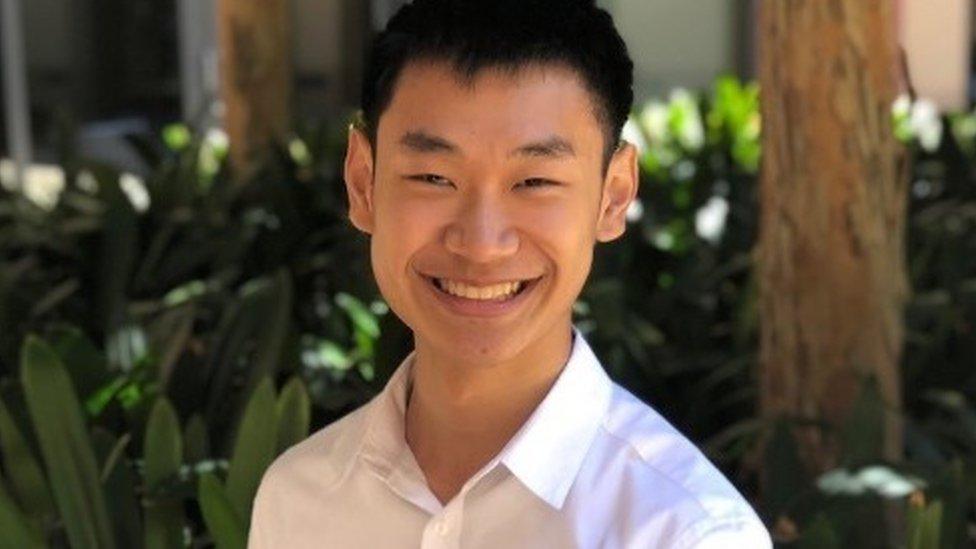

Former student Pieter Snepvangers used ChatGPT to write an essay answer as part of an experiment

Universities are warning students they could be punished for using artificial intelligence (AI) to complete essays.

"Don't take the chance," said Steve West, vice chancellor of the University of the West of England (UWE).

The warning comes after a former University of Bristol student used the ChatGPT bot in an experiment and got a 2:2 on one of his old essay questions.

"I got 65% and it took two weeks when I did it. ChatGPT was 12 marks off - that's scary," said Pieter Snepvangers.

ChatGPT, which responds to users in a conversational way, provides convincingly human answers to questions using information from the internet.

Mr Snepvangers' essay was graded at 53% by one of his former lecturers, who was fully aware it had been created using artificial intelligence.

The student asked the bot 10 questions and received a 3,500-word essay, which he then spent 10 minutes formatting for the experiment.

'Convincing' English

"ChatGPT didn't produce in-text referencing, but my lecturer said if I had done that it would have scored a high 2:2 or a 2:1," said Mr Snepvangers.

"He said the English was convincing, but it often danced around answering the actual question.

"Can you imagine what the software will be like for students starting university now by their third year?

"Universities have to switch on and find a way of adapting their assessments," said Mr Snepvangers.

His lecturer also said four out of 33 essays handed in for one module this year "looked fishy from an AI perspective."

A University of Bristol spokesperson said: "ChatGPT's unauthorised use, like that of other chatbots or artificial intelligence software, would be considered a form of cheating under our assessment regulations."

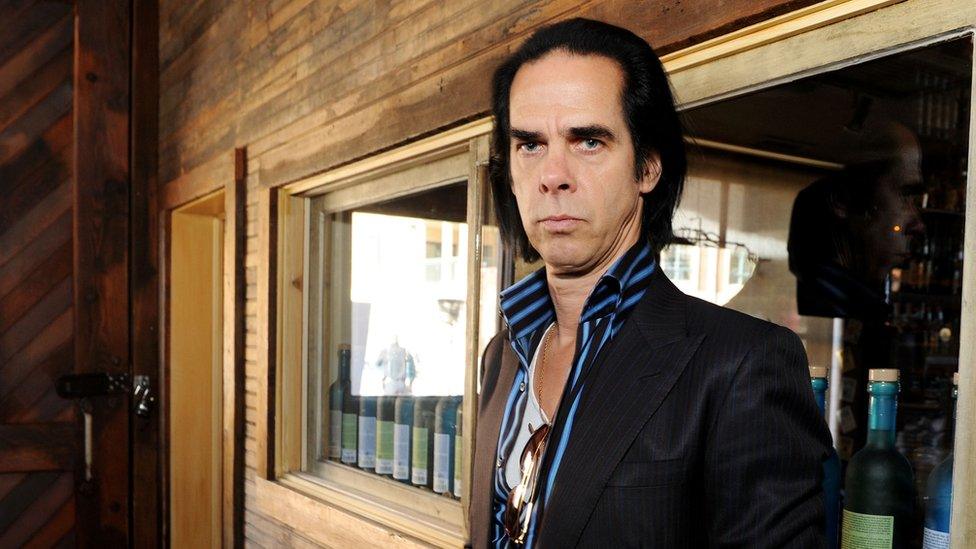

UWE vice chancellor Steve West says the technology "can't be put back in the bottle"

ChatGPT has become hugely popular since its launch in late November 2022.

It is trained on large amounts of data that enable it to make predictions about how to string words together.

Its output can be hard to detect with traditional anti-plagiarism software because it generates a brand-new answer to each question asked.

'Students don't need AI'

Vice chancellor of the University of the West of England, Steve West - who warned students that using AI to create essays would be an "assessment offence" - said he had dabbled with the software.

"I'm a foot surgeon by background so I put in a question about a complex surgical procedure and it gave me an answer.

"Would I use that answer to do that surgery? Absolutely not.

"We can't put the technology back in the bottle, but its limitations have to be understood.

"But academic staff are bright and they will spot it being used. Students - you're brighter than that, you don't need to do it," he said.

Follow BBC West on Facebook, Twitter and Instagram. Send your story ideas to: bristol@bbc.co.uk
