Inside Facebook HQ with safety team checking reports

Across one floor of Facebook's sprawling, glass-fronted building in Dublin's Docklands is a team of people working on an enormous task.

A sign reading "no visitors" at the entrance to the open plan office suggests it is sensitive work.

Clocks on the wall show the time in various cities as reports come in from Facebook users around the world.

The promise is simple: "Everything gets looked at, absolutely everything gets looked at."

"Whether someone goes to our help centre to flag that their account has been hacked or whether they want to report a page or photo because they think it's inappropriate, it'll come to this team in Dublin," explains Safety Policy Manager Julie de Balliencourt.

Despite claims that Facebook relies on algorithms, image-recognition software and automated systems, the company insists human beings make the decisions.

A lot of reports are trivial: football fans reporting rival supporters, or friends who've fallen out and want to make a point.

But at times, lives are at risk.

Facebook lets users unfollow others or hide posts from their timeline, as well as report content.

"We prioritise based on how serious something could potentially be," Julie said.

"So if we feel that someone is being bullied or there's a risk of real-world harm we are going to prioritise those reports ahead of the rest and they'll be reviewed in a matter of hours."

Facebook has faced criticism in the past for being inconsistent in the way it handles sensitive content.

Controversies over pictures of women breastfeeding and over beheading videos are examples of the company's policies proving unpopular.

But the social network insists there are hard and fast rules about what users can or can't post.

"There is a line and there is content that we won't allow, we have a whole set of rules that are available on our site," said Julie.

Jess Lathan, 26, is one of many people who posted a comment on the Newsbeat Facebook page expressing frustration at how the reporting process works.

"I've tried to report several different indecent images on Facebook, really graphic or pornographic images.

"I'm not sure how bad it has to get before something violates Facebook's terms and is actually removed," she said.

Facebook Content Policy Manager Ciara Lyden described the process of responding to rules as "a balancing act".

"It comes from the starting point of how do you keep people safe, but Facebook is also a place for sharing and we want to connect the world and allow people to be open."

But the company admits it doesn't always get it right.

"Because we have teams of people, sometimes we make mistakes.

"We're human and we're very sorry when we do make a mistake," said Julie de Balliencourt.

Facebook won't say exactly how many people work in its Community Operations team.

Newsbeat understands some of its work is outsourced to companies in various countries around the world.

While Facebook promises that every report is looked at by a human being, automated systems are also used.

"We have things in place relating to spam," Julie explains.

"Facebook is highly attractive to spammers and phishers. We have automation in place to flag fake accounts and remove them."

However, decisions to remove content reported for being violent, explicit or offensive are open to interpretation.

"Where it can become tricky, you need to take into account who is making the report, maybe the relationship they have with the person they are reporting.

"The language they speak, the country they come from - maybe if it's a photo that is posted on a page we will want to look at the whole page not just the photo in isolation."

Around 500 staff are based at Facebook in Dublin, the company's largest office outside California.

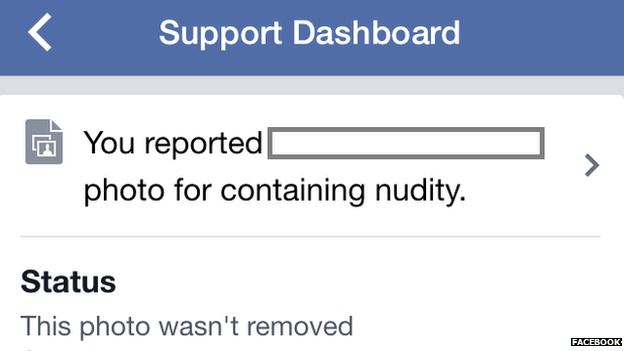

A common complaint from users is a lack of feedback on why content is or isn't removed.

Facebook admits there is room for improvement, promising changes to the reporting system.

"In the next few months we want to be more explicit and explain in much more detail why something was ignored or why something was actioned," Julie said.

But still, users should expect to be challenged by some of the content they see, suggests Julie.

"The same thing will apply if you choose to watch the news, you're going to see different types of content that are thought provoking.

"Those are real things happening in today's world that would maybe raise really good questions.

"The fact is that you see great things and sometimes you see things that shouldn't be there."

With 1.3 billion users, Facebook will never keep everyone happy.

It is clearly trying to strike a balance, but as the number of users continues to grow, that challenge may only become harder.
