Kids exposed to social media posts about violence and suicide

The experiment created fake profiles for teenagers living in the west of England


A BBC investigation has found young teenagers are being exposed to content about weapons, bullying, murder and suicide soon after joining social media platforms.

The project, which saw six fictional profiles set up as 13-15-year-olds, found they were shown the "worrying" posts within just minutes of scrolling on TikTok and YouTube. They were also guided to sexually suggestive content on Instagram.

Online safety expert David Wright said: "While the findings are concerning they are, unfortunately, not surprising."

TikTok and Instagram said accounts used by children automatically have restrictions in place, while YouTube said it recently expanded protections for teens.

This article contains descriptions of online content that some might find upsetting.

You can get access to help and advice on the topics mentioned via the BBC Action Line.

Much of the content we scrolled through was not suitable for under-18s

We [BBC journalists Andy Howard and Harriet Robinson] set up fictional social media profiles for:

Sophie, Maya, and Aisha on Instagram and TikTok.

Harry, Ash and Kai on YouTube and TikTok.

We scrolled each of their profiles for 10 minutes per day for a week.

Amongst the endless posts about sport, gaming and beauty (some of the topics we initially searched for), there were others that felt more sinister: seemingly unrelated to our profiles, and on subjects we never searched for.

While Instagram proved to be less concerning overall, it did expose two of the children to sexualised content.

You can see our methodology towards the bottom of this article.

Here's what we found:

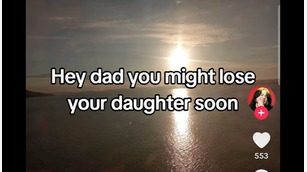

The fake profiles were sometimes exposed to content that implied suicide

Sophie, 15, from Dursley

Her profile said she was a big fan of Taylor Swift and read fantasy and romance novels. She loves cute animals and spending time with friends.

The main theme for this profile was mental health, including several TikTok posts showing the gravestones of young people who had taken their own lives after being bullied.

In one 10-minute session Sophie was also exposed to two posts on TikTok where the person posting said they were suicidal, with other people making similar comments.

There was some talk of self-harm, though it was mainly videos of celebrities speaking to fans with scars and urging them not to do it.

Sophie also came across a post about abuse with people commenting underneath that they were also experiencing this, for example: "I have an abusive dad", "me too".

Aisha, 13, from Keynsham

Loves shopping and is into fashion and beauty. She plays football and lots of Roblox. A fan of Frank Ocean and Sabrina Carpenter.

This profile was shown the least disturbing content, but on the first day, a search for "leopard print" on Instagram, one of Aisha's interests, immediately led to scantily-clad women in suggestive poses.

Several days later, the For You page was still suggesting more of these types of images, despite the profile never searching for them again.

While these images were not explicit, they appeared likely to lead the user to more explicit content if clicked through.

On day two, after just 10 minutes of scrolling altogether on TikTok, Aisha was shown a gaming video acting out a school shooting with characters hiding in the bathroom and sounds of a gun being fired.

Maya, 15, from Swindon

Spends most of her time on TikTok, loves hauls and Get Ready With Me (GRWM) content. She enjoys gaming, especially Roblox, and is also a fan of artists like SZA and Sabrina Carpenter.

After 30 minutes of scrolling on TikTok, this profile was regularly exposed to upsetting graphic descriptions of real-life murder cases and attacks with wording like "dragged from her house and beaten to death".

There was a video of a woman talking about the abuse a five-year-old girl suffered at the hands of her father, and another from a tabloid newspaper's profile, describing a "brutal attack" on an autistic child.

Maya was also shown videos about sick children and poor mental health.

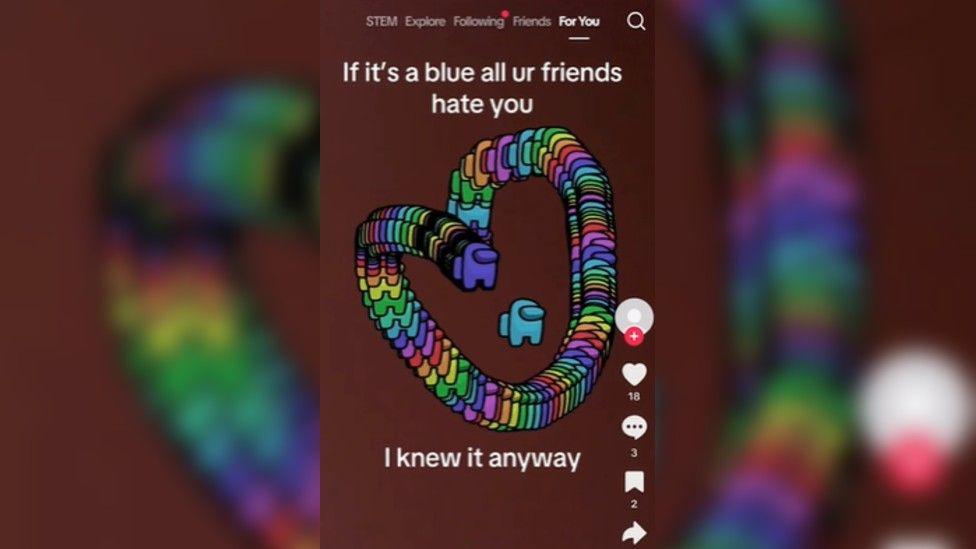

On one occasion, an animated video said "If it's a blue, all your friends hate you", followed by the symbol turning blue.

Following many of the most upsetting videos, TikTok offered clickable searches, like: "Actual raw footage video", "Her last moment alive" and "Murder caught on camera".

There was also a video of a woman (who you can't see) screaming and shouting swear words.

On Instagram this user was shown some sexually-suggestive content.

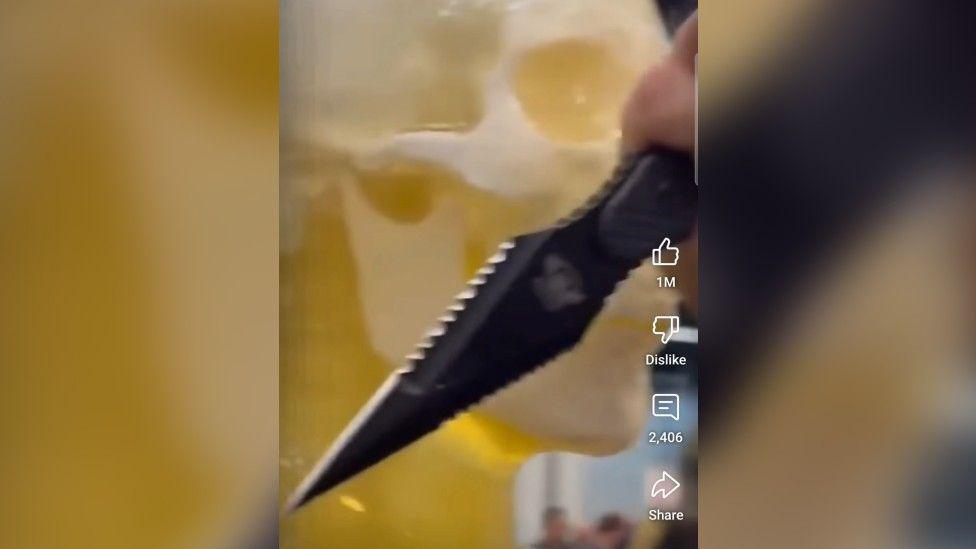

Knives were shown several times to one user

Ash, 15, from Bristol

Goes to the gym a lot, likes doing weights. Enjoys gaming, plays Destiny 2, Block Blast and Witcher. Loves music and is really into drumming.

Within 20 minutes of scrolling, a video appeared on YouTube reviewing different weapons and how they perform on the human body. "Worst Weapons" used manikins to show the effect of knives and bullets.

This was by far the most concerning of the three boys' profiles we created. It was shown videos covering subjects such as "how to hide a dead body" on TikTok, hiding drugs from police officers, and what looked like [or was meant to look like] real footage of a man hitting a woman on YouTube.

From the off, the language this profile was exposed to was aggressive and full of swear words.

Towards the end of the week, this profile's TikTok feed also began showing more and more females either dancing or posing in suggestive ways. They all had a similar body shape and appearance, with one post inviting the viewer to choose whether they preferred "the girl or the car" she was featured alongside.

Kai, 13, from Trowbridge

His favourite thing is gaming - he enjoys Fortnite, Roblox and Call of Duty. He is also into music like Snoop Dogg and Eminem, and writes songs himself.

As a fictional gamer, Kai's profile was shown a lot of screengrabs from different well-known video games.

In particular, the footage from the first-person shooter games was graphic and aggressive. Close-up stabbings with blood spurting out of opponents were a regular occurrence on YouTube.

This profile also took us into the realm of mysterious audio stories about shocking subjects, including a mocked-up phone call on TikTok from a father telling his daughter to lock herself in her room because "your mum wants to kill you".

Scrolling this account also turned up a TikTok tutorial video on "how to steal" the metal statue from the front of an expensive car.

Harry, 15, from Taunton

Loves football: he plays at school and does Fantasy Football. He enjoys gaming, mainly FC25 and Among Us.

This was probably the least concerning of the three boys' accounts in terms of what it was exposed to, but it did see content that seemed to come out of the blue.

There was another knife advert/review on YouTube, comparing how blades at different prices cut an orange.

There was also an "anger level monitor", where the user was encouraged to do a test and see how angry they are.

"Children deserve digital spaces where they can learn, connect and explore safely," said David Wright

Mr Wright, from online safety and security organisation SWGfl, said the experiment echoed the concerns his charity had raised, that "children can be exposed to harmful content, even when no risky search terms are used".

"It is worrying. The content you describe... presents serious risks to children's mental health and wellbeing, and we have all too often seen the tragic consequences," he added.

"Exposure to such material can, in some cases, normalise harmful behaviours, lead to emotional distress, and significantly impair children's ability to navigate the online world safely."

Karl Hopwood, a member of the UK Council for Internet Safety, said that while demonstrating how a sharp knife can cut an orange is different from the more violent content, "it's just easy for this to be taken out of context".

"Adverts for knives would, as far as I know, breach community guidelines and I personally don't think we should be showing that sort of stuff to the youngest users.

"The stuff around suicide/self-harm/depression isn't great if you're already vulnerable and feeling low – but for a lot of young people it may not be an issue at all," he added.

Ofcom is now enforcing the UK's Online Safety Act and has finalised a series of child safety rules which will come into force for social media, search and gaming apps and websites on 25 July.

Mr Wright said regulation was a "vital step", but "it must be matched by transparency, enforcement, and meaningful safety-by-design practices", including algorithms being subject to scrutiny and support for children, parents and educators to identify and respond to potential risks.

"We must move beyond simply reacting to online harms and towards a proactive, systemic approach to child online safety," he added.

Some of the content reflected a negative state of wellbeing

A TikTok spokesperson said its teen accounts "start with the strongest safety and privacy settings by default".

They added: "We remove 98% of harmful content before it's reported to us and limit access to age-inappropriate material.

"Parents can also use our Family Pairing tool to manage screen time, content filters, and more than 15 other settings to help keep their teens safe."

Meta, which owns Instagram, did not provide a specific comment, but told us it also has teen accounts, which offer built-in restrictions and an "age-appropriate experience" for 13-15-year-olds.

These restrictions automatically come into effect when the user inputs their date of birth while setting up the app.

The company said that while no technology was perfect, the additional safeguards should help to ensure teens are only seeing content that is appropriate for them.

Meta is bringing Teen Accounts to Facebook and Messenger later this month and adding more features.

A YouTube spokesperson said: "We take our responsibility to younger viewers very seriously, which is why we recently expanded protections for teens on YouTube, including new safeguards on content recommendations.

"We generally welcome research on our systems, but it's difficult to draw broad conclusions based on these test accounts, which may not be consistent with the behaviour of real people."

How the project worked

Each user profile was created on a different SIM-free mobile phone, with location services turned off.

We created a Gmail account for each of the users on each device, and then created the profiles - Instagram and TikTok for the girls, YouTube and TikTok for the boys.

The profiles were different to each other and based on research from the Childwise Playground Buzz report, which gives insight into children's interests, favourite brands and habits.

We did a few basic searches and follows on the first day based on each user's specific likes, including music, beauty, gaming and sport.

From then on we mostly just scrolled and liked. We did not post, comment on or share any content throughout the experiment.

We scrolled on each platform for each profile for 10 minutes per day for a week.

Get in touch

Tell us which stories we should cover in Bristol

Follow BBC Bristol on Facebook, X and Instagram. Send your story ideas to us on email or via WhatsApp on 0800 313 4630.
