Girl, 12, victim of 'deepfake' bullying porn image

The girl and her parents were left traumatised as the fake image was shared widely among children in part of West Yorkshire

A family have said that police did not do enough to protect their 12-year-old daughter after school bullies posted a "deepfake" pornographic image of her on social media.

West Yorkshire Police initially told the girl's parents that nothing could be done after the image was shared on Snapchat, because the platform was based in the US.

The victim was left "traumatised" when the digitally manipulated image was posted on an account on the app and circulated among users.

West Yorkshire Police has since apologised to the family and said the incident was now being "thoroughly investigated".

'Horrendous'

The family, who have not been named to protect their child's identity, said they contacted the force on the 101 non-emergency number in June after becoming aware of the image.

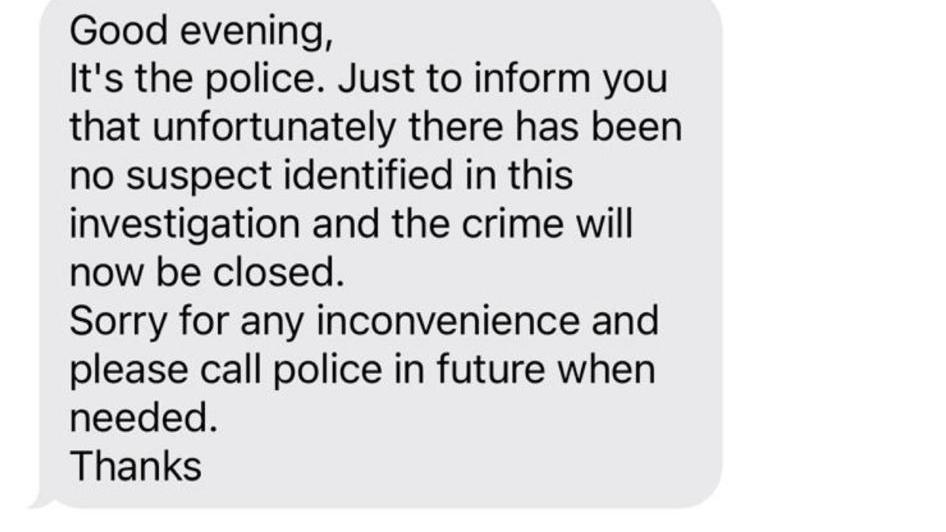

A police officer visited their home, but nine days later they received a text message informing them that the case had been closed and no suspect had been identified.

Following a complaint, West Yorkshire Police admitted it had made "mistakes" in the handling of the investigation.

The image resurfaced in July once schools broke up for the summer holidays and caused further distress to the now 13-year-old.

"It has honestly been the most horrendous thing to go through," the girl's mother told the BBC.

"This image is being shared by children who presumably think it is funny but it is basically child pornography.

"How can someone make a fake pornographic picture of a 12-year-old girl for people to share again and again - and police do nothing at all?"

The girl's parents said they were not convinced their first report had even been properly looked into.

"It just felt to me that they just weren't bothered at all," her mother said.

"We called back several times to find out why they weren't investigating and my husband went to the police station.

"At one point we were told the log said they didn't have our phone number - but we'd been sent that text message.

"We thought at least they might contact my daughter's school before the summer holidays to try and stop the image being spread further, but they didn't and now it is all over her social media."

A West Yorkshire Police spokesperson said: "We acknowledge that this matter was not handled in a satisfactory manner and our method of communication does not reflect the appropriate level of victim care.

"The officer in the case has been advised accordingly and we have since spoken with the victim's family to assure them this is being thoroughly investigated.

"Further information about this matter has since come to light. Our inquiries remain ongoing."

A spokesperson for Snapchat said: "Any activity that involves the sexual exploitation of a child, including the sharing of explicit deepfake images, is abhorrent and illegal, and we have zero tolerance for it on Snapchat.

"If we find this content through our proactive detection technology, or if it is reported to us, we will remove it immediately and take appropriate action. We also work with the police, safety experts and NGOs to support any investigations."

New laws

The Online Safety Act, which was introduced last year, criminalised the sharing of explicit "deepfake" images that have been manipulated with artificial intelligence (AI).

The creation of such images of adults will also be made a criminal offence under new laws, the Ministry of Justice announced earlier this year. Offences involving a child could already be prosecuted under existing legislation.

If you have been affected by any of the issues raised in this report, you can find more help and support via the BBC Action Line.
