What should social networks do about hate speech?


Mourners gathered outside the Emanuel African Methodist Episcopal Church in Charleston after a mass shooting that killed nine people.

Extreme racist comments posted on the discussion website Reddit in the wake of the Charleston church shooting have once again raised questions about freedom of speech and the internet. How far should social networks go in censoring hate speech?

Reddit is arguably the mainstream social network most devoted to freedom of speech. It has continued to uphold that idea even in the face of criticism - for instance, a controversy over a user who posted extreme content, including a thread devoted to pictures of underage girls. The site didn't ban the user, Violentacrez, but he did lose his job after his real identity was exposed by the website Gawker.

But the site's anti-censorship stance (or rather, its mostly anti-censorship stance - more about that later) came under fire this week after reports surfaced of posts expressing support for the man charged with murdering nine worshippers in a black church in Charleston.

The posts were made under a thread or "subreddit" called Coontown - which, as the offensive name suggests, is a corner of Reddit made up mostly of virulently racist and white supremacist posts. One commenter called the shooter "one of us". In another popular post, a moderator said "we don't advocate violence here", but went on to say the life of a black person "has no more value than the life of a flea or a tick" (most of the rest of the post, which contains at least 15 racial slurs, is unpublishable on this website).

Despite the extreme and often shocking nature of the comments, they're perfectly legal under American law - and legally Reddit is by and large an American company - according to Eugene Volokh, a law professor and the man behind a Washington Post blog on legal issues, the Volokh Conspiracy.

"American law protects people's ability to express all sorts of views, including views in support of crime or violence, without fear of government restriction," Volokh says. "But a private institution like Reddit is also free to say we don't want our facilities to be used as a means of disseminating this information."

Reddit did just that earlier this month when it banned five subreddits, including racist and anti-gay threads and a mocking forum called "Fat People Hate". In a statement, the company said it was "banning behaviour, not ideas" and that the offending threads were specifically targeting and harassing individuals.

"We want as little involvement as possible in managing these [online] interactions but will be involved when needed to protect privacy and free expression, and to prevent harassment," the statement read. "While we do not always agree with the content and views expressed on the site, we do protect the right of people to express their views."

Reddit has found itself at the centre of a debate about hate speech and what should be censored on social media

But the ban opened the site up to criticism. Some users hurled "Nazi" and other insults at Reddit CEO Ellen Pao, while others questioned why other offensive threads weren't also taken down and said the company hadn't gone far enough.

Mark Potok of the Southern Poverty Law Center, a non-profit organisation based in Alabama which tracks US hate speech, says the site has attracted people who previously hid out on other sites dedicated to extremist ideology.

"More and more people in the white supremacist world are moving out of organised groups and into more public spaces. Reddit really has become a home for some incredible websites," he says, citing not only a string of racist subreddits but a thread that actively encourages the rape of women.

The SPLC recently issued a report on Reddit's racist content: "The world of online hate, long dominated by website forums like Stormfront and its smaller neo-Nazi rival Vanguard News Network, has found a new - and wildly popular - home on the Internet."

"There's a lot of hypocrisy in Reddit banning a particular subreddit that mocks people for being fat while they allow other extreme content to trundle along just fine," Potok says. While he acknowledges that Reddit is within its rights to allow racist comments, he suggests that the site and other social networks could act more like traditional publishers, which exercise editorial control over what does or doesn't go into their publications.

But Jillian York of the Electronic Frontier Foundation questioned whether private companies are best placed to make decisions about censorship. She referred to controversies over breastfeeding pictures on social media and noted that the biggest social networks ban nudity, which might not be offensive to all or even most users.

"Legally these companies have the right to ban whatever they want, but when it comes down to it the rules are skewed and the enforcement of them is as well," she says. York says that the biggest social networks should become more proactive in thinking of ways to implement community policing - for instance, Twitter's recent announcement about shared "block" lists, which will make it easier for users to block multiple accounts.

Reddit turned down requests for an interview about this story, but in a statement told BBC Trending that it continues to be "committed to promoting free expression."

"There are some subreddits with very little viewership that get highlighted repeatedly for their content, but those are a tiny fraction of the content on the site," the company said.

Blog by Mike Wendling

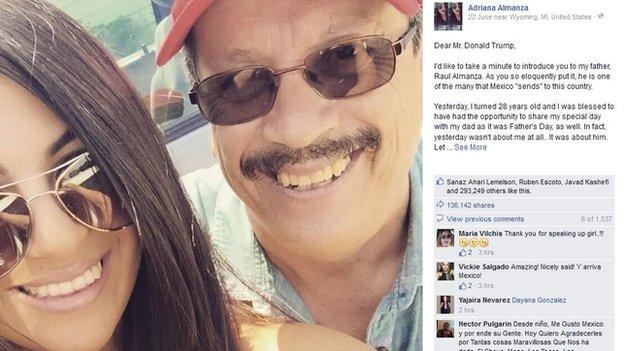


You can follow BBC Trending on Twitter @BBCtrending, and find us on Facebook. All our stories are at bbc.com/trending.