Should robots ever look like us?


Should we aim to make robots look exactly like humans or cute cartoonish versions of us?

Humanoid robots are a familiar trope in popular culture, but is making machines look like us a little bit creepy and even potentially dangerous?

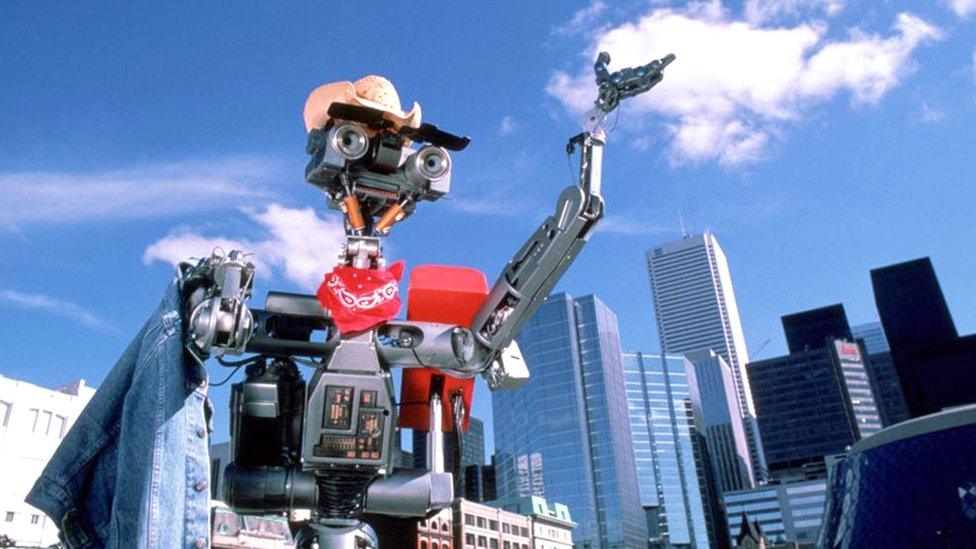

Whether it is Isaac Asimov's robotics novels, 1980s movie character Johnny 5, Hollywood's Avengers: Age of Ultron or Channel 4's sci-fi drama Humans, there has long been a fascination in popular culture with robots becoming sentient - beings that can experience feelings and human-like consciousness.

But how realistic - and desirable - is the prospect of robots that become almost indistinguishable from humans?

Sophia is one of the world's most famous robots.

Dr Ben Goertzel, who developed the AI software for Sophia, a social humanoid robot made by Hong Kong-based Hanson Robotics, believes robots should look like humans to help "break down suspicions and reservations people might have" about interacting with them.

"You will have humanoid robots because people like them," he tells the BBC. "They'd rather give orders or complain about their girlfriend to a humanoid robot rather than to the Roomba [a vacuum cleaner robot] ."

"I think [Softbank's] Pepper robot is very ugly. It's sort of like a rolling kiosk. Sophia will look you in the eye, it will mirror your facial movements. It's a different experience than looking at a screen on Pepper's chest."

Robot tells MPs: "I am Pepper and I am a resident robot at Middlesex University"

There are now 20 Sophia robots in existence, and six of them are being used around the world to give speeches and demonstrate the technology.

Companies have approached Hanson Robotics with an interest in using Sophia to greet their customers, but humanoid robots like Sophia and Pepper are still very expensive to manufacture, Dr Goertzel admits.

Many roboticists disagree with his approach.

Dr Ben Goertzel with his humanoid robot Sophia

Dor Skuler, co-founder and chief executive of Intuition Robotics, is strongly opposed to robots looking or sounding like humans.

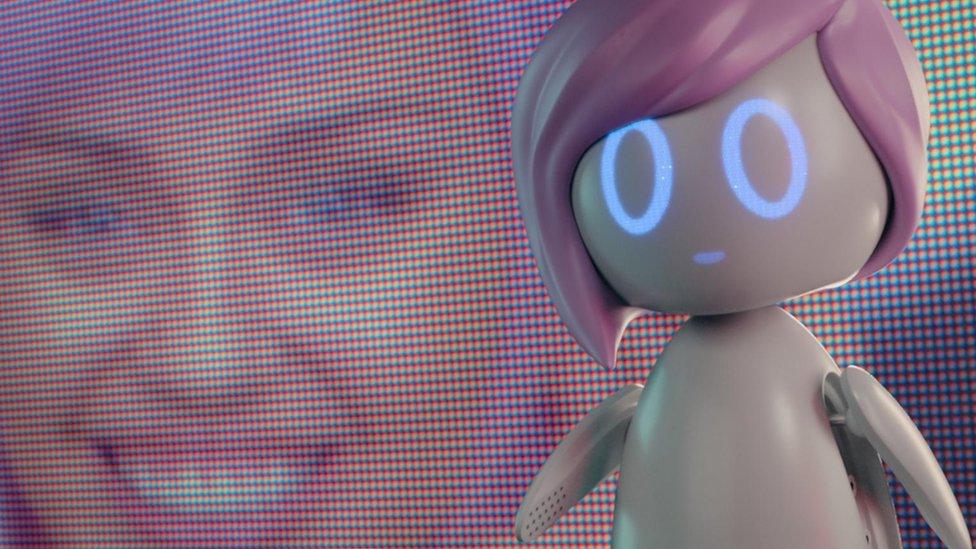

His firm makes ElliQ, a small social home robot for the elderly that aims to combat loneliness. It can talk and answer questions, but it takes pains to remind users continuously that it is a machine, not a human.

He is concerned about the "Uncanny Valley" effect - Masahiro Mori's idea that the more closely robots resemble humans, the more we'll find them creepy and revolting.

And he thinks it is ethically wrong for robots to pretend to be human.

Intuition Robotics co-founder Dor Skuler with social home robot ElliQ

People will inevitably realise that the robot isn't real, he argues, and they will then feel betrayed: "I don't see the link between trying to fool you and trying to give you what you need.

"ElliQ is cute, and it is a friend. From our research, an objectoid can still create positive affinity and alleviate loneliness without needing to pretend to be human."

Dr Reid Simmons, a research professor at Carnegie Mellon University's Robotics Institute and director of its undergraduate AI degree, agrees.

"What a lot of us believe is that it is sufficient for a robot to have rudimentary characteristics of human gaze and gesture, without having to go to hyper-realistic human form.

"I'm a strong believer that we need to stay away from the Uncanny Valley, because it sets up expectations that the technology can't deliver."

Would you be happy being interviewed by a robot?

But Dr Goertzel, who founded SingularityNet, a marketplace for AI that enables programmers to develop and sell AI apps for use in robots like Sophia, believes that robots will eventually become as smart as - if not smarter than - humans.

And the more we see humanoid versions around, the quicker we'll get used to them. Sometimes people find it easier to confide in a non-human machine, he suggests.

But will we ever reach that sci-fi point where robots gain consciousness, freedom of choice, and perhaps rights under the law?

"I think that if robots have the same intelligence as humans, then they will have the same consciousness," says Dr Goertzel.

Sentient robot Johnny 5 from the 1980s movie Short Circuit knew he was no ordinary machine

This belief is not rare within the field of artificial general intelligence (AGI), but neither is it widely held.

"Five years ago AGI was a very obscure little corner of research, but now it's being taken seriously by the big guys like Google DeepMind," he says.

"We need robots to be more compassionate than humans, but not emulate human emotional habits."

But many roboticists and computer scientists disagree:

"It's impossible," says Mr Skuler. "Emotion is a distinctly human trait. It's a trait of living things.

"Morals or self-worth cannot be reduced to a set of rules and algorithms. It's a feeling and it's based on the ethics we grow up with as people."

Gemma Chan plays an anthropomorphic robot called Mia in the Channel 4 drama Humans

But AI can learn human behaviour and understand how to respond, even if it can't experience the emotions itself, he says.

Intuition Robotics is currently collaborating with Toyota Research Institute to develop an in-car social agent that acts as a digital companion for the car. As part of keeping people in the car safe, the AI will be able to detect and understand emotions in the words they speak.

"I feel we're in the same pre-scientific stage in AI, in terms of understanding intelligence. There's all sorts of the impressive feats in machine learning, but in terms of intelligence we just don't understand enough of the same basic principles," says Dr Simmons.

"People are aiming to make AGI work, but I don't think they have the necessary understanding to get us there at this point."

In Black Mirror, Miley Cyrus plays a pop star who has her mind downloaded into a robot doll

In a recent episode of Black Mirror, Miley Cyrus plays a pop star who has her mind downloaded into an AI system so that small robot dolls called Ashley Too can be manufactured and sold as companions to teenage girls.

This will remain the stuff of fiction for ever, many experts believe.

"I would say downloading a brain or personality into a robot is not possible - we're far from being able to replicate a human brain," says Dr Simmons.

But Dr Goertzel insists that "when [scientific pioneer Nikola] Tesla introduced robots in the 1920s, no one believed him, but now we have them.

"Things will happen beyond human thinking."

Follow Technology of Business editor Matthew Wall on Twitter and Facebook

- Published 8 July 2019
