The robot assistant that can guess what you want

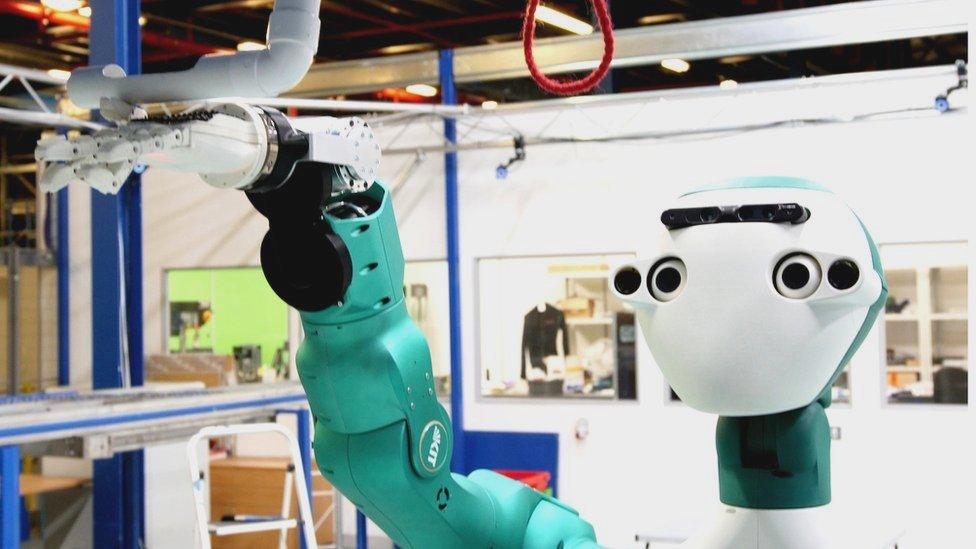

Watch the SecondHands robot in action

Thomas Roszak was working as a maintenance technician at Ocado's giant warehouse in Hatfield when he received a very unusual assignment.

His regular job involved repairing and maintaining the online supermarket's automated sorting and packing system, which puts together grocery orders from customers.

It can be physically demanding work, manipulating heavy panels and working with other pieces of bulky machinery.

In a project designed to ease that burden, Ocado Technology had been developing a robot that can recognise when a technician might need help and step in with either the right tool or help with lifting.

Mr Roszak was asked to help test the machine and, when he saw it for the first time, was taken back to his childhood.

"I grew up on movies like The Terminator, so when I saw that robot I was actually impressed with what it looked like. You can actually imagine it looks like a man."

For Mr Roszak, the robot's strength was a real asset. One test job involved removing a heavy metal plate from above head height.

A human would struggle to hold the plate for more than two or three minutes, but the robot found it "easy" to hold the plate in place, while Mr Roszak undid the bolts.

Thomas Roszak and the SecondHands robot

Mr Roszak's experience came early in the project, called SecondHands, which involved Ocado and researchers at four European universities and was funded by the European Union.

The goal was to build a collaborative robot, or cobot, based on the ARMAR-6 robot, developed at the Karlsruhe Institute of Technology in Germany.

The project ended on 4 May and, while the robot is still a long way from becoming a regular feature in workplaces, the researchers feel they have made important progress by bringing together robot arms and hands, vision systems and voice recognition, and knitting it all together with artificial intelligence (AI).

It was an ambitious project, says Graham Deacon, robotics research fellow at Ocado Technology, because they didn't want a robot that would just respond to commands; they wanted the machine to actually anticipate what the technician needed.

"The key point about the whole thing was that we will have a robot system that could kind of watch what the maintenance technicians were doing, understand what activity they were engaged in, and then proactively offer the appropriate assistance at the right point in time," he says.


So the robot's AI was trained by watching humans perform tasks.

"I would say 80% of the skills of the robot were really learned from human demonstration," says Prof Tamim Asfour from the Karlsruhe Institute of Technology (KIT).

That was important as it is much faster to train AI that way than to programme instructions from scratch, says Prof Asfour.

The team also wanted the robot to recognise speech and respond in a natural way.

"The breakthroughs made in areas like natural language interfaces and task understanding will lead to better acceptance of cobots by humans, and allow them to be used in a more easy, natural way," says Sebastian Stüker, from KIT.

To be useful, robots have to be strong, but that also makes them potentially dangerous to humans. Most industrial robot arms have to operate behind cages, because they would skittle any human that strayed into their path.

The ARMAR-6 robot has five cameras that can track the movement of humans and recognise objects

To ensure it can work side by side with a human, the SecondHands robot has a sophisticated vision system with five cameras, which track the movements of its human co-worker and identify objects such as tools.

It has also been trained to recognise when to use appropriate force. "One of the requirements in the design to build the arms... is they actually recognise collisions with the body of humans and stop immediately," says Prof Asfour.

Prof Nathan Lepora is head of the tactile robotics group at Bristol Robotics Laboratory, which was not involved in the SecondHands project, and he agrees this has been an ambitious project.

"This is enormously challenging. You are trying to reproduce capabilities that only humans currently possess: to work alongside other humans. It required expertise in building humanoid robots, online learning where the robot teaches itself to do tasks, and sophisticated computer vision to navigate and interact with the environment," he says.

The PR2 was an attempt to make a cobot a decade ago

Prof Lepora says the SecondHands project reminds him of the PR2 robot made and sold by Willow Garage a decade ago.

"However, the ARMAR-6 benefits from much more dexterous hands, and also there have been huge advances in AI over the last decade, particularly in computer vision, which are now extending into robot learning - robots learning from instructors or by themselves how to do tasks and jobs."

Asimo, made by Japanese industrial giant Honda, is perhaps the world's best-known robot. It can run and climb stairs. The eventual goal is for it to help people in the home, but Honda admits it is still not ready to do that.

Honda's Asimo has some impressive tricks but ARMAR-6 is a better communicator

But with its ability to anticipate and communicate, the ARMAR-6 robot is potentially much more useful.

"Asimo is able to respond to its name and recognises sounds associated with a falling object or collision, but ARMAR-6 can understand much more and even ask qualifying questions like 'which tool do you need?'" says Graham Deacon from Ocado Technology.

"The ARMAR-6's cognitive and speech capabilities make it much more interactive, and in a natural way - a prerequisite for a truly interactive and co-operative cobot," he adds.

So what happens next, now the five-year project is over? At the moment the ARMAR-6 has only been tested on a small set of tasks. Much more work has to be done to extend its range, and beyond that it would need certification to prove it could work safely alongside humans.

Mr Deacon declines to speculate as to when that might be, but is hopeful it will happen.

"The results of this project have shown categorically how robots can amplify the benefits of human expertise. We'll continue to build on these learnings, looking forward to a future where we can use these breakthroughs to apply them to a real world setting."