Kate rumours linked to Russian disinformation


The Princess of Wales was the subject of rumours and conspiracy theories on social media

Security researchers believe a Russia-based disinformation group amplified and added to the frenzy of social media conspiracies about the Princess of Wales's health.

In the days before Catherine revealed her cancer diagnosis in a video message, there had been a surge in online rumours and often wild claims about her health, adding to the emotional pressure on the princess and her husband Prince William.

Now security experts analysing social media data say there were hallmark signs of a co-ordinated campaign sharing and adding to the false claims and divisive content, both supporting and criticising the Princess of Wales.

The researchers say this is consistent with patterns previously seen from a Russian disinformation group.

The accounts involved were also spreading content opposing France's backing for Ukraine, suggesting a wider international context for the royal rumours.

This particular foreign influence network has a track record in this area, with a focus on eroding support for Ukraine following Russia's invasion.

Prince William and Catherine have been under pressure from social media rumours

The BBC had previously tracked down amateur sleuths and real social media users who started and drove speculation and conspiracy theories.

Even without any artificial boost, these claims had been racking up millions of views and likes. And algorithms were already promoting this social media storm about the royals, without the intervention of networks of fake accounts.

But Martin Innes, director of the Security, Crime and Intelligence Innovation Institute at Cardiff University, says his researchers found systematic attempts to further intensify the wave of rumours about the princess, with royal hashtags being shared billions of times across a range of social media platforms.

Prof Innes has suggested the involvement of the so-called "Doppelganger" Russian disinformation group. It is linked to people who have been sanctioned recently in the United States over claims they were part of a "malign influence campaign" that spread fake news.

"Their messaging around Kate appears wrapped up in their ongoing campaigns to attack France's reputation, promote the integrity of the Russian elections, and denigrate Ukraine as part of the wider war effort," says Prof Innes.

He says the operatives running this rumour machine would be seen in Russia as "political technologists".

Their approach is to fan the online flames of an existing story - tapping into disputes and doubts that already exist - which Prof Innes says is much more effective, and harder to track, than starting misinformation from scratch.

He says they "hijack" popular claims and inject more confusion and chaos. It then becomes harder to separate the co-ordinated disinformation from individuals sharing conspiracies and chasing clicks.

But the social media data, examined by the Cardiff University team, shows extreme spikes and the simultaneous sharing of messages in a way that they think is consistent with a network of fake accounts being operated.

Researchers found many new accounts sharing identically worded messages

Dr Jon Roozenbeek, an expert in disinformation at King's College London, says such Russian involvement in conspiracy theories is "topic agnostic" - in that they don't really care about the subject; it can be anything that "pushes buttons" and adds to social tensions.

He says they seek out "wedge issues" in an opportunistic way.

Showing the scale of the challenge, TikTok says it took down more than 180 million fake accounts in just three months.

Many of the accounts spreading the Catherine conspiracy theories were newly created this month, says Prof Innes. They fed off a so-called "master" account, which in this case had a name that was a variation on "master", with a cascade of other fake accounts replying to and sharing its messages and drawing in other users.

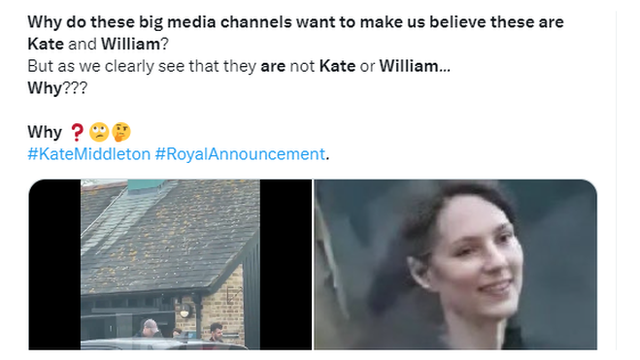

Identical phrases - such as "Why do these big media channels want to make us believe these are Kate and William?" - were churned out by multiple accounts. Although real people are known to re-share the same message on their own accounts in this way - a tactic referred to as "copypasta" - there are other clues about the accounts which suggest a more organised network.

Another phrase about Catherine was shared at the same time by what were ostensibly 365 different accounts on X, formerly Twitter. There were also new TikTok accounts, created in recent days, that seemed to put out nothing but royal rumours.

French security agencies have linked online attacks to France's support for Ukraine

The Cardiff researchers highlight an overlap with a Russia-linked fake news website, published in English, complete with a "fact checked" logo, which has a stream of ghoulish and bizarre stories about Catherine.

Also, the UK embassies in Russia and Ukraine both had to put out fake news warnings last week about claims being circulated that King Charles was dead.

When it comes to saying who is responsible for such disruptive activities, it can be difficult to attribute networks of accounts to a particular group, organisation or state.

And further muddying the waters will be all kinds of individuals, interest groups and other overseas players commenting on social media on the same topic.

The UK embassy in Moscow had to put out a fake news warning

Instead, experts in this field tend to rely on indications that a particular network is connected to an existing influence operation or particular group. Are their tactics the same? Does getting involved in this social media conversation align with their interests?

For instance, in this case one clue was a Russia-sourced video which frequently appeared in the social media exchanges over Kate, and which had previously been identified with a particular disinformation group.

The same group spreading rumours about Catherine had also been part of destabilising online campaigns in France, says Prof Innes.

President Macron, who is seen as taking an increasingly tough line over Ukraine, has faced a blizzard of hostile personal rumours.

France's state agency for tackling disinformation, Viginum, has warned of extensive networks of fake news being pumped out by Russia-linked websites and social media accounts.

The Royal Family in the UK, including Prince William, has been outspoken in support of Ukraine since Russia's invasion.

The Princess of Wales issued a video message making public her cancer diagnosis

These foreign influence operations aim to erode public trust and sow discord by amplifying and feeding conspiracy theories that are already there. That makes them much harder to trace, because there can be a combination of real people who start false claims and inauthentic accounts which then push them further.

It might begin with "internet sleuths" asking genuine questions and then fake accounts turn it into a social media storm.

Anna George, who researches extremism and conspiracy theories at the Oxford Internet Institute, says a characteristic of Russian disinformation is that it does not necessarily care what narrative is being sent out, as long as it spreads doubt about what is real and unreal: "They want to sow confusion about what people can trust."

The royal rumours spread with an unusual speed, says Ms George, getting into the mainstream more quickly than most conspiracy theories, reflecting how any external influence was tapping into high levels of public curiosity.

Prof Innes says engines of disinformation can also be a business proposition. His researchers identified the role of troll farms, thought to be based in Pakistan, that were hired to spread messages.

In the wake of the video about Catherine's cancer treatment, there seems to be a change in mood online. While committed conspiracy theorists and sleuths continue to share evidence-free claims, lots of social media users have recognised the real harm caused to those at the centre of a frenzy like this.

Last week, X's algorithms were actively recommending content to users that falsely suggested a video of the Princess of Wales out shopping really showed a body double.

But X's chief executive, Linda Yaccarino, has since said: "Her request for privacy, to protect her children and allow her to move forward seems like a reasonable request to respect."

The Department for Science, Innovation and Technology says it would engage with disinformation where it presents a threat to UK democracy.