The search for a thinking machine


Building an artificial brain that thinks like a human is complex and experts are only just beginning to get to grips with it

Some experts believe that by 2050 machines will have reached human-level intelligence.

Thanks, in part, to a new era of machine learning, computers are already starting to assimilate information from raw data in the same way as a human infant learns from the world around her.

It means we are getting machines that can, for example, teach themselves how to play computer games and get incredibly good at them (work ongoing at Google's DeepMind) and devices that can start to communicate in human-like speech, such as voice assistants on smartphones.

Computers are beginning to understand the world outside of bits and bytes.

Fei-Fei Li wants to create seeing machines that can help improve our lives

Fei-Fei Li has spent the last 15 years teaching computers how to see.

First as a PhD student and latterly as director of the computer vision lab at Stanford University, she has pursued the painstakingly difficult goal with an aim of ultimately creating the electronic eyes for robots and machines to see and, more importantly, understand their environment.

Half of all human brainpower goes into visual processing even though it is something we all do without apparent effort.

"No one tells a child how to see, especially in the early years. They learn this through real-world experiences and examples," said Ms Li in a talk at the 2015 Technology, Entertainment and Design (Ted) conference.

"If you consider a child's eyes as a pair of biological cameras, they take one picture about every 200 milliseconds, the average time an eye movement is made. So by age three, a child would have seen hundreds of millions of pictures of the real world. That's a lot of training examples," she added.
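As a rough check on that estimate, the sum can be worked through in a few lines of Python. The 200-millisecond figure comes from the talk; the 12 waking hours a day is an assumption made here for illustration, not a number Ms Li gave.

```python
# Rough estimate of how many "pictures" a child sees by age three.
MS_PER_PICTURE = 200        # one picture roughly every 200 milliseconds (from the talk)
WAKING_HOURS_PER_DAY = 12   # assumption: not stated in the talk
YEARS = 3

pictures_per_second = 1000 // MS_PER_PICTURE              # 5 pictures a second
waking_seconds = YEARS * 365 * WAKING_HOURS_PER_DAY * 3600
total_pictures = pictures_per_second * waking_seconds

print(f"{total_pictures:,}")  # prints 236,520,000
```

Under those assumptions the total comes to a little over 236 million, which is consistent with "hundreds of millions". Changing the waking hours moves the figure but not its order of magnitude.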

She decided to teach computers in a similar way.

"Instead of focusing solely on better and better algorithms, my insight was to give the algorithms the kind of training data that a child is given through experiences in both quantity and quality."

Back in 2007, Ms Li and a colleague set about the mammoth task of sorting and labelling a billion diverse and random images from the internet to offer examples of the real world for the computer - the theory being that if the machine saw enough pictures of something, a cat for example, it would be able to recognise it in real life.

They used crowdsourcing platforms such as Amazon's Mechanical Turk, calling on 50,000 workers from 167 countries to help label millions of random images of cats, planes and people.

Eventually they built ImageNet - a database of 15 million images across 22,000 classes of objects organised by everyday English words.

It has become an invaluable resource used across the world by research scientists attempting to give computers vision.

Each year Stanford runs a competition, inviting the likes of Google, Microsoft and Chinese tech giant Baidu to test how well their systems can perform using ImageNet. In the last few years they have got remarkably good at recognising images - with around a 5% error rate.

To teach the computer to recognise images, Ms Li and her team used neural networks, computer programs assembled from artificial brain cells that learn and behave in a remarkably similar way to human brains.

A neural network dedicated to interpreting pictures has anything from a few dozen to hundreds, thousands, or even millions of artificial neurons arranged in a series of layers.

Each layer will recognise different elements of the picture - one will learn that there are pixels in the picture, another layer will recognise differences in the colours, a third layer will determine its shape and so on.

By the time it gets to the top layer - and today's neural networks can contain up to 30 layers - it can make a pretty good guess at identifying the image.
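To make the layered idea concrete, here is a minimal sketch in Python. It is not the Stanford team's system: the layer sizes, the random untrained weights and the function names are all assumptions chosen for illustration. It shows only how values are passed up through a stack of layers, each neuron combining the outputs of the layer below.

```python
import math
import random


def make_layer(n_inputs, n_neurons, rng):
    """Create one layer: a list of (weights, bias) pairs, one per artificial neuron."""
    return [
        ([rng.uniform(-1.0, 1.0) for _ in range(n_inputs)], rng.uniform(-1.0, 1.0))
        for _ in range(n_neurons)
    ]


def forward_layer(layer, inputs):
    """Each neuron takes a weighted sum of its inputs and squashes it into (0, 1)."""
    outputs = []
    for weights, bias in layer:
        total = sum(w * x for w, x in zip(weights, inputs)) + bias
        outputs.append(1.0 / (1.0 + math.exp(-total)))  # sigmoid activation
    return outputs


def forward(network, pixels):
    """Pass the pixel values through every layer in turn, bottom to top."""
    activations = pixels
    for layer in network:
        activations = forward_layer(layer, activations)
    return activations


rng = random.Random(0)       # fixed seed so the untrained weights are reproducible
layer_sizes = [16, 8, 4, 2]  # hypothetical: 16 pixels in, 2 class scores out
network = [
    make_layer(n_in, n_out, rng)
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
]

pixels = [rng.random() for _ in range(layer_sizes[0])]  # stand-in for a tiny 4x4 image
scores = forward(network, pixels)
print(len(network), "layers ->", len(scores), "scores")  # prints: 3 layers -> 2 scores
```

Because the weights here are random, the two scores mean nothing. In a real system they would be adjusted over many labelled examples, such as those in ImageNet, until the top layer's guess is usually right.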

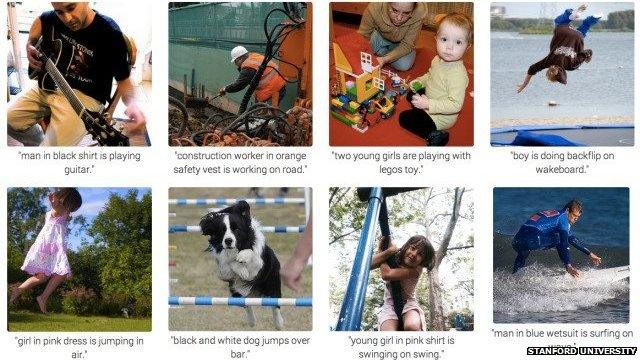

Some of the pictures the Stanford computers labelled

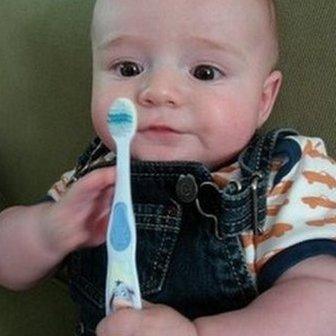

At Stanford, the image-reading machine now writes fairly accurate captions (see examples above) for a whole range of images, although it still gets things wrong: a picture of a baby holding a toothbrush, for instance, was labelled "a young boy is holding a baseball bat".

Despite a decade of hard work, it still only has the visual intelligence level of a three-year-old, said Prof Li.

And, unlike a toddler, it doesn't yet understand context.

"So far, we have taught the computer to see objects or even tell us a simple story when seeing a picture," Prof Li said.

But when she asks it to assess a picture of her own son at a family celebration the machine labels it simply: "Boy standing next to a cake".


She added: "What the computer doesn't see is that this is a special Italian cake that's only served during Easter time."

That is the next step for the laboratory - to get machines to understand whole scenes, human behaviours and the relationships between objects.

The ultimate aim is to create "seeing" robots that can assist in surgical operations, search out and rescue people in disaster zones and generally improve our lives for the better, said Ms Li.

AI history

The work on visual learning at Stanford illustrates how complex just one aspect of creating a thinking machine can be, and it comes on the back of 60 years of fitful progress in the field.

Back in 1950, pioneering computer scientist Alan Turing wrote a paper speculating about a thinking machine and the term "artificial intelligence" was coined in 1956 by Prof John McCarthy at a gathering of scientists in New Hampshire known as the Dartmouth Conference.

Alan Turing was one of the first to start thinking about the possibilities of AI

After some heady days and big developments in the 1950s and 60s, during which both the Stanford lab and one at the Massachusetts Institute of Technology were set up, it became clear that the task of creating a thinking machine was going to be a lot harder than originally thought.

There followed what was dubbed the AI winter - a period of academic dead-ends when funding for AI research dried up.

But, by the 1990s, the focus in the AI community shifted from a logic-based approach - which basically involved writing a whole lot of rules for computers to follow - to a statistical one, using huge datasets and asking computers to mine them to solve problems for themselves.

In the 2000s, faster processing power and the ready availability of vast amounts of data created a turning point for AI and the technology underpins many of the services we use today.

It allows Amazon to recommend books, Netflix to suggest movies and Google to offer up relevant search results. Smart little algorithms began trading on Wall Street - sometimes going further than they should, as in the 2010 Flash Crash when a rogue algorithm was blamed for wiping billions off the New York Stock Exchange.

It also provided the foundations for the voice assistants, such as Apple's Siri and Microsoft's Cortana, on smartphones.

At the moment such machines are learning rather than thinking, and whether a machine can ever be programmed to think is debatable, given that the nature of human thought has eluded philosophers and scientists for centuries.

And there will remain elements to the human mind - daydreaming for example - that machines will never replicate.

But increasingly they are evaluating their knowledge and improving it, and most people would agree that AI is entering a new golden age where the machine brain is only going to get smarter.

AI TIMELINE

1951 - The first neural net machine, SNARC, was built and, in the same year, Christopher Strachey wrote a checkers program and Dietrich Prinz wrote one for chess.

1957 - The General Problem Solver was invented by Allen Newell and Herbert Simon.

1958 - AI pioneer John McCarthy came up with LISP, a programming language that allowed computers to operate on themselves.

1960 - Research labs were built at MIT with a $2.2m grant from the Advanced Research Projects Agency - later known as Darpa.

1960 - Stanford AI project founded by John McCarthy.

1964 - Joseph Weizenbaum created the first chatbot, Eliza, which could fool humans even though it mostly repeated back what was said to it.

1968 - Arthur C. Clarke and Stanley Kubrick immortalised Hal, that classic vision of a machine that would match or exceed human intelligence by 2001.

1973 - A report on AI research in the UK formed the basis for the British government to discontinue support for AI in all but two universities.

1979 - The Stanford Cart became the first computer-controlled autonomous vehicle when it circumnavigated the Stanford AI lab.

1981 - Danny Hillis designed a machine that utilised parallel computing to bring new power to AI.

1980s - The backpropagation algorithm allowed neural networks to start learning from their mistakes.

1985 - Aaron, an autonomous painting robot, was shown off.

1997 - Deep Blue, IBM's chess machine, beat then world champion Garry Kasparov.

1999 - Sony launched the AIBO, one of the first artificially intelligent pet robots.

2002 - The Roomba, an autonomous vacuum cleaner, was introduced.

2011 - IBM's Watson defeated champions from TV game show Jeopardy.

2011 - Smartphones began introducing natural language voice assistants, starting with Siri and followed by Google Now and Cortana.

2014 - Stanford and Google revealed computers that could interpret images.

- Published 13 February 2014