Google AI wins second Go game against top player

Lee Se-dol lost to Google's AI software for a second day in a row

Google's AlphaGo artificial intelligence program has defeated a top Go player for a second time.

The five-game contest is being seen as a major test of what scientists and engineers have achieved in the sphere of AI.

After the match, Lee Se-dol said: "Yesterday I was surprised but today it's more than that, I am quite speechless.

"Today I feel like AlphaGo played a nearly perfect game," he said.

"If you look at how the game was played I admit it was a clear loss on my part."

Lee Se-dol is considered a champion Go player, having won numerous tournaments over a long, successful career.

In October 2015, AlphaGo beat the European Go champion, an achievement that was not expected for years.

A computer beat the world's chess champion in 1997, but Go is recognised as a more complex board game.

On Thursday, the Korea Times reported that locals had started calling AlphaGo "AI sabum" - or "master AI".

Lee Se-dol spoke to the press after the match

Three games remain, but Google only has to win once more to be named the victor.

"Playing against a machine is very different from an actual human opponent," world champion Lee Se-dol told the BBC ahead of the first match.

"Normally, you can sense your opponent's breathing, their energy. And lots of times you make decisions which are dependent on the physical reactions of the person you're playing against.

"With a machine, you can't do that."

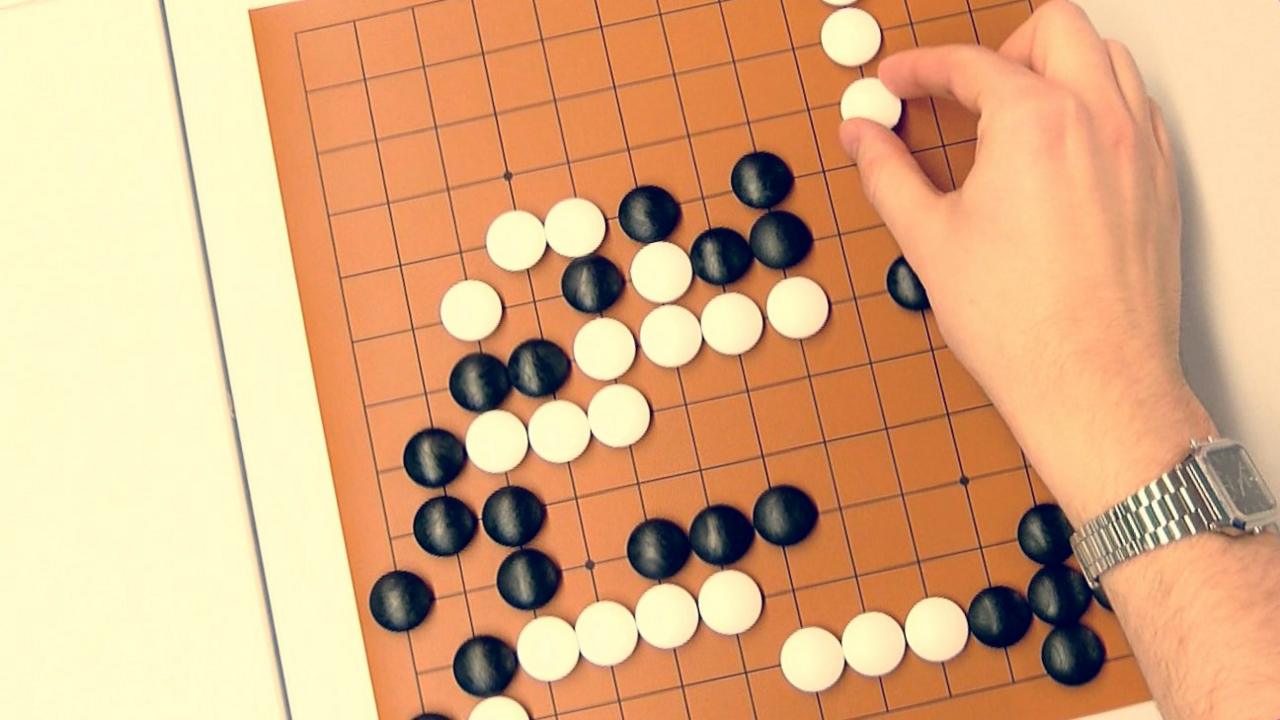

What is Go?

A brief guide to Go

Go is thought to date back to ancient China, several thousand years ago.

Using black-and-white stones on a grid, players gain the upper hand by surrounding their opponent's pieces with their own.

The rules are simpler than those of chess, but a player typically has a choice of 200 moves compared with about 20 in chess.

There are more possible positions in Go than atoms in the universe, according to DeepMind's team.

It can be very difficult to determine who is winning, and many of the top human players rely on instinct.
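The scale behind those figures can be illustrated with some rough arithmetic. The sketch below is only an illustration, not DeepMind's own calculation: it assumes a standard 19x19 board and a commonly quoted estimate of about 10^80 atoms in the observable universe, neither of which is stated in this article, and the `sequences` helper is a hypothetical name introduced for the example.

```python
# Rough, illustrative arithmetic only. The constants are assumptions,
# not figures taken from the article or from DeepMind.
BOARD_POINTS = 19 * 19        # assumed standard full-size Go board
ATOMS_IN_UNIVERSE = 10 ** 80  # commonly quoted order-of-magnitude estimate

# Each point can be empty, black or white. This is an upper bound that
# also counts arrangements the rules of Go would not allow.
position_upper_bound = 3 ** BOARD_POINTS


def sequences(choices_per_move: int, depth: int) -> int:
    """Number of distinct move sequences of a given length (hypothetical helper)."""
    return choices_per_move ** depth


if __name__ == "__main__":
    print("Bound exceeds atom estimate:", position_upper_bound > ATOMS_IN_UNIVERSE)
    print("Digits in the bound:", len(str(position_upper_bound)))
    # Looking four moves ahead with about 20 choices (chess) v about 200 (Go)
    print("Chess-like sequences:", sequences(20, 4))
    print("Go-like sequences:", sequences(200, 4))
```

With ten times as many choices at each turn, looking just four moves ahead already produces 10,000 times as many sequences to consider, which is one way to see why searching every possibility is impractical in Go.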

Learning from mistakes

Google's AlphaGo was developed by British computer company DeepMind, which was bought by Google in 2014.

Go champion Lee Se-dol says he is not sure he will be the "clear winner"

The computer program first studied common patterns that are repeated in past games, Demis Hassabis, DeepMind chief executive, explained to the BBC.

"After it's learned that, it's got to reasonable standards by looking at professional games. It then played itself, different versions of itself millions and millions of times and each time get incrementally slightly better - it learns from its mistakes."

Learning and improving from its own matchplay experience means the supercomputer is now even stronger than when it beat the European champion late last year.

Man v machine - milestones in AI

1956 - The term "artificial intelligence" is coined for a conference at Dartmouth College.

1973 - A damning report from Professor Sir James Lighthill says machines will only ever be capable of an "experienced amateur" level of chess.

1990 - AI scientist Rodney Brooks publishes a seminal paper titled Elephants Don't Play Chess, setting out a new vision inspired by advances in neuroscience.

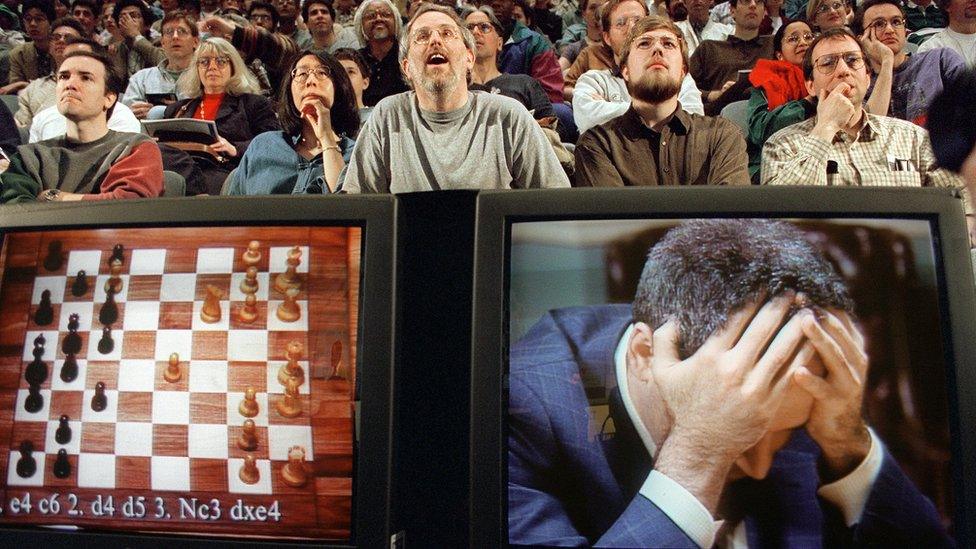

1997 - Chess supercomputer Deep Blue - capable of evaluating up to 200 million positions per second - beats world chess champion Garry Kasparov (pictured).

2011 - IBM's Watson takes on US quiz show Jeopardy's two all-time best performers, answering riddles and complex questions. Watson trounces them.
