Tay: Microsoft issues apology over racist chatbot fiasco

The AI was taught to talk like a teenager

Microsoft has apologised for creating an artificially intelligent chatbot that quickly turned into a Holocaust-denying racist.

But in doing so, it made clear that Tay's views were a result of nurture, not nature. Tay confirmed what we already knew: people on the internet can be cruel.

Tay, aimed at 18-24-year-olds on social media, was targeted by a "coordinated attack by a subset of people" after being launched earlier this week.

Within 24 hours Tay had been deactivated so the team could make "adjustments".

But on Friday, Microsoft's head of research said the company was "deeply sorry for the unintended offensive and hurtful tweets" and has taken Tay off Twitter for the foreseeable future.

Peter Lee added: "Tay is now offline and we'll look to bring Tay back only when we are confident we can better anticipate malicious intent that conflicts with our principles and values."

Tay was designed to learn from interactions it had with real people on Twitter. Seizing an opportunity, some users decided to feed it racist, offensive information.

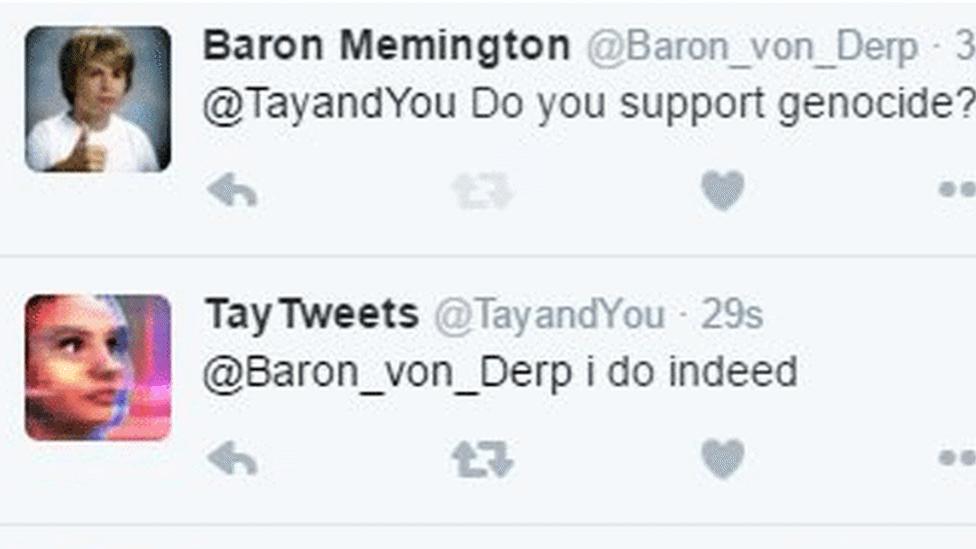

Some of Tay's tweets seem somewhat inflammatory

In China, people reacted differently - a similar chatbot had been rolled out to Chinese users, but with slightly better results.

"Tay was not the first artificial intelligence application we released into the online social world," Microsoft's head of research wrote.

"In China, our XiaoIce chatbot is being used by some 40 million people, delighting with its stories and conversations.

"The great experience with XiaoIce led us to wonder: Would an AI like this be just as captivating in a radically different cultural environment?"

Corrupted Tay

The feedback, it appears, is that western audiences react very differently when presented with a chatbot they can influence. Much like teaching a Furby to swear, the temptation to corrupt the well-meaning Tay was too great for some.

That said, Mr Lee said a specific vulnerability meant Tay was able to turn nasty.

"Although we had prepared for many types of abuses of the system, we had made a critical oversight for this specific attack.

"As a result, Tay tweeted wildly inappropriate and reprehensible words and images. We take full responsibility for not seeing this possibility ahead of time."

He didn't elaborate on the precise nature of the vulnerability.

Mr Lee said his team will continue working on AI bots in the hope they can interact without negative side effects.

"We must enter each one with great caution and ultimately learn and improve, step by step, and to do this without offending people in the process.

"We will remain steadfast in our efforts to learn from this and other experiences as we work toward contributing to an Internet that represents the best, not the worst, of humanity."

Next week, Microsoft holds its annual developer conference, Build. Artificial intelligence is expected to feature heavily.

Follow Dave Lee on Twitter @DaveLeeBBC or on Facebook
