Artificial intelligence: How to avoid racist algorithms

Only white babies appear in a search for "babies" on Microsoft search engine Bing...

There is growing concern that many of the algorithms that make decisions about our lives - from what we see on the internet to how likely we are to become victims or instigators of crime - are trained on data sets that do not include a diverse range of people.

The result can be that the decision-making becomes inherently biased, albeit accidentally.

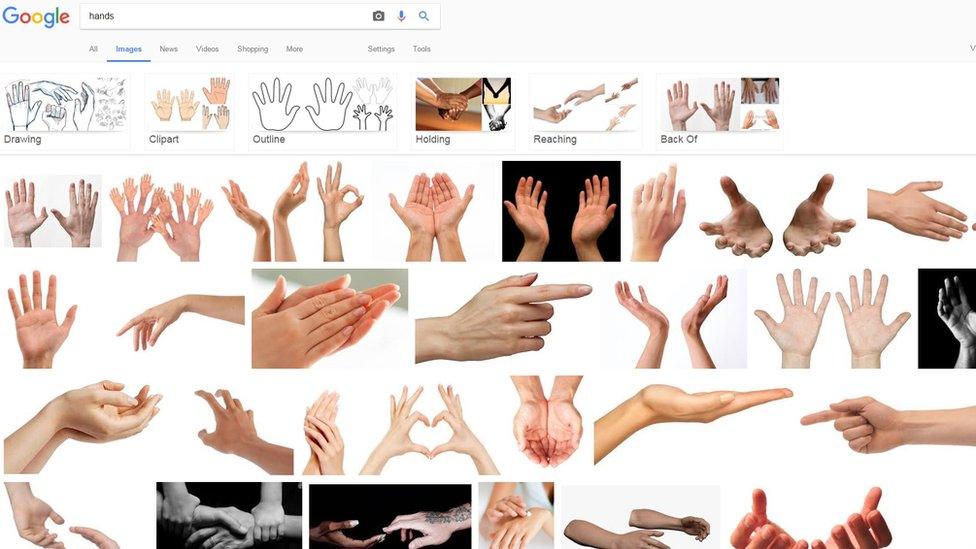

Try searching online for an image of "hands" or "babies" using any of the big search engines and you are likely to find largely white results.

In 2015, graphic designer Johanna Burai created the World White Web project after searching for an image of human hands and finding exclusively white hands in the top image results on Google.

Her website offers "alternative" hand pictures that can be used by content creators online to redress the balance and thus be picked up by the search engine.

Google says its image search results are "a reflection of content from across the web, including the frequency with which types of images appear and the way they're described online" and are not connected to its "values".

Ms Burai, who no longer maintains her website, believes things have improved.

"I think it's getting better... people see the problem," she said.

"When I started the project people were shocked. Now there's much more awareness."

...and white hands appear if you type "hands" into Google.

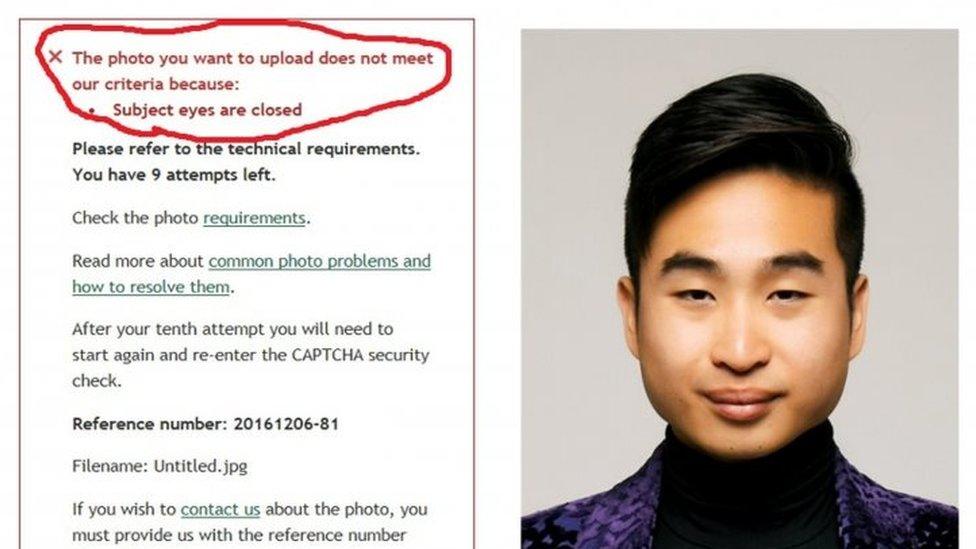

The Algorithmic Justice League (AJL) was launched by Joy Buolamwini, a postgraduate student at the Massachusetts Institute of Technology, in November 2016.

She was trying to use facial recognition software for a project but it could not process her face - Ms Buolamwini has dark skin.

"I found that wearing a white mask, because I have very dark skin, made it easier for the system to work," she says.

"It was the reduction of a face to a model that a computer could more easily read."

It was not the first time she had encountered the problem.

Five years earlier, she had had to ask a lighter-skinned room-mate to help her.

"I had mixed feelings. I was frustrated because this was a problem I'd seen five years earlier was still persisting," she said.

"And I was amused that the white mask worked so well."

Joy Buolamwini found her computer system recognised the white mask, but not her face.

Ms Buolamwini describes the reaction to the AJL as "immense and intense".

This ranges from teachers wanting to show her work to their students, and researchers wanting her to check their own algorithms for signs of bias, to people reporting their own experiences.

And there seem to be quite a few.

One researcher wanted to check that an algorithm being built to identify skin melanomas (skin cancer) would work on dark skin.

"I'm now starting to think, are we testing to make sure these systems work on older people who aren't as well represented in the tech space?" Ms Buolamwini says.

"Are we also looking to make sure these systems work on people who might be overweight, because of some of the people who have reported it? It is definitely hitting a chord."

Diverse data

Ms Buolamwini thinks the situation has arisen partly because of the well-documented lack of diversity within the tech industry itself.

Every year the tech giants release diversity reports and they make for grim reading.

Google's latest figures (January 2016) state that 19% of its tech staff are women and just 1% are black.

At Microsoft in September 2016, 17.5% of the tech workforce were women and 2.7% were black or African American.

At Facebook in June 2016, 17% of US tech staff were women and 1% were black.

You get the picture. But what has that got to do with algorithms?

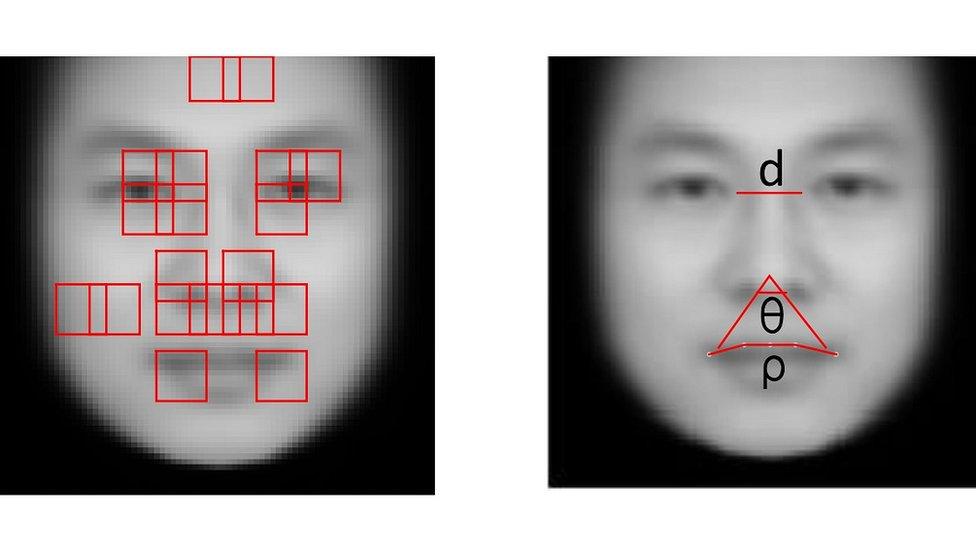

"If you test your system on people who look like you and it works fine then you're never going to know that there's a problem," Joy Buolamwini argues.
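One way to picture that argument is to score a system separately for each group of people rather than as a single overall figure. The sketch below is only an illustration: the `accuracy_by_group` helper, the group names and the test results are all invented for this example and do not come from any real face-detection system.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: share of correct predictions}.

    Each record is a (group, predicted, actual) tuple. The helper and the
    data below are hypothetical, made up purely for illustration.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


# Invented test results: (skin-tone group, face detected?, face present?)
results = [
    ("lighter", True, True),
    ("lighter", True, True),
    ("lighter", True, True),
    ("lighter", False, True),
    ("darker", True, True),
    ("darker", False, True),
    ("darker", False, True),
    ("darker", False, True),
]

per_group = accuracy_by_group(results)
overall = sum(predicted == actual for _, predicted, actual in results) / len(results)

print(per_group)  # accuracy for each group separately
print(overall)    # the single figure that hides the gap
```

In this made-up data the system is right three times out of four for one group and once in four for the other, a gap that the combined score of one in two does not reveal. A test set containing only the first group would show no problem at all.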

Biased beauty

Of the 44 winners of a beauty contest judged by algorithms last year, chosen from some 6,000 selfies uploaded from 100 different countries, only one had dark skin and a handful were Asian.

Alex Zhavoronkov, Beauty.AI's chief science officer, told the Guardian the result was flawed because the data set used to train the AI (artificial intelligence) had not been diverse enough.

"If you have not that many people of colour within the data set, then you might actually have biased results," he said at the time.

On a more serious note, AI software used in the US to predict which convicted criminals might reoffend was found to be more likely to incorrectly identify black offenders as high risk and white offenders as low risk, according to a study by the website ProPublica. The software firm disputed these findings.
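The kind of disparity described here is usually expressed as two error rates per group: how often people who did not reoffend were flagged as high risk (false positives), and how often people who did reoffend were rated low risk (false negatives). The sketch below shows how such rates could be computed. The `error_rates` function, the group labels "A" and "B" and every number in it are invented for illustration; they are not the study's data or method.

```python
def error_rates(records):
    """Compute false positive and false negative rates per group.

    Each record is (group, predicted_high_risk, reoffended).
    Hypothetical helper for illustration only.
    """
    counts = {}
    for group, predicted_high, reoffended in records:
        c = counts.setdefault(group, {"fp": 0, "neg": 0, "fn": 0, "pos": 0})
        if reoffended:
            c["pos"] += 1
            if not predicted_high:
                c["fn"] += 1  # rated low risk but did reoffend
        else:
            c["neg"] += 1
            if predicted_high:
                c["fp"] += 1  # rated high risk but did not reoffend
    return {
        group: {
            "false_positive_rate": c["fp"] / c["neg"] if c["neg"] else None,
            "false_negative_rate": c["fn"] / c["pos"] if c["pos"] else None,
        }
        for group, c in counts.items()
    }


# Invented records, not real figures.
toy_records = (
    [("A", True, False)] * 2 + [("A", False, False)] * 2
    + [("A", True, True)] * 3 + [("A", False, True)] * 1
    + [("B", True, False)] * 1 + [("B", False, False)] * 3
    + [("B", True, True)] * 2 + [("B", False, True)] * 2
)

rates = error_rates(toy_records)
print(rates)
```

In this toy data, group A is wrongly flagged as high risk twice as often as group B, while group B is wrongly rated low risk twice as often as group A, even though the software is right about the same share of people in each group.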

Suresh Venkatasubramanian, an associate professor at the University of Utah school of computing, says creators of AI need to act now while the problem is still visible.

"The worst that can happen is that things will change and we won't realise it," he told the BBC.

"In other words the concern has been that the bias, or skew, in decision-making will shift from things we recognise as human prejudice to things we no longer recognise and therefore cannot detect - because we will take the decision-making for granted."

Are we accidentally programming prejudiced robots?

He is, however, optimistic about tech's progress.

"To say all algorithms have racist manifestations doesn't make sense to me," he says.

"Not because it's impossible but because that's not how it's actually working.

"In the last three to four years what's picked up is the discussion around the problems and possible solutions," he adds.

He offers a number of these:

- creating better and more diverse data sets with which to train the algorithms (they learn by processing thousands of examples, such as images)

- sharing best practice among software vendors, and

- building algorithms which explain their decision-making so that any bias can be understood.
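The first of these suggestions starts with knowing who is actually in the training data. As a rough sketch, assuming each training image carries a group label, the share of each group can be counted and any group below a chosen threshold flagged. The function names, the labels and the 20% threshold are all assumptions made for this example.

```python
from collections import Counter


def group_shares(labels):
    """Return {group: share of the data set} for a list of group labels."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def underrepresented(labels, min_share=0.2):
    """List groups whose share falls below min_share (an arbitrary threshold)."""
    return sorted(g for g, share in group_shares(labels).items() if share < min_share)


# Invented labels for a ten-image training set.
training_labels = ["lighter"] * 9 + ["darker"] * 1

shares = group_shares(training_labels)
flagged = underrepresented(training_labels)
print(shares)
print(flagged)
```

A count like this says nothing about whether an algorithm is biased, but it is a cheap first check before training begins.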

Ms Buolamwini says she is hopeful that the situation will improve if people are more aware of the potential problems.

"Any technology that we create is going to reflect both our aspirations and our limitations," she says.

"If we are limited when it comes to being inclusive that's going to be reflected in the robots we develop or the tech that's incorporated within the robots."
