The one law of robotics: Humans must flourish

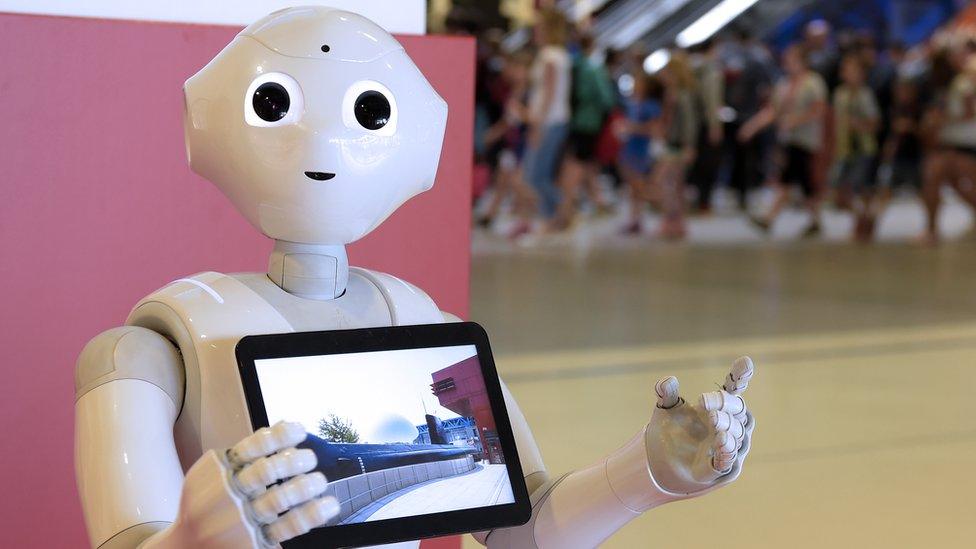

Could robots harm humans?

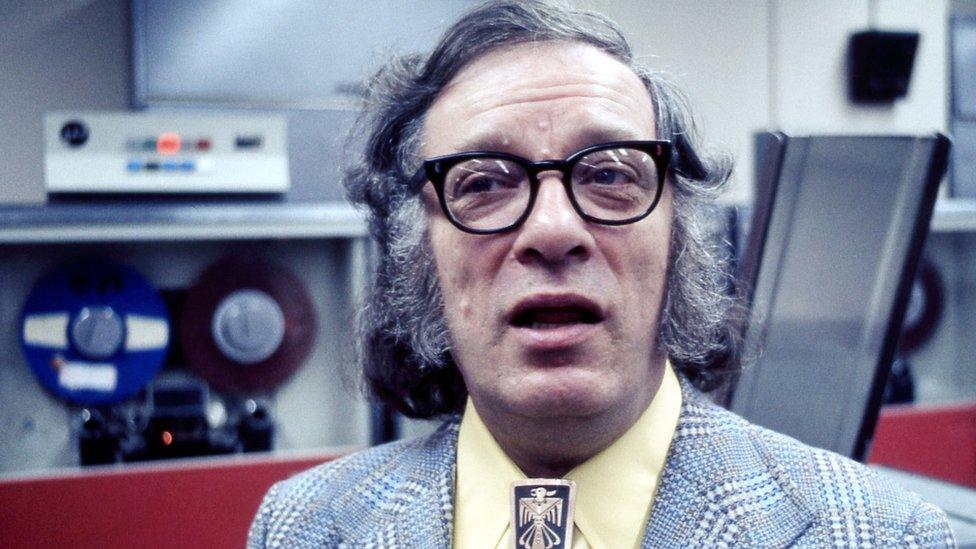

The science-fiction writer Isaac Asimov wrote about controlling intelligent machines with the three laws of robotics:

A robot may not injure a human being or, through inaction, allow a human being to come to harm

A robot must obey orders given to it by human beings except where such orders would conflict with the first law

A robot must protect its own existence as long as such protection does not conflict with the first or second law

As so often is the case, science fiction has become science fact. A report published by the Royal Society and the British Academy, external suggests that there should not be three but just one overarching principle to govern the intelligent machines that we will soon be living alongside: "Humans should flourish."

According to Prof Dame Ottoline Leyser, who co-chairs the Royal Society's science policy advisory group, human flourishing should be the key to how intelligent systems governed.

Isaac Asimov laid out some laws for robots

"This was the term that really encapsulated what we wanted to say," she told BBC News.

"The thriving of people and communities needs to be put first, and we think Asimov's principles can be subsumed into that."

The report calls for a new body to ensure intelligent machines serve people rather than control them.

It says that a system of democratic supervision is essential to regulate the development of self-learning systems.

Without it they have the potential to cause great harm, the report says.

It is not warning of machines enslaving humanity, at least not yet.

But when systems that learn and make decisions independently are used in the home and across a range of commercial and public services, there is scope for plenty of bad things to happen.

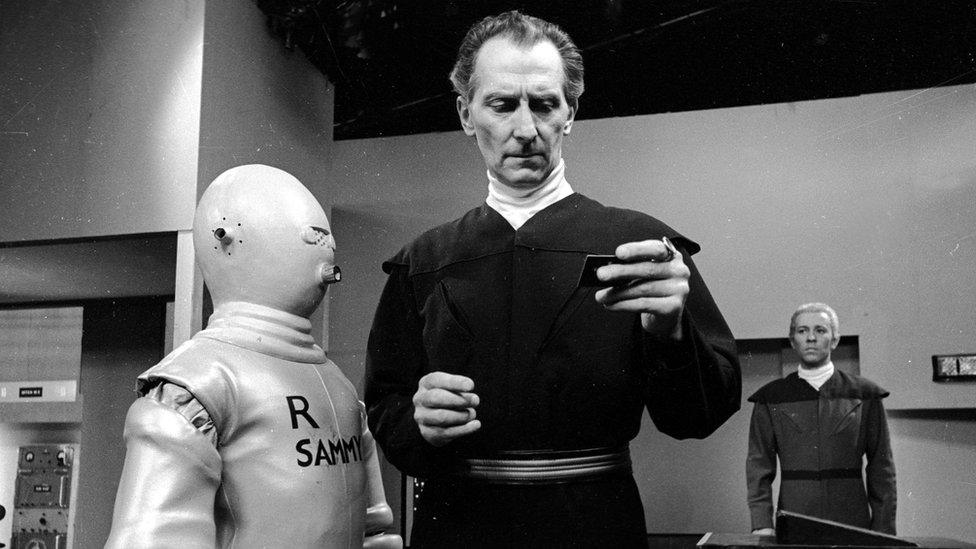

In Asimov's Caves Of Steel, humans lived closely alongside robots

The report calls for safeguards to prioritise the interests of humans over machines.

The development of such systems cannot be governed solely by technical standards. They also have to be imbued with ethical and democratic values, according to Antony Walker, who is deputy chief executive of the lobby group TechUK and another of the report's authors.

"There are many benefits that will come out of these technologies, but the public has to have the trust and confidence that these systems are being thought through and governed properly," he said.

The age of Asimov

The report calls for a completely new approach. It suggests a "stewardship body" of experts and interested parties should build an ethical framework for the development of artificial intelligence technologies.

It recommends four high-level principles to promote human flourishing:

- Protect individual and collective rights and interests
- Ensure transparency, accountability and inclusivity
- Seek out good practices and learn from success and failure
- Enhance existing democratic governance

And the need for a new way to govern machines is urgent. The age of Asimov is already here.

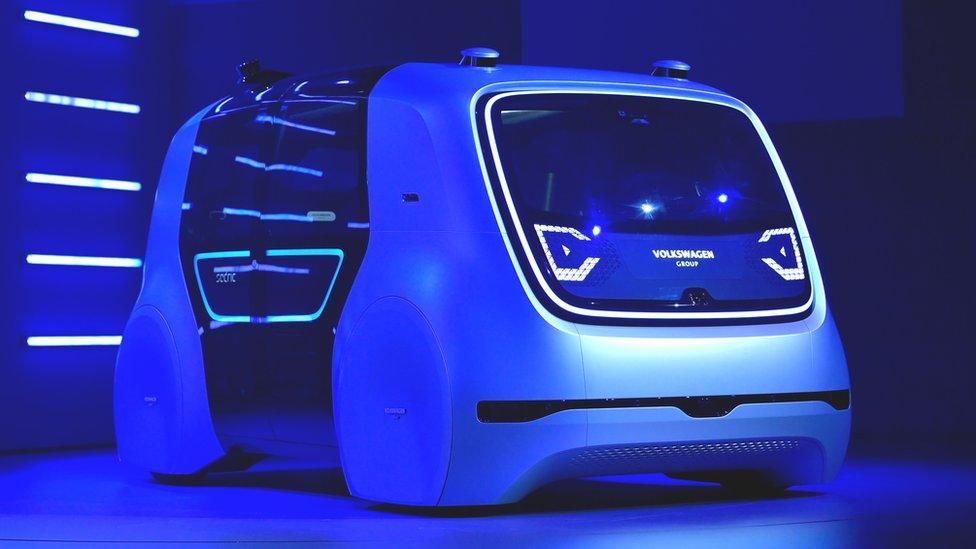

The development of autonomous vehicles, for example, raises questions about how human safety should be prioritised.

What happens in a situation where the machine has to choose between the safety of those in the vehicle and pedestrians?

There is also the issue of determining liability if there is an accident. Was it the fault of the vehicle owner or the machine?

Another example is the emergence of intelligent systems for personalised tuition.

These identify a student's strengths and weaknesses and teach accordingly.

Several companies are testing driverless cars

Should such a self-learning system be able to teach without proper guidelines?

How do we make sure that we are comfortable with the way in which the machine is directing the child, just as we are concerned about the way in which a tutor teaches a child?

These issues are not for the technology companies that develop the systems to resolve; they are for all of us.

It is for this reason that the report argues that details of intelligent systems cannot be kept secret for commercial reasons.

They have to be publicly available so that if something starts to go wrong it can be spotted and put right.

Current regulations focus on personal data.

But they have nothing to say about the data we give away on a daily basis, through tracking of our mobile phones, our purchasing preferences, electricity smart meters and online "likes".

There are systems that can piece together this public data and build up a personality profile that could potentially be used by insurance companies to set premiums, or by employers to assess suitability for certain jobs.

Such systems can offer huge benefits, but if unchecked we could find our life chances determined by machines.

The key, according to Prof Leyser, is that regulation has to be on a case-by-case basis.

"An algorithm to predict what books you should be recommended on Amazon is a very different thing from using an algorithm to diagnose your disease in a medical situation," she told the BBC.

"So, it is not sensible to regulate algorithms as a whole without taking into account what it is being used for."

The Conservative Party promised a digital charter in its manifesto, and the creation of a data use and ethics commission.

While most of the rhetoric by ministers has been about stopping the internet from being used to incite terrorism and violence, some believe that the charter and commission might also adopt some of the ideas put forward in the data governance report.

The UK's Minister for Digital, Matt Hancock, told the BBC that it was "critical" to get the rules right on how we used data as a society.

"Data governance, and the effective and ethical use of data, are vital for the future of our economy and society," he said.

"We are committed to continuing to work closely with industry to get this right."

Fundamentally, intelligent systems will take off only if people trust them and how they are regulated.

Without that, the enormous potential these systems have for human flourishing will never be fully realised.

- Published 27 May 2015